Target detection and counting method for Acetes chinensis fishing vessels operation based on improved YOLOv7

摘要:

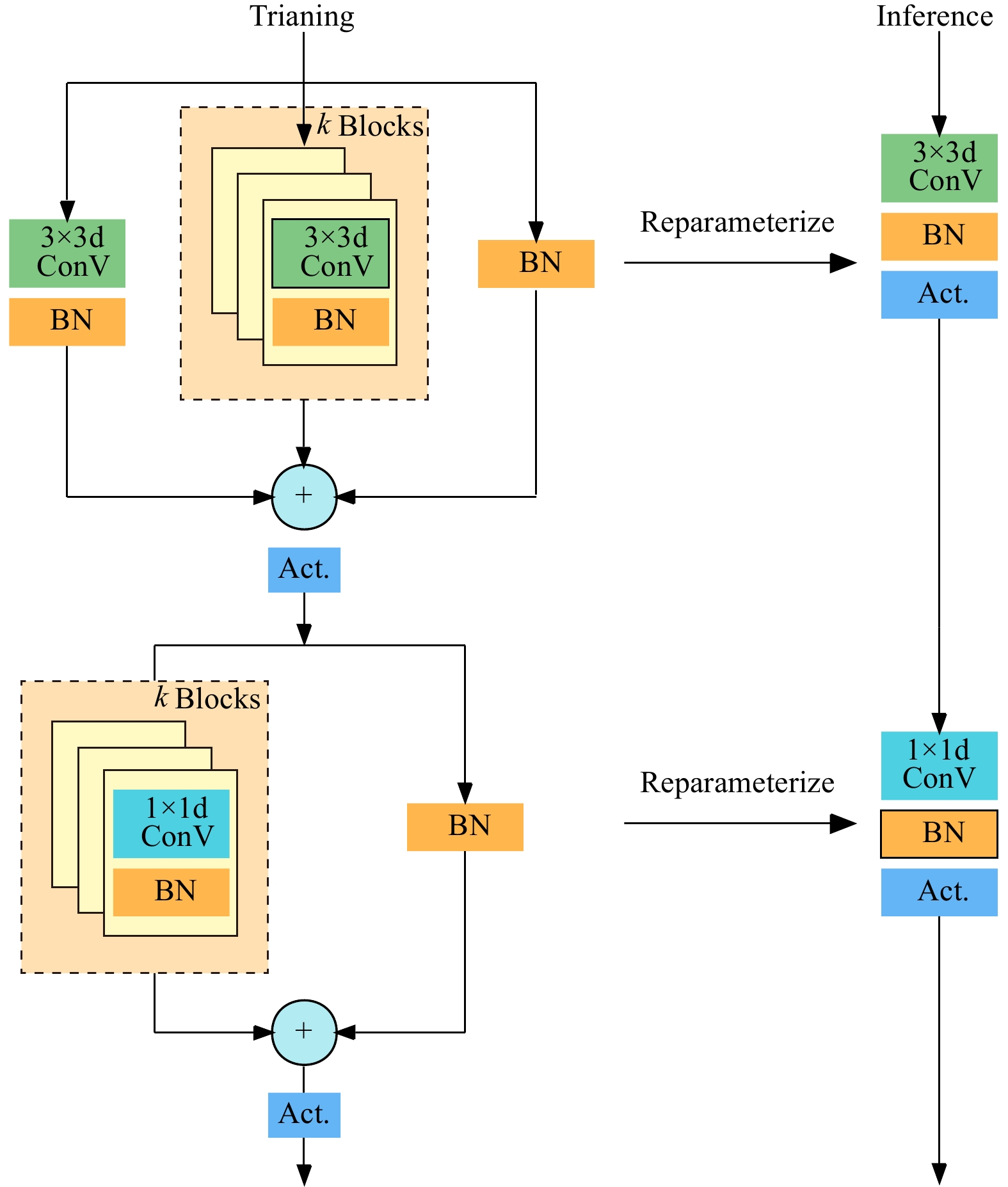

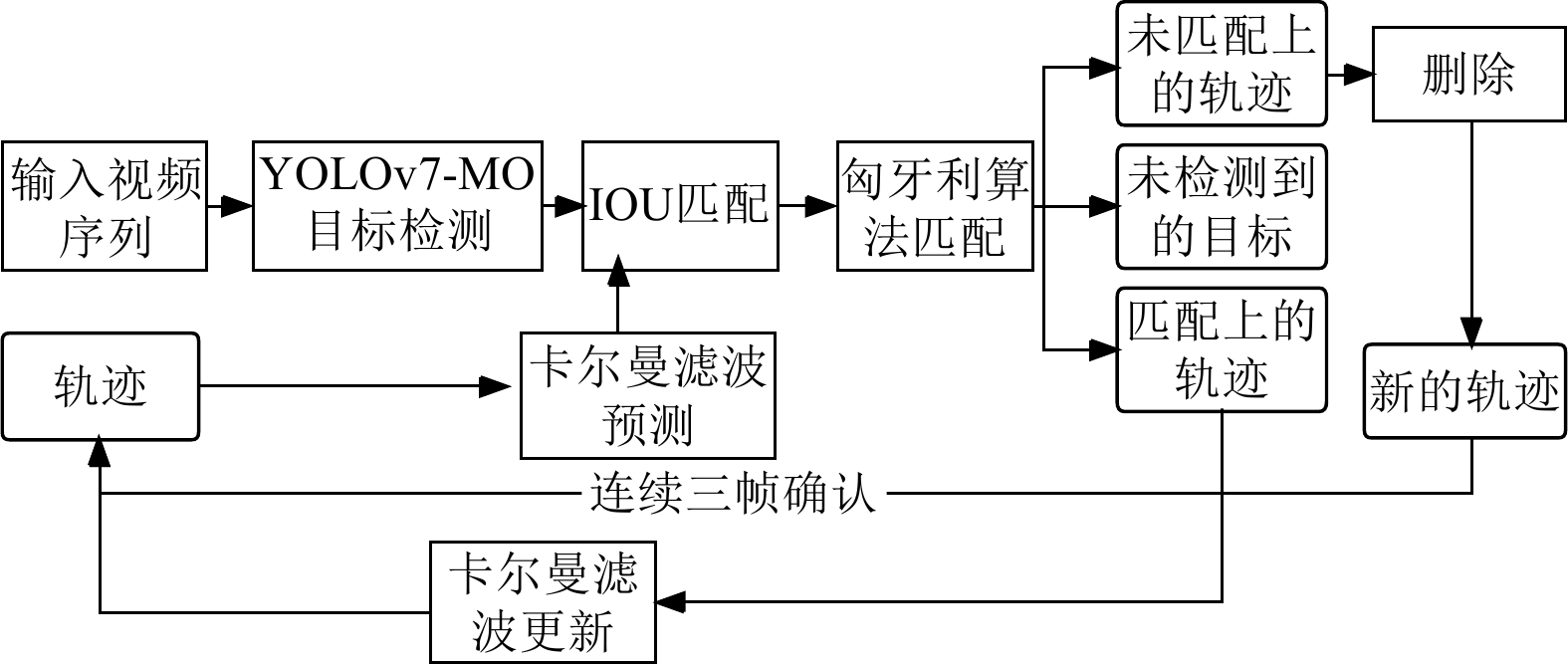

渔船捕捞信息量化是开展限额捕捞精细化管理的前提,为解决中国毛虾限额捕捞目标识别和信息统计量化问题,研究了在中国毛虾限额捕捞渔船上安装电子监控(electronic monitoring,EM)设备,并基于YOLOv7提出一种改进的目标检测算法(YOLOv7-MO)和目标计数算法(YOLOv7-MO-SORT)。YOLOv7-MO目标检测算法采用MobileOne作为主干网络,在输出端head部分加入C3模块,并完成剪枝操作;YOLOv7-MO-SORT目标计数算法将SORT(simple online and realtime tracking)算法中的Fast R-CNN替换为YOLOv7-MO,用于检测捕捞作业中抛出的锚和装有毛虾的筐。采用卡尔曼滤波和匈牙利匹配算法对检测到的目标进行跟踪预测,设置碰撞检测线、时间戳、阈值和计数器,实现对捕捞作业过程中渔获毛虾筐数和下网数量计数。结果表明:1)改进后的YOLOv7-MO在测试集上的平均检测精度、召回率、F1得分分别达到了97.3%,96.0%,96.6%,相比YOLOv7模型分别提升了2.0、1.1和1.5个百分点。2)改进后的YOLOv7-MO模型大小、参数量和浮点运算数分别为64.0 MB、32.6 M、39.7 G,相比YOLOv7模型分别缩小了10.2%、10.6%和61.6%。3)以YOLOv7-MO为检测器的SORT算法毛虾捕捞作业计数准确率在统计毛虾筐数和下网数量上分别达到80.0%和95.8%。YOLOv7-MO在提高检测精度的同时减轻了模型量级,提高了检测效率。结果表明,该研究能够为实现渔船捕捞作业信息记录自动化和智能化提供方法,为毛虾限额捕捞管理提供决策参考依据。

Abstract: Overfishing has been one of the greatest threats to marine biodiversity in recent years, and the production of many catches, including Acetes chinensis, is declining year by year. An Acetes chinensis fishing quota was introduced in China in 2020 to protect marine biodiversity. Accurate and rapid quantification of the catch information of fishing vessels is one of the most important prerequisites for the fine-grained management of quota fishing. This study addresses target identification and the statistical quantification of Acetes chinensis quota fishing. Electronic monitoring (EM) devices were installed on Acetes chinensis quota fishing vessels to record the main operations on board. An improved target detection algorithm (YOLOv7-MO) and a target counting algorithm (YOLOv7-MO-SORT), both based on YOLOv7, were proposed to detect and count the targets of interest. YOLOv7-MO uses MobileOne as the backbone network, adds C3 modules to the head, and is then pruned. YOLOv7-MO-SORT replaces the Faster R-CNN detector of the SORT (Simple Online and Realtime Tracking) algorithm with YOLOv7-MO in order to detect the anchors thrown during fishing operations and the baskets containing Acetes chinensis. Kalman filtering and the Hungarian matching algorithm were used to track and predict the detected targets. In line with the characteristics of actual operations, collision detection lines, timestamps, thresholds, and counters were set to count the baskets of Acetes chinensis caught and the nets deployed during fishing.
The results show that: (1) The improved YOLOv7-MO achieved a precision, recall, and F1 score of 97.3%, 96.0%, and 96.6%, respectively, on the test set, which are 2.0, 1.1, and 1.5 percentage points higher than those of the original YOLOv7. (2) The model size, number of parameters, and number of floating-point operations of YOLOv7-MO were 64.0 MB, 32.6 M, and 39.7 G, respectively, which are 10.2%, 10.6%, and 61.6% smaller than those of YOLOv7. (3) With YOLOv7-MO as the detector, the SORT-based counting accuracy reached 80.0% for baskets of Acetes chinensis and 95.8% for nets. YOLOv7-MO therefore reduces model size while improving detection accuracy and efficiency, and SORT with YOLOv7-MO as the detector quantifies the main operational information of fishing vessels more accurately. This capability can support the management and recording of fishing vessel operations and avoid some drawbacks of traditional manual recording. The basket counts also make it more convenient to calculate fishing parameters such as the catch per unit effort (CPUE) of Acetes chinensis. The findings provide a reference for automating the recording of fishing operations on offshore vessels, particularly for decision-making in Acetes chinensis quota fishing.
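The counting step summarized in the abstract (a collision detection line, timestamps, a threshold, and a counter) can be illustrated with a small sketch. This is not the authors' implementation: the class name `LineCounter`, the horizontal line position `line_y`, the downward crossing direction, and the minimum gap `min_gap_s` are all hypothetical choices made for illustration, and the track identifiers are assumed to come from a tracker such as SORT.

```python
from dataclasses import dataclass, field


@dataclass
class LineCounter:
    """Counts tracked objects whose centre crosses a horizontal line (hypothetical helper)."""
    line_y: float                 # assumed position of the detection line, in pixels
    min_gap_s: float = 1.0        # assumed minimum time between two counted events
    count: int = 0
    _last_y: dict = field(default_factory=dict)   # track id -> previous centre y
    _last_event_t: float = float("-inf")          # timestamp of the last counted event

    def update(self, track_id: int, centre_y: float, timestamp_s: float) -> bool:
        """Feed one tracked detection; return True if it was counted."""
        prev_y = self._last_y.get(track_id)
        self._last_y[track_id] = centre_y
        if prev_y is None:
            return False  # first sighting of this track, nothing to compare against
        crossed = prev_y < self.line_y <= centre_y  # moved downward across the line
        if crossed and timestamp_s - self._last_event_t >= self.min_gap_s:
            self.count += 1
            self._last_event_t = timestamp_s
            return True
        return False


counter = LineCounter(line_y=100.0, min_gap_s=2.0)
counter.update(1, 90.0, 0.0)
counter.update(1, 105.0, 0.5)   # track 1 crosses the line and is counted
counter.update(2, 95.0, 1.0)
counter.update(2, 110.0, 1.2)   # track 2 crosses within 2 s of the last event, ignored
```

The time threshold is one plausible way to suppress double counts when the same object is briefly assigned a second track identifier; the actual thresholds used on board are not given in the abstract.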

Keywords:

- computer vision
- target detection
- Acetes chinensis
- quota fishing
- electronic monitoring systems
- YOLOv7
- SORT

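0. Introduction

According to the forecast of the 2017 World Blueberry Conference and Summit Forum (Qujing, Yunnan), China will become the world's largest blueberry producer in 2026-2027 [1]. Available data put the national blueberry cultivation area at 66 400 hm², with a total output of 347 200 t and a fresh-fruit output of 234 700 t [2]. Picking blueberries is labour-intensive, and the industry is gradually moving from manual to intelligent picking, for which determining ripeness is the first task. Accurately identifying blueberry ripeness in natural environments is therefore particularly important.

Researchers in China and abroad have made progress in classifying fruit ripeness with deep learning. MUTHA et al. [3] built a custom image dataset and used the YOLOv3 convolutional neural network to detect the ripeness of tomatoes and locate them. KO et al. [4] designed a real-time tomato ripeness classification system based on an object detection deep learning model; it ran at 97 frames/s (frames per second) with an average accuracy of 91.3%. SU et al. [5] proposed the SE-YOLOv3-MobileNetV1 model, which distinguishes four ripeness levels of tomato with an average precision of 97.5%. FAN et al. [6], aiming to improve the accuracy of strawberry identification and picking, trained on images processed with the dark channel method to raise the recognition rate of strawberries under low illumination at night. DANH et al. [7] applied deep transfer learning to tomato classification; the VGG19 model reached an accuracy of 94.14% when detecting the ripeness of cherry tomatoes. CHEN et al. [8] proposed a citrus ripeness detection method that combines visual saliency with a convolutional neural network to identify three ripeness levels. Fruit ripeness matters greatly to blueberry growers and breeders when picking. MACEACHERN et al. [9] applied YOLOv4 to blueberry ripeness detection and reported high accuracy, but the computational cost of YOLOv4 significantly reduces speed once the model is migrated to small embedded devices. WANG Lishu et al. [10] designed an improved YOLOv4-Tiny network that, in complex scenes with occlusion and uneven illumination, reached an average precision of 96.24% for immature, undermature, and mature blueberries with an average detection time of 5.723 ms, meeting both the accuracy and the speed requirements of blueberry recognition.

These studies show that deep learning models can effectively identify the ripeness of fruit in the field. For blueberries, however, overmature fruit has not been considered. In addition, blueberries in the field grow in dense clusters, fruits occlude each other or are occluded by branches and leaves, and shading by foliage leaves the fruit unevenly lit. Because of these interfering factors, accurate detection of blueberries in complex field environments remains challenging. Furthermore, the original deep learning models are large and computationally expensive, so real-time detection is difficult to guarantee on the performance-limited edge devices used in the field, which makes lightweight models important. This study proposes a blueberry detection method based on YOLOv8: the backbone network is replaced to make the model lightweight, an attention mechanism is embedded in the backbone, and the bounding-box regression loss is improved. The aim is to raise detection accuracy and speed while reducing the number of parameters, providing technical support for blueberry picking robots operating in complex field environments.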

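1. Image data acquisition and preprocessing

1.1 Image acquisition

Images were collected at the small berry research and practice base of Shenyang Agricultural University, Dongling Road, Shenhe District, Shenyang, Liaoning Province. The row spacing of the blueberry plants is about 1.1 m, which suits a blueberry picking robot. Images were taken on sunny and cloudy days at 9:00, 12:30, and 15:00 with the built-in camera of an iPhone 13 Pro (telephoto lens aperture f/2.8, wide-angle lens aperture f/1.5). A total of 1 000 blueberry images were collected at a resolution of 4 032 × 3 024 pixels in JPG format.

Based on how a picking robot operates in a blueberry orchard and on expert advice, blueberry ripeness was divided into four classes: immature, undermature, mature, and overmature, as shown in Figure 1.

Blueberries growing in the field are subject to background leaf interference, occlusion by branches and leaves, overlapping fruit, and backlighting, as shown in Figure 2.

1.2 Image data preprocessing

Image preprocessing was carried out to increase data diversity and train the model better. The collected images were first divided into training, validation, and test sets at a ratio of 7:2:1. The training set was then augmented with a deep convolutional generative adversarial network (DCGAN) [11]: the collected blueberry images were fed into the DCGAN, which generated 9 000 blueberry images. The test set contains 224 overmature, 1 053 mature, 342 undermature, and 207 immature fruits. The blueberry images were annotated with the labelImg annotation tool.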
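The 7:2:1 split described above can be sketched in a few lines. The file names, the fixed random seed, and the helper name `split_dataset` are assumptions for illustration; the source does not state how the images were shuffled or assigned.

```python
import random


def split_dataset(items, ratios=(7, 2, 1), seed=0):
    """Shuffle items reproducibly and cut them into train/val/test by integer ratios."""
    items = list(items)
    random.Random(seed).shuffle(items)  # local generator, global state untouched
    total = sum(ratios)
    n_train = len(items) * ratios[0] // total
    n_val = len(items) * ratios[1] // total
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]      # remainder goes to the test set
    return train, val, test


# Hypothetical file names standing in for the 1 000 collected images.
train, val, test = split_dataset(f"img_{i:04d}.jpg" for i in range(1000))
```

With 1 000 images this yields 700, 200, and 100 files. Splitting before augmentation, as the text describes, keeps generated images out of the validation and test sets.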

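2. MSC-YOLOv8 object detection network

2.1 YOLOv8 network architecture

YOLOv8 is one of the object detection models in the YOLO series. It is available in five versions: YOLOv8n, YOLOv8s, YOLOv8m, YOLOv8l, and YOLOv8x. Of these, YOLOv8n has slightly lower accuracy but the lightest architecture and the fastest detection speed [12]. For this reason, YOLOv8n was selected as the base model for blueberry ripeness recognition.

YOLOv8 consists of four parts: the input, the backbone network (Backbone), the neck network (Neck), and the head network (Head) [13]. The backbone comprises 5 convolution blocks, 4 C2f blocks, and 1 SPPF block. Compared with the C3 block in the YOLOv5 backbone, the C2f block has markedly fewer parameters and therefore a lower computational cost. The neck uses a path aggregation network (PANet) [14]; compared with the feature pyramid network (FPN) [15], PANet adds a bottom-up path so that low-level information reaches the top layers more easily. The head separates the regression branch from the prediction branch, a design that helps the model converge faster during training and improves overall performance.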

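2.2 Improved YOLOv8 object detection network

For object detection in complex field environments, the accuracy of blueberry recognition should be raised as far as possible while the ripeness classification remains real-time. This study makes three improvements to YOLOv8:

1) The backbone network of the original model is replaced with the lightweight MobileNetV3 structure.

2) The convolutional block attention module (CBAM) is introduced into the backbone network.

3) The bounding-box loss function of the original model is replaced with SIoU.

The improved network is named MSC-YOLOv8. It contains four parts: the input layer, the backbone feature extraction network, the path aggregation network, and the output layer (Figure 3). The input layer receives a 640 × 640 pixel blueberry image, the backbone extracts features, the path aggregation network fuses shallow and deep features in both directions, and the output layer produces the predicted boxes and their classes.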

![]() Figure 3. Network structure of MSC-YOLOv8. Note: Conv2d is convolution, Concat is feature fusion by channel-wise concatenation, BN is batch normalization, CBAM is the convolutional block attention module, MNI represents MNIneck, and Upsample represents upsampling.

The backbone feature extraction network of MSC-YOLOv8 combines the lightweight MobileNetV3 network with the CBAM attention mechanism and consists of the CBH, MNI, CBAM, and SPPF modules, as shown in Figure 3. The CBH module is a convolution layer followed by batch normalization (BN) and an HSwish function, and performs the first processing of the blueberry image. The MNI module extracts blueberry image features, and CBAM further strengthens feature extraction. The backbone produces three effective feature layers, which are passed to the path aggregation network (neck). The neck fuses these three layers through top-down and bottom-up paths. The fused features are then sent to three heads, which output the predicted boxes and their classes.

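2.2.1 Improved backbone feature extraction network

For real-time detection in complex field environments, the size and computational complexity of the original model make direct deployment on edge devices difficult, so a lightweight design is needed. Lightweighting aims to reduce model size and computational demand while preserving or improving performance. MobileNetV3 is a lightweight network that has markedly fewer parameters and less computation than the original YOLOv8 backbone, which makes real-time detection on edge devices more feasible [16]. This study therefore adopts MobileNetV3 as the backbone of the improved YOLOv8 model. MobileNetV3 is an architecture obtained with neural architecture search (NAS) [17]. It makes extensive use of 1×1 and 3×3 convolutions in place of the 5×5 convolutions of the earlier version, which markedly reduces the number of parameters. This design preserves the high-dimensional feature space while reducing back-propagation latency. MobileNetV3 also introduces residual blocks and a lightweight attention module designed as a bottleneck structure, which extracts features better.

Compared with the earlier version, MobileNetV3 redesigns the time-consuming layers: the number of kernels in the first convolution layer is reduced from 32 to 16, and the last stage is simplified. To improve performance further, MobileNetV3 replaces the swish activation function with h-swish, whose evaluation and differentiation are simpler and therefore cheaper. With these changes MobileNetV3 achieves a small model size and low computational complexity.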
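To make the activation change concrete, the sketch below compares swish, x·sigmoid(x), with h-swish, x·ReLU6(x + 3)/6, which is the standard definition used by MobileNetV3. The function names are chosen here for illustration.

```python
import math


def relu6(x: float) -> float:
    """Clamp x to the interval [0, 6]."""
    return min(max(x, 0.0), 6.0)


def h_swish(x: float) -> float:
    """Hard swish: piecewise-polynomial approximation that needs no exponential."""
    return x * relu6(x + 3.0) / 6.0


def swish(x: float) -> float:
    """Swish: x * sigmoid(x), shown for comparison."""
    return x / (1.0 + math.exp(-x))


for x in (-4.0, -1.0, 0.0, 1.0, 4.0):
    print(f"x={x:+.1f}  swish={swish(x):+.4f}  h_swish={h_swish(x):+.4f}")
```

Because h-swish only needs a clamp, an addition, and a multiplication, it avoids the exponential in the sigmoid, which is the source of the saving the text refers to.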

The structure of the MobileNetV3 network is listed in Table 1. This study uses the small version of MobileNetV3 in order to reduce the number of parameters. Figure 4 shows the bneck structure specific to MobileNetV3.

Table 1. Network structure of MobileNetV3

| Input | Layer | Kernel size | Exp size | Output channel | SE attention | Nonlinearities | Stride |
|---|---|---|---|---|---|---|---|
| 640²×3 | conv2d | 3×3 | - | 16 | × | HS | 2 |
| 112²×16 | bneck | 3×3 | 16 | 16 | √ | RE | 2 |
| 56²×16 | bneck | 3×3 | 72 | 24 | × | RE | 2 |
| 28²×24 | bneck | 3×3 | 88 | 24 | × | RE | 1 |
| 28²×24 | bneck | 5×5 | 96 | 40 | √ | HS | 2 |
| 14²×40 | bneck | 5×5 | 240 | 40 | √ | HS | 1 |
| 14²×40 | bneck | 5×5 | 240 | 40 | √ | HS | 1 |
| 14²×40 | bneck | 5×5 | 120 | 48 | √ | HS | 1 |
| 14²×48 | bneck | 5×5 | 144 | 48 | √ | HS | 1 |
| 14²×48 | bneck | 5×5 | 288 | 96 | √ | HS | 2 |
| 7²×96 | bneck | 5×5 | 576 | 96 | √ | HS | 1 |
| 7²×96 | bneck | 5×5 | 576 | 96 | √ | HS | 1 |
| 7²×96 | conv2d | 1×1 | - | 576 | √ | HS | 1 |
| 7²×576 | Pool | 7×7 | - | - | × | - | 1 |
| 1²×576 | conv2d, NBN | 1×1 | - | 1024 | × | HS | 1 |
| 1²×1024 | conv2d, NBN | 1×1 | - | k | × | - | 1 |

Note: bneck in the Layer column is the bneck structure specific to MobileNetV3 (Figure 4); NBN indicates that BN is not used. Exp size is the dimension after the first 1×1 expansion convolution in a bneck (the first bneck has no 1×1 expansion convolution). k in the Output channel column is the final number of output channels. In the SE attention column, "×" means that the SE attention mechanism is not used and "√" means that it is used. In the Nonlinearities column, HS is HardSwish and RE is ReLU. Stride is the step size (with stride = 2 the height and width are halved).

2.2.2 Adding the attention mechanism

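To improve the blueberry feature extraction of the YOLOv8n model, convolutional block attention modules were embedded at different levels of the backbone network.

CBAM is an attention module that combines spatial and channel attention [18]. It is highly lightweight: it contains no large convolution structures, only a few pooling layers and feature fusion operations, which avoids the heavy computation of convolutions and keeps its complexity and cost low. Its simple, flexible structure also makes it general enough to be used in many neural network architectures.

Figure 5 shows how a blueberry feature map is processed in CBAM. The feature map is first passed through global max pooling (MaxPool) and global average pooling (AvgPool) separately, compressing it along its spatial dimensions into two different feature descriptors.

The two pooled descriptors share one multilayer perceptron, which reduces the dimension with a 1×1 convolution and then restores it with another 1×1 convolution. The two outputs are added (layers.add()) and passed through a sigmoid activation to normalize the weight of each channel. The normalized weights are multiplied by the input feature map. The output of the channel attention is then processed in the spatial domain: the feature map undergoes max pooling and average pooling along the channel dimension, and the two resulting maps are stacked along the channel dimension (layers.concatenate()). A 1×1 convolution adjusts the number of channels, and a sigmoid function normalizes the weights, which are multiplied by the input of the spatial attention. In summary, the input feature map is weighted by the channel attention, passed to the spatial attention, and weighted again by the spatial weights to give the final feature map.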
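The channel-then-spatial flow described above can be traced on a tiny feature map with plain Python lists. This is a didactic sketch, not the module used in MSC-YOLOv8: the weights `w1`, `w2`, `w_max`, and `w_avg` are arbitrary hand-picked numbers rather than learned parameters, and the spatial step uses the 1×1 combination described in the text (the original CBAM paper uses a larger kernel there).

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def mlp(vec, w1, w2):
    """Shared two-layer perceptron: reduce the dimension, apply ReLU, expand again."""
    hidden = [max(0.0, sum(w * v for w, v in zip(row, vec))) for row in w1]
    return [sum(w * h for w, h in zip(row, hidden)) for row in w2]


def channel_attention(fmap, w1, w2):
    """fmap is a C x H x W nested list; returns one weight in (0, 1) per channel."""
    flat = [[v for row in ch for v in row] for ch in fmap]
    avg = [sum(ch) / len(ch) for ch in flat]   # global average pooling
    mx = [max(ch) for ch in flat]              # global max pooling
    return [sigmoid(a + m) for a, m in zip(mlp(avg, w1, w2), mlp(mx, w1, w2))]


def spatial_attention(fmap, w_max, w_avg, bias=0.0):
    """Returns an H x W weight map built from the channel-wise max and mean."""
    c, h, w = len(fmap), len(fmap[0]), len(fmap[0][0])
    weights = []
    for i in range(h):
        row = []
        for j in range(w):
            vals = [fmap[k][i][j] for k in range(c)]
            row.append(sigmoid(w_max * max(vals) + w_avg * sum(vals) / c + bias))
        weights.append(row)
    return weights


def cbam(fmap, w1, w2, w_max=1.0, w_avg=1.0):
    """Apply channel attention, then spatial attention, to a C x H x W feature map."""
    ch_w = channel_attention(fmap, w1, w2)
    refined = [[[v * ch_w[k] for v in row] for row in fmap[k]] for k in range(len(fmap))]
    sp_w = spatial_attention(refined, w_max, w_avg)
    return [[[refined[k][i][j] * sp_w[i][j] for j in range(len(sp_w[0]))]
             for i in range(len(sp_w))] for k in range(len(refined))]


# Two channels of size 2 x 2; w1 maps 2 -> 1 and w2 maps 1 -> 2 (reduction ratio 2).
fmap = [[[1.0, 2.0], [3.0, 4.0]], [[0.5, 0.5], [0.5, 0.5]]]
out = cbam(fmap, w1=[[0.5, 0.5]], w2=[[1.0], [1.0]])
```

Both attention steps only rescale the input by factors between 0 and 1, so the output keeps the shape of the input and, for positive inputs, every value shrinks.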

2.2.3 Selecting the loss function

Conventional object detection loss functions (GIoU [19], ICIoU [20], and the CIoU [21] used by YOLOv8) focus on bounding-box regression measures such as the distance, overlap area, and aspect ratio between the predicted and ground-truth boxes. They do not consider the direction of the mismatch between the two boxes. As a result, convergence is slower and less efficient, because the predicted box may wander during training and the final model may be worse. To address this, the original loss is replaced with the SIoU loss. SIoU [22-23] comprises four terms: angle loss, distance loss, shape loss, and the intersection-over-union loss.

1) The angle loss measures the difference between the predicted and true directions, as shown in Figure 6a. It is computed as follows.

![]() Figure 6. Schematic diagram of angle loss and distance loss. Note: B is the predicted bounding box and B^GT is the ground-truth bounding box. In panel a, the centers of the ground-truth and predicted boxes are the diagonal vertices of a rectangle; c_w and c_h are the width and height of this rectangle, σ is the length of its diagonal, α is the angle between σ and c_w, and β is the angle between σ and c_h. In panel b, c_wb and c_hb are the width and height of the minimum enclosing rectangle of the predicted and ground-truth boxes.

$$\Lambda = 1 - 2\sin^2\left(\arcsin(x) - \frac{\pi}{4}\right) \quad (1)$$

$$x = \frac{c_h}{\sigma} = \sin(\alpha) \quad (2)$$

$$\sigma = \sqrt{\left(b_{c_x}^{gt} - b_{c_x}\right)^2 + \left(b_{c_y}^{gt} - b_{c_y}\right)^2} \quad (3)$$

$$c_h = \max\left(b_{c_y}^{gt}, b_{c_y}\right) - \min\left(b_{c_y}^{gt}, b_{c_y}\right) \quad (4)$$

where Λ is the angle loss and sin(α) is the ratio of the opposite side to the hypotenuse of the right triangle; (b_{c_x}^{gt}, b_{c_y}^{gt}) are the center coordinates of the ground-truth box and (b_{c_x}, b_{c_y}) are the center coordinates of the predicted box.

2) The distance loss measures the distance between the predicted and true positions, as shown in Figure 6b. It is computed as follows.

$$\Delta = 2 - e^{-\gamma \rho_x} - e^{-\gamma \rho_y} \quad (5)$$

$$\rho_x = \left(\frac{b_{c_x}^{gt} - b_{c_x}}{c_{wb}}\right)^2, \quad \rho_y = \left(\frac{b_{c_y}^{gt} - b_{c_y}}{c_{hb}}\right)^2, \quad \gamma = 2 - \Lambda \quad (6)$$

where Δ is the distance loss, and c_wb and c_hb are the width and height of the minimum enclosing rectangle of the ground-truth and predicted boxes.

3) The shape loss measures how similar the shape of the predicted region is to that of the true target. It is computed as follows.

$$\Omega = \sum_{t = w,h} \left(1 - e^{-\omega_t}\right)^{\theta} \quad (7)$$

$$\omega_w = \frac{\left|w - w^{gt}\right|}{\max\left(w, w^{gt}\right)} \quad (8)$$

$$\omega_h = \frac{\left|h - h^{gt}\right|}{\max\left(h, h^{gt}\right)} \quad (9)$$

where Ω is the shape loss, w and h are the width and height of the predicted box, w^gt and h^gt are the width and height of the ground-truth box, and θ controls how much attention is paid to the shape loss.

4) The intersection over union (IoU) is the ratio of the intersection to the union of the predicted box and the ground-truth box. It is computed as follows.

$$\mathrm{IoU} = \frac{\left|B \cap B^{GT}\right|}{\left|B \cup B^{GT}\right|} \quad (10)$$

In summary, the SIoU bounding-box loss L_box is defined as follows.

$$L_{box} = 1 - \mathrm{IoU} + \frac{\Delta + \Omega}{2} \quad (11)$$

2.3 Training environment and method

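The training environment was as follows: an Intel Core i7-12700F CPU at 2.10 GHz, an RTX 3080 GPU with 12 GB of memory, 32 GB of RAM, PyTorch 1.10.0, and Python 3.8.0. The network parameters were optimized with stochastic gradient descent (SGD) [24], with an initial learning rate of 0.01, a momentum of 0.923, and a weight decay of 0.0003, for 150 epochs in total. To evaluate the blueberry ripeness model accurately, precision (P), recall (R), mean average precision (mAP), and detection speed were used as evaluation metrics [25].

Detection speed is measured as the time the model needs to process a single blueberry image, in ms.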
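The bounding-box regression term optimized during this training is the SIoU loss of Section 2.2.3. As a worked illustration, Eqs. (1) to (11) can be evaluated for a single pair of boxes as below. This is a plain-Python sketch rather than the training code: boxes are assumed to be given as (center x, center y, width, height), and θ = 4 is an assumed value because the text does not state the one used.

```python
import math


def siou_loss(pred, gt, theta=4.0):
    """SIoU box loss for boxes given as (cx, cy, w, h); theta = 4 is an assumed value."""
    (px, py, pw, ph), (gx, gy, gw, gh) = pred, gt

    # IoU, Eq. (10): overlap area divided by union area.
    ix = max(0.0, min(px + pw / 2, gx + gw / 2) - max(px - pw / 2, gx - gw / 2))
    iy = max(0.0, min(py + ph / 2, gy + gh / 2) - max(py - ph / 2, gy - gh / 2))
    inter = ix * iy
    iou = inter / (pw * ph + gw * gh - inter)

    # Angle loss, Eqs. (1)-(4); coincident centers are treated as x = 0.
    sigma = math.hypot(gx - px, gy - py)
    c_h = abs(gy - py)
    x = min(1.0, c_h / sigma) if sigma > 0 else 0.0
    angle = 1.0 - 2.0 * math.sin(math.asin(x) - math.pi / 4) ** 2

    # Distance loss, Eqs. (5)-(6), normalized by the minimum enclosing rectangle.
    c_wb = max(px + pw / 2, gx + gw / 2) - min(px - pw / 2, gx - gw / 2)
    c_hb = max(py + ph / 2, gy + gh / 2) - min(py - ph / 2, gy - gh / 2)
    gamma = 2.0 - angle
    rho_x = ((gx - px) / c_wb) ** 2
    rho_y = ((gy - py) / c_hb) ** 2
    dist = 2.0 - math.exp(-gamma * rho_x) - math.exp(-gamma * rho_y)

    # Shape loss, Eqs. (7)-(9).
    omega_w = abs(pw - gw) / max(pw, gw)
    omega_h = abs(ph - gh) / max(ph, gh)
    shape = (1 - math.exp(-omega_w)) ** theta + (1 - math.exp(-omega_h)) ** theta

    # Combined box loss, Eq. (11).
    return 1.0 - iou + (dist + shape) / 2.0


same = siou_loss((10, 10, 4, 4), (10, 10, 4, 4))
near = siou_loss((10, 10, 4, 4), (11, 10, 4, 4))
far = siou_loss((10, 10, 4, 4), (12, 10, 4, 4))
```

Identical boxes give a loss of zero, and the loss grows as the predicted box drifts away from the ground truth, which is the behaviour the four terms are meant to produce.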

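3. Results and analysis

3.1 Results of the lightweight feature extraction network

This subsection verifies and analyzes the lightweight improvement proposed in Section 2.2. The current model has a heavy computational load and many parameters, which consume a large amount of memory and reduce running efficiency; both limit deployment on edge devices. To let the blueberry ripeness detection model meet the needs of edge devices and achieve real-time detection, lightweight variants of YOLOv8 were tested. With YOLOv8 as the base model, MobileNetV3, VanillaNet [26], and ShuffleNet [27] were each used as the backbone, and the number of parameters and detection speed of the four models were compared. The comparison is given in Table 2 and the detection results in Figure 7.

Table 2. Experimental results of different lightweight improvements

| Model | Parameters/M | Detection speed/ms |
|---|---|---|
| YOLOv8 | 7.86 | 11.06 |
| YOLOv8-MobileNetV3 | 7.01 | 6.89 |
| YOLOv8-ShuffleNet | 7.06 | 6.95 |
| YOLOv8-VanillaNet | 6.82 | 6.84 |

Figure 7a shows that the original YOLOv8 misses and misclassifies fruit: one blueberry at the bottom of the image and two in the middle are not detected, and one undermature blueberry in the middle is recognized as immature. Figure 7b shows that with ShuffleNet as the backbone the model also misses and misclassifies fruit in multi-target images: the bottom blueberry and two in the middle are not detected, two undermature blueberries (middle and left) are recognized as immature, and some boxes do not fully cover the fruit; YOLOv8-ShuffleNet has 7.06 M parameters. Figure 7c shows that with VanillaNet as the backbone one blueberry at the bottom and one in the middle are not detected, and one undermature blueberry on the left is recognized as immature; YOLOv8-VanillaNet has 6.82 M parameters. Figure 7d shows that with MobileNetV3 as the backbone the model handles mutual occlusion and backlighting well in multi-target images, with no missed detections; YOLOv8-MobileNetV3 has 7.01 M parameters. Although VanillaNet is marginally smaller and faster, MobileNetV3 was selected as the backbone of YOLOv8 because it gave the best detection results.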

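3.2 Comparison of attention mechanisms

With YOLOv8 as the base model, different attention mechanisms were introduced into its DarkNet_53 backbone to help the model extract key features more effectively and so improve the accuracy of the detection boxes. To show the effect of each attention mechanism on blueberry ripeness features more directly, the class activation heat maps of Grad-CAM [28] were used to visualize the three output layers of the model, which also improves interpretability. The comparison is shown in Figure 8. The larger the bright red area, the more attention the prediction pays to that region.

Figure 8 shows that when the MHSA [29], ShuffleAttention [30], and SE [31] attention mechanisms are introduced into the DarkNet_53 backbone, the network captures little information from dense blueberry clusters; ShuffleAttention in particular attends to very little. YOLOv8-CBAM pays the most attention to blueberry ripeness features, locates blueberry targets in space more precisely, and finds the targets of interest faster. CBAM was therefore adopted for blueberry ripeness detection.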

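3.3 Results of loss function selection

Because the CIoU loss used by the original YOLOv8 converges slowly and inefficiently, CIoU was replaced in turn with EIoU [32], GIoU, and SIoU, and the results were compared to select the best option. The comparison is shown in Figure 9.

Figure 9a shows that the original YOLOv8 misses and misclassifies fruit. Figure 9b shows that with EIoU as the bounding-box loss the model also misses and misclassifies fruit and, in multi-target images, detects some fruit repeatedly: the two blueberries at the bottom of the image are each enclosed by several boxes.

Figure 9c shows that with GIoU the model still misses and misclassifies fruit: five blueberries are missed and two mature blueberries are recognized as undermature. Figure 9d shows that with SIoU the model performs well in multi-target images with fruit occlusion and occlusion by branches and leaves.

In summary, replacing CIoU with SIoU gives the best results. A possible reason is that SIoU optimizes the match between the predicted and ground-truth boxes and brings them closer, which speeds up convergence and improves efficiency.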

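3.4 Ablation experiments

To verify the performance gain of the proposed MSC-YOLOv8, it was compared with YOLOv8 step by step so that the contribution of each improvement could be checked. The results are given in Table 3.

Table 3. Model ablation experimental results

| MobileNetV3 | SIoU | CBAM | Recall R/% | AP immature/% | AP undermature/% | AP mature/% | AP overmature/% | mAP/% | Detection speed/ms | Parameters/M |
|---|---|---|---|---|---|---|---|---|---|---|
| × | × | × | 93.6 | 93.8 | 93.1 | 94.5 | 94.2 | 93.9 | 11.06 | 7.86 |
| √ | × | √ | 94.2 | 96.1 | 94.0 | 94.9 | 97.7 | 95.6 | 7.13 | 7.35 |
| × | √ | × | 95.7 | 94.3 | 92.1 | 96.2 | 95.4 | 94.9 | 10.9 | 7.89 |
| √ | × | × | 90.7 | 92.7 | 93.5 | 92.3 | 93.1 | 92.9 | 6.89 | 7.01 |
| × | × | √ | 94.3 | 94.1 | 93.3 | 94.9 | 94.5 | 94.2 | 11.35 | 7.92 |
| √ | √ | × | 94.1 | 96.6 | 95.4 | 93.1 | 96.5 | 95.4 | 6.97 | 7.24 |
| × | √ | √ | 93.3 | 94.7 | 94.6 | 93.4 | 94.8 | 94.4 | 11.23 | 7.95 |
| √ | √ | √ | 97.3 | 97.1 | 98.3 | 97.9 | 98.1 | 97.8 | 7.09 | 7.11 |

Note: "×" means that the improvement strategy is not used; "√" means that it is used. AP is the average precision of one ripeness class and mAP is the mean of the four AP values.

Table 3 shows that the original YOLOv8 reaches an mAP of 93.9% for blueberry ripeness classification. Replacing the backbone with the lightweight MobileNetV3 gives a detection time of 6.89 ms, 4.17 ms less than the original YOLOv8, which shows that the lightweight change is effective. Replacing the backbone with MobileNetV3 and embedding CBAM at layers 4, 6, and 9 of the backbone raises the mAP by 1.7 percentage points over the original YOLOv8 and by 2.7 percentage points over the MobileNetV3 backbone alone, which indicates that CBAM suppresses background factors and attends more to blueberry features. Using MobileNetV3, CBAM, and the SIoU loss together gives an mAP of 97.8%, the highest in the ablation study and 2.2 percentage points higher than MobileNetV3 with CBAM.

These results indicate that the improvements to YOLOv8 are effective. In the ablation study, replacing the bounding-box regression loss with SIoU and adding CBAM gives a lower recall (93.3% versus 95.7%) and a lower mAP (94.4% versus 94.9%) than SIoU alone. A possible reason is that using SIoU and CBAM together requires finer parameter tuning; with unsuitable settings the model may not exploit both components fully, and they may even conflict.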
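The mAP values in Table 3 are consistent with taking the mean of the four per-class average precisions, and the percentage-point gains quoted above are simple differences between rows. The short check below reproduces them; the dictionary layout and the name `mean_ap` are chosen here for illustration.

```python
def mean_ap(per_class_ap):
    """Mean of the per-class average precision values, in percent."""
    return sum(per_class_ap.values()) / len(per_class_ap)


# Per-class AP of the unmodified YOLOv8 (first row of Table 3).
baseline = {"immature": 93.8, "undermature": 93.1, "mature": 94.5, "overmature": 94.2}
baseline_map = round(mean_ap(baseline), 1)

# mAP values copied from Table 3 for the configurations discussed in the text.
map_mobilenet = 92.9        # MobileNetV3 backbone only
map_mobilenet_cbam = 95.6   # MobileNetV3 + CBAM
map_full = 97.8             # MobileNetV3 + CBAM + SIoU

gain_cbam_vs_baseline = round(map_mobilenet_cbam - baseline_map, 1)
gain_cbam_vs_mobilenet = round(map_mobilenet_cbam - map_mobilenet, 1)
gain_siou = round(map_full - map_mobilenet_cbam, 1)
```

Because the table reports one decimal place, recomputed means can differ from the printed mAP in the last digit for some rows.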

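3.5 Comparison of different models

The single-stage object detection models YOLOv8, SSD [33-34], and CenterNet [35] were selected for comparison. Their performance metrics are given in Table 4.

Table 4. Comparison of performance indicators of different models

| Model | Parameters/M | Detection speed/ms | mAP/% | R/% |
|---|---|---|---|---|
| MSC-YOLOv8 | 7.11 | 7.09 | 97.8 | 97.3 |
| YOLOv8 | 7.86 | 11.06 | 93.9 | 93.6 |
| SSD | 8.31 | 11.35 | 93.2 | 92.7 |
| CenterNet | 7.92 | 10.03 | 96.7 | 96.1 |

Table 4 shows that MSC-YOLOv8 has the best overall performance among the four models, with 7.11 M parameters, a detection time of 7.09 ms, and an mAP of 97.8%. Compared with YOLOv8, SSD, and CenterNet, its mAP is 3.9, 4.6, and 1.1 percentage points higher and its detection time is 3.97, 4.26, and 2.94 ms shorter, respectively.

After training, the MSC-YOLOv8 model is intended for deployment on edge devices. In testing, MSC-YOLOv8 reached a detection speed of 141 frames/s, well above the 30 frames/s usually required for real-time detection, so it meets real-time requirements and has the potential to be used in production. The detection results of the four models in different environments are compared in Figure 10.

Figure 10 shows that MSC-YOLOv8 is the most accurate and misses no fruit in any test sample. When detecting overlapping blueberries, YOLOv8 and SSD produce duplicate detections. In the four backlit images, MSC-YOLOv8 misses no fruit, with confidences between 0.92 and 0.96, whereas SSD misses fruit and YOLOv8 and CenterNet give lower confidences. In the four images without heavy occlusion, MSC-YOLOv8 performs best. When the fruit is occluded by branches and leaves, YOLOv8, SSD, and CenterNet miss fruit to varying degrees.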
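The frame rate quoted in this section follows directly from the per-image detection time in Table 4, assuming images are processed one at a time so that frames per second is 1000 divided by the latency in milliseconds. The helper name `fps_from_latency_ms` is chosen here for illustration.

```python
def fps_from_latency_ms(latency_ms: float) -> float:
    """Frames per second for sequential processing at the given per-image latency."""
    return 1000.0 / latency_ms


# Detection times in ms, copied from Table 4.
latencies = {"MSC-YOLOv8": 7.09, "YOLOv8": 11.06, "SSD": 11.35, "CenterNet": 10.03}
fps = {name: fps_from_latency_ms(ms) for name, ms in latencies.items()}

for name, value in fps.items():
    print(f"{name}: {value:.0f} frames/s")
```

Under this assumption, 7.09 ms per image corresponds to about 141 frames/s, which matches the figure reported for MSC-YOLOv8.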

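4. Conclusions

This study proposes MSC-YOLOv8, an improved lightweight network based on YOLOv8, for blueberry ripeness detection. The backbone of the original model is replaced with the lightweight MobileNetV3 structure, the CBAM attention mechanism is introduced into the backbone, and the bounding-box loss is replaced with SIoU. The main conclusions are as follows:

1) Comparison of the three improvements shows that using MobileNetV3 as the backbone markedly improves real-time performance, with 7.01 M parameters; CBAM strengthens the attention paid to blueberry features and suppresses background interference; and the SIoU bounding-box loss further reduces missed detections. Together these changes let the blueberry ripeness detection model meet the needs of edge devices and real-time detection.

2) Eight ablation experiments confirm the effectiveness of the improvements. MSC-YOLOv8 has clear advantages in detection speed and mean average precision (mAP): the detection time per image is 7.09 ms, 3.97 ms less than YOLOv8, and the mAP is 97.8%, 3.9 percentage points higher than YOLOv8. The results demonstrate the effectiveness of each module of MSC-YOLOv8.

3) MSC-YOLOv8 performs well on the blueberry dataset. Compared with the mainstream models YOLOv8, SSD, and CenterNet, its mAP is 3.9, 4.6, and 1.1 percentage points higher, its detection speed reaches 141 frames/s, and its visual detection results are also better. The study therefore offers an efficient and accurate solution for intelligent detection of blueberry ripeness in complex field environments and a foundation for intelligent robotic picking of agricultural products.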

Table 1 Experiment-related hardware configuration and model parameters

| Name | Configuration | Name | Value |
|---|---|---|---|
| GPU | NVIDIA Tesla V100 | Batch size | 32 |
| CPU | Gold 6226R 2.90 GHz 16c | Initial learning rate | 0.01 |
| CUDA | Version 11.6 | Epoch | 200 |

Table 2 Different YOLO series model target detection results

| Model | Model size/MB | Parameters/M | F1-Score (all classes)/% | F1-Score Basket/% | F1-Score Anchor/% |
|---|---|---|---|---|---|
| YOLOv3 | 120.5 | 61.5 | 84.9 | 85.1 | 84.7 |
| YOLOv5s | 14.4 | 7.0 | 91.7 | 92.1 | 91.3 |
| YOLOv7 | 71.3 | 36.5 | 95.1 | 95.9 | 94.2 |

Table 3 Comparison of target detection models results

| Model | Class | Precision/% | Recall/% | F1-Score/% |
|---|---|---|---|---|
| YOLOv7 | All | 95.3 | 94.9 | 95.1 |
| YOLOv7 | Basket | 94.5 | 97.4 | 95.9 |
| YOLOv7 | Anchor | 96.1 | 92.4 | 94.2 |
| YOLOv7-MO | All | 97.3 | 96.0 | 96.6 |
| YOLOv7-MO | Basket | 96.9 | 97.7 | 97.3 |
| YOLOv7-MO | Anchor | 97.6 | 94.3 | 95.9 |

Table 4 Comparison of model parameters

| Model | Model size/MB | Parameters/M | FLOPs/G |
|---|---|---|---|
| YOLOv7 | 71.3 | 36.5 | 103.3 |
| YOLOv7-MO | 64.0 | 32.6 | 39.7 |

Table 5 Comparison of detection results of different models

| Model | Model size/MB | Parameters/M | Precision/% | Recall/% | F1-Score/% | mAP/% | AP Basket/% | AP Anchor/% |
|---|---|---|---|---|---|---|---|---|
| Faster RCNN (VGG) | 120.5 | 138.2 | 97.9 | 98.1 | 98.0 | 77.4 | 78.1 | 76.7 |
| YOLOv3 | 120.5 | 61.5 | 83.8 | 86.1 | 84.9 | 86.1 | 86.4 | 85.8 |
| YOLOv5s | 14.4 | 7.0 | 91.1 | 92.3 | 91.7 | 97.7 | 97.8 | 97.6 |
| YOLOv7 | 71.3 | 36.5 | 95.3 | 94.9 | 95.1 | 98.1 | 98.1 | 98.2 |
| YOLOv7-MO | 64.0 | 32.6 | 97.3 | 96.0 | 96.6 | 98.8 | 98.9 | 98.8 |

Table 6 Statistics on the results of the basket counting experiment

| Video serial number | Manual count N_b | Model count S_b | Difference \|S_b − N_b\| |
|---|---|---|---|
| 01 | 16 | 14 | 2 |
| 02 | 7 | 6 | 1 |
| 03 | 4 | 3 | 1 |
| 04 | 6 | 5 | 1 |
| 05 | 4 | 4 | 0 |
| 06 | 4 | 4 | 0 |
| 07 | 5 | 6 | 1 |
| 08 | 4 | 4 | 0 |
| 09 | 2 | 4 | 2 |
| 10 | 5 | 5 | 0 |
| 11 | 10 | 11 | 1 |
| 12 | 11 | 8 | 3 |
| 13 | 7 | 4 | 3 |
| 14 | 5 | 5 | 0 |
| 15 | 10 | 5 | 5 |
| Sum | 100 | 88 | 20 |
| Accuracy ACP | 80.0% | | |

Table 7 Statistics on the results of the net counting experiment

| Video serial number | Manual count of nets N_n | Model count of anchors S_a | Model count of nets S_n | Difference \|S_n − N_n\| |
|---|---|---|---|---|
| 01 | 32 | 34 | 33 | 1 |
| 02 | 32 | 36 | 35 | 3 |
| 03 | 34 | 36 | 35 | 1 |
| 04 | 34 | 36 | 35 | 1 |
| 05 | 34 | 37 | 36 | 2 |
| 06 | 34 | 37 | 36 | 2 |
| 07 | 34 | 35 | 34 | 0 |
| 08 | 34 | 35 | 34 | 0 |
| 09 | 34 | 38 | 37 | 3 |
| 10 | 34 | 36 | 35 | 1 |
| Sum | 336 | 360 | 350 | 14 |
| Accuracy ACP | 95.8% | | | |
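The accuracy rows of the two counting tables are consistent with ACP = 1 − Σ|S − N| / ΣN, where N is the manual count and S the model count per video. This formula is inferred from the table totals and is not stated explicitly in the text above. Likewise, the relation S_n = S_a − 1 used below is simply what every row of the net counting table shows. The sketch recomputes both accuracies from the per-video counts.

```python
def counting_accuracy(manual, model):
    """ACP = 1 - sum(|S - N|) / sum(N); formula inferred from the table totals."""
    errors = sum(abs(s - n) for s, n in zip(model, manual))
    return 1.0 - errors / sum(manual)


# Per-video counts copied from the basket counting table.
baskets_manual = [16, 7, 4, 6, 4, 4, 5, 4, 2, 5, 10, 11, 7, 5, 10]
baskets_model = [14, 6, 3, 5, 4, 4, 6, 4, 4, 5, 11, 8, 4, 5, 5]

# Per-video counts copied from the net counting table.
nets_manual = [32, 32, 34, 34, 34, 34, 34, 34, 34, 34]
anchors_model = [34, 36, 36, 36, 37, 37, 35, 35, 38, 36]
nets_model = [a - 1 for a in anchors_model]  # observed row-wise relation S_n = S_a - 1

basket_acp = counting_accuracy(baskets_manual, baskets_model)
net_acp = counting_accuracy(nets_manual, nets_model)
print(f"baskets: {basket_acp:.1%}, nets: {net_acp:.1%}")
```

Summing absolute per-video differences, rather than comparing the totals 88 and 100 directly, prevents over-counts in one video from cancelling under-counts in another.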

[1] 农业农村部. 农业农村部发布《通告》规定2021年伏休期间特殊经济品种专项捕捞许可[J]. 水产科技情报,2021,48(03):177. [2] GRAY J S. Marine biodiversity:Patterns, threats and conservation needs[J]. Biodiversity & Conservation, 1997, 6(1):153-175. GRAY J S. Marine biodiversity: Patterns, threats and conservation needs[J]. Biodiversity & Conservation, 1997, 6(1): 153-175.

[3] SCHEFFER M, CARPENTER S, de YOUNG B. Cascading effects of overfishing marine systems[J]. Trends in Ecology & Evolution, 2005, 20(11):579-581. SCHEFFER M, CARPENTER S, de YOUNG B. Cascading effects of overfishing marine systems[J]. Trends in Ecology & Evolution, 2005, 20(11): 579-581.

[4] GERRITSEN H, LORDAN C. Integrating vessel monitoring systems (VMS) data with daily catch data from logbooks to explore the spatial distribution of catch and effort at high resolution[J]. ICES Journal of Marine Science, 2011, 68(1):245-252 GERRITSEN H, LORDAN C. Integrating vessel monitoring systems (VMS) data with daily catch data from logbooks to explore the spatial distribution of catch and effort at high resolution [J]. ICES Journal of Marine Science, 2011, 68(1): 245-252

[5] 张佳泽,张胜茂,王书献,等. 基于3-2D融和模型的毛虾捕捞渔船行为识别[J]. 南方水产科学,2022,18(4):126-135. ZHANG Jiaze, ZHANG Shengmao, WANG Shuxian, et al. Recognition of Acetes chinensis fishing vessel based on 3-2D integration model behavior[J]. South China Fisheries Science, 2022, 18(4): 126-135. (in Chinese with English abstract ZHANG Jiaze, ZHANG Shengmao, WANG Shuxian, et al. Recognition of Acetes chinensis fishing vessel based on 3-2D integration model behavior [J]. South China Fisheries Science, 2022, 18(04): 126-135.

[6] FERREIRA J C, MARTINS A L. Edge computing approach for vessel monitoring system[J]. Energies, 2019, 12(16):3087. doi: 10.3390/en12163087 FERREIRA J C, MARTINS A L. Edge computing approach for vessel monitoring system[J]. Energies, 2019, 12(16): 3087. doi: 10.3390/en12163087

[7] WANG S, ZHANG S, LIU Y, et al. Recognition on the working status of Acetes chinensis quota fishing vessels based on a 3D convolutional neural network[J]. Fisheries Research, 2022, 248:106226. doi: 10.1016/j.fishres.2022.106226 WANG S, ZHANG S, LIU Y, et al. Recognition on the working status of Acetes chinensis quota fishing vessels based on a 3D convolutional neural network[J]. Fisheries Research, 2022, 248: 106226. doi: 10.1016/j.fishres.2022.106226

[8] 李国东,仲霞铭,熊瑛,等. 基于北斗船位数据的渔业信息解译与应用研究-以中国毛虾限额捕捞管理为例[J]. 海洋与湖沼,2021,52(3):746-753. LI Guodong, ZHONG Xiaming, XIONG Ying, et al. Interpretation and application of fishery information based on beidou position data: A case study of tacs pilot project of acetes chinensis[J]. Oceanologia et Limnologia Sinica, 2021, 52(3): 746-753. (in Chinese with English abstract doi: 10.11693/hyhz20201000288 LI Guodong, ZHONG Xiaming, XIONG Ying, et al. Interpretation and application of fishery information based on beidou position data: a case study of tacs pilot project of acetes chinensis [J]. Oceanologia et Limnologia Sinica, 2021, 52(3): 746-753. doi: 10.11693/hyhz20201000288

[9] KALAISELVI V K G, RANJANI J, SM V K. Illegal fishing detection using neural network[C]//2022 International Conference on Communication, Computing and Internet of Things (IC3IoT). Chennai, India:IEEE, 2022:1-4. KALAISELVI V K G, RANJANI J, SM V K. Illegal fishing detection using neural network[C]//2022 International Conference on Communication, Computing and Internet of Things (IC3IoT). Chennai, India: IEEE, 2022: 1-4.

[10] 刘洋,张胜茂,王斐,等. 海洋捕捞鱼类BigH神经网络分类模型设计与实现[J]. 工业控制计算机,2021,34(6):18-20. LIU Yang, ZHANG Shengmao, WANG Fei, et al. Automatic transfer learning and manual neural network for marine fish classification Industrial[J]. Control Computer, 2021, 34(6): 18-20. (in Chinese with English abstract doi: 10.3969/j.issn.1001-182X.2021.06.007 LIU Yang, ZHANG Shengmao, WANG Fei, et al. Automatic transfer learning and manual neural network for marine fish classification Industrial [J]. Control Computer, 2021, 34(6): 18-20. doi: 10.3969/j.issn.1001-182X.2021.06.007

[11] 张胜茂,孙永文,樊伟,等. 面向海洋渔业捕捞生产的深度学习方法应用研究进展[J]. 大连海洋大学学报,2022,37(4):683-695. ZHANG Shengmao, SUN Yongwen, FAN Wei, et al. Research progress in the application of deep learning methods for marine fishery production: A review[J]. Journal of Dalian Ocean University, 2022, 37(4): 683-695. (in Chinese with English abstract doi: 10.16535/j.cnki.dlhyxb.2022-099 ZHANG Shengmao, SUN Yongwen, FAN Wei, et al. Research progress in the application of deep learning methods for marine fishery production: a review [J]. Journal of Dalian Ocean University, 2022, 37(04): 683-695. doi: 10.16535/j.cnki.dlhyxb.2022-099

[12] 王书献,张胜茂,唐峰华,等. CNN-LSTM在日本鲭捕捞渔船行为提取中的应用[J]. 农业工程学报,2022,38(7):200-209. WANG Shuxian, ZAHNG Shengmao, TANG Fenghua, et al. Extracting the behavior of Scomber japonicus fishing vessel using CNN-LSTM[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2022, 38(7): 200-209. (in Chinese with English abstract doi: 10.11975/j.issn.1002-6819.2022.07.022 WANG Shuxian, ZAHNG Shengmao, TANG Fenghua, et al. Extracting the behavior of Scomber japonicus fishing vessel using CNN-LSTM [J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2022, 38(7): 200-209. doi: 10.11975/j.issn.1002-6819.2022.07.022

[13] 梁建胜,温贺平. 基于深度学习的视频关键帧提取与视频检索[J]. 控制工程,2019,26(5):965-070. LIANG Jiansheng, WEN Heping. Key frame abstraction and retrieval of videos based on deep learning[J]. Control Engineering of China, 2019, 26(5): 965-070. (in Chinese with English abstract LIANG Jiansheng, WEN Heping. Key frame abstraction and retrieval of videos based on deep learning [J]. Control Engineering of China, 2019, 26(5): 965-070.

[14] 陈道富. 基于深度学习的视频目标检索技术研究 [D]. 成都: 电子科技大学, 2019. CHENG Daofu. Research on Video Object Retrieval Technology Based on Deep Learning [D]. Chengdu: University of Electronic Science and Technology of China, 2019.

[15] 蒋梦迪,程江华,陈明辉,等. 视频和图像文本提取方法综述[J]. 计算机科学,2017,44(S2):8-18. JIANG Mengdi, CHENG Jianghua, CHEN Minghui, et al. Text extraction in video and images: A Review[J]. Computer Science, 2017, 44(S2): 8-18. (in Chinese with English abstract JIANG Mengdi, CHENG Jianghua, CHEN Minghui, et al. Text extraction in video and images: A Review [J]. Computer Science, 2017, 44(S2): 8-18.

[16] 杨非凡,廖兰芳. 一种基于深度学习的目标检测提取视频图像关键帧的方法[J]. 电脑知识与技术,2018,14(36):201-3. YANG Feifan, LIAO Lanfang. A method of extracting key frames from video images based on deep learning for target detection[J]. Computer Knowledge and Technology, 2018, 14(36): 201-3. (in Chinese with English abstract YANG Feifan, LIAO Lanfang. A method of extracting key frames from video images based on deep learning for target detection [J]. Computer Knowledge and Technology, 2018, 14(36): 201-3.

[17] 李秀智,张冉,贾松敏. 面向助老行为识别的三维卷积神经网络设计[J]. 北京工业大学学报,2021,47(6):589-597. LI Xiuzhi, ZHANG Ran, JIA Songmin. Design of 3 d convolutional neural network for action Recognition for helping the aged[J]. Journal of Beijing University of Technology, 2021, 47(6): 589-597. (in Chinese with English abstract LI Xiuzhi, ZHANG Ran, JIA Songmin. Design of 3 d convolutional neural network for action Recognition for helping the aged [J]. Journal of Beijing University of Technology, 2021, 47(6): 589-597.

[18] 裴凯洋,张胜茂,樊伟,等. 基于计算机视觉的鱼类视频跟踪技术应用研究进展[J]. 海洋渔业,2022,44(5):640-647. PEI Kaiyang, ZHANG Shengmao, FAN Wei, et al. Research progress of fish video tracking application based on computer vision[J]. Marine Fisheries, 2022, 44(5): 640-647. (in Chinese with English abstract PEI Kaiyang, ZHANG Shengmao, FAN Wei, et al. Research progress of fish video tracking application based on computer vision [J]. Marine Fisheries, 2022, 44(5): 640-647.

[19] 宋敏毓,陈力荣,梁建安,等. 轻量化改进网络的实时光纤端面缺陷检测模型[J]. 激光与光电子学进展,2022,59(24):201-211. SONG Yumin, CHEN Lirong, LIANG Jian'an, et al. Real-time optical fiber end surface defects detection model based on lightweight improved network[J]. Laser & Optoelectronics Progress, 2022, 59(24): 201-211. (in Chinese with English abstract SONG Yumin, CHEN Lirong, LIANG Jianan, et al. Real-time optical fiber end surface defects detection model based on lightweight improved network [J]. Laser & Optoelectronics Progress, 2022, 59(24): 201-211.

[20] GIRSHICK R. Fast r-cnn[C]. Proceedings of the proceedings of the IEEE international conference on computer vision, F, 2015 GIRSHICK R. Fast r-cnn [C]//Proceedings of the IEEE International Conference on Computer Vision, Santiago, 2015

[21] REN S, HE K, GIRSHICK R, et al. Faster r-cnn:Towards real-time object detection with region proposal networks[J]. Advances in Neural Information Processing Systems, 2015, 28:91-99. REN S, HE K, GIRSHICK R, et al. Faster r-cnn: Towards real-time object detection with region proposal networks[J]. Advances in Neural Information Processing Systems, 2015, 28: 91-99.

[22] GIRSHICJ R, DONAHUE J, DARRELL T, et al. Rich feature hierarchies for accurate object detection and semantic segmentation[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Columbus, OH:IEEE, 2014:580-587. GIRSHICJ R, DONAHUE J, DARRELL T, et al. Rich feature hierarchies for accurate object detection and semantic segmentation[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Columbus, OH: IEEE , 2014: 580-587.

[23] 王书献,张胜茂,朱文斌,等. 基于深度学习YOLOV5网络模型的金枪鱼延绳钓电子监控系统目标检测应用[J]. 大连海洋大学学报,2021,36(5):842-850. WANG Shuxian, ZAHNG Shengmao, ZHU Wenbin, et al. Application of an electronic monitoring system for video target detection in tuna longline fishing based on YOLOV5 deep learning model[J]. Journal of Dalian Ocean University, 2021, 36(5): 842-850. (in Chinese with English abstract doi: 10.16535/j.cnki.dlhyxb.2020-333 WANG Shuxian, ZAHNG Shengmao, ZHU Wenbin, et al. Application of an electronic monitoring system for video target detection in tuna longline fishing based on YOLOV5 deep learning model [J]. Journal of Dalian Ocean University, 2021, 36(5): 842-850. doi: 10.16535/j.cnki.dlhyxb.2020-333

[24] WATSON J T, HAYNIE A C, SULLIVAN P J, et al. Vessel monitoring systems (VMS) reveal an increase in fishing efficiency following regulatory changes in a demersal longline fishery[J]. Fisheries Research, 2018, 207: 85-94. doi: 10.1016/j.fishres.2018.06.006

[25] SUN Yongwen, ZHANG Shengmao, JIANG Keji, et al. Research progress of application status of electronic monitoring technology in ocean fishing vessels and prospects[J]. Marine Fisheries, 2022, 44(1): 103-111. (in Chinese with English abstract) doi: 10.3969/j.issn.1004-2490.2022.01.010

[26] ZHANG Shengmao, FAN Wei, ZHANG Heng, et al. Text extraction from electronic monitoring video of ocean fishing vessels[J]. Fishery Information & Strategy, 2020, 35(2): 141-146. (in Chinese with English abstract) doi: 10.13233/j.cnki.fishis.2020.02.008

[27] BEWLEY A, GE Z, OTT L, et al. Simple online and realtime tracking[C]//2016 IEEE International Conference on Image Processing (ICIP). Phoenix, AZ: IEEE, 2016: 3464-3468.

[28] WELCH G F. Kalman filter[J]. Computer Vision: A Reference Guide, 2020: 1-3.

[29] LAI Jianhui, WANG Yang, LUO Tiantian, et al. A YOLO_V3-based road-side video traffic volume counting method and verification[J]. Journal of Highway and Transportation Research and Development, 2021, 38(1): 135-142. (in Chinese with English abstract) doi: 10.3969/j.issn.1002-0268.2021.01.017

[30] YAO P, WU K, LOU Y. Path planning for multiple unmanned surface vehicles using Glasius bio-inspired neural network with Hungarian algorithm[J]. IEEE Systems Journal, 2022: 1-12.

[31] LIU Yi, TONG Mingan. An application of Hungarian algorithm to the multi-target assignment[J]. Fire Control & Command Control, 2002(4): 34-37. (in Chinese with English abstract) doi: 10.3969/j.issn.1002-0640.2002.04.010

[32] TANG Xianfeng, ZHANG Shengmao, FAN Wei, et al. Fishing type identification of gill net and trawl net based on deep learning[J]. Marine Fisheries, 2020, 42(2): 233-244. (in Chinese with English abstract) doi: 10.13233/j.cnki.mar.fish.2020.02.011

[33] GONG Weixin, YANG Zhen, LI Kai, et al. Detecting kiwi flowers in natural environments using an improved YOLOv5s[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2023, 39(6): 177-185. (in Chinese with English abstract)

[34] DUAN Jieli, WANG Zhaorui, ZOU Xiangjun, et al. Recognition of bananas to locate bottom fruit axis using improved YOLOV5[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2022, 38(19): 122-130. (in Chinese with English abstract) doi: 10.11975/j.issn.1002-6819.2022.19.014

[35] SHANG Yuying, ZHANG Qianru, SONG Huaibo. Application of deep learning using YOLOv5s to apple flower detection in natural scenes[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2022, 38(9): 222-229. (in Chinese with English abstract)

[36] LIN Sen, LIU Meiyi, TAO Zhiyong. Detection of underwater treasures using attention mechanism and improved YOLOv5[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2021, 37(18): 307-314. (in Chinese with English abstract)

[37] WANG Jinpeng, ZHOU Jialiang, ZHANG Yueyue, et al. A multi-pose dragon fruit detection system for picking robot based on the optimal YOLOv7 model[J/OL]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE): 1-8[2023-05-17]. http://kns.cnki.net/kcms/detail/11.2047.S.20230418.0900.002.html

[38] WANG Xiaorong, XU Yan, ZHOU Jianping, et al. Safflower picking recognition in complex environments based on an improved YOLOv7[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2023, 39(6): 169-176. (in Chinese with English abstract)

[39] LEE C Y, XIE S, GALLAGHER P, et al. Deeply-supervised nets[C]//Artificial Intelligence and Statistics. San Diego, CA: PMLR, 2015: 562-570.

[40] HE K, ZHANG X, REN S, et al. Deep residual learning for image recognition[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Las Vegas, NV: IEEE, 2016: 770-778.

[41] DING X, ZHANG X, MA N, et al. RepVGG: Making VGG-style convnets great again[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Electr Network: IEEE COMP SOC, 2021: 13733-13742.

[42] VASU P K A, GABRIEL J, ZHU J, et al. An improved one millisecond mobile backbone[EB/OL]. arXiv preprint arXiv:2206.04040, [2022-06-08]. http://doi.org/10.48550/arXiv.2206.04040

[43] WU Bilang, LIU Chunna, JIANG Furen. Species identification and counting of fishes in fishway based on DeepSORT algorithm[J]. Water Resources and Hydropower Engineering, 2022, 53(9): 152-162. (in Chinese with English abstract)

[44] ZHANG J, ZHANG S, FAN W, et al. Research on target detection of Engraulis japonicus purse seine based on improved model of YOLOv5[J]. Frontiers in Marine Science, 2022: 1400.
