Detecting Xinmei fruit under complex environments using improved YOLOv5s


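Abstract:

To address the difficulty of accurately detecting Xinmei fruit that is occluded by trunks and leaves or overlaps with other fruit, this study built a Xinmei object detection model, SSF-YOLOv5s. A Xinmei dataset was constructed in a real orchard environment, and YOLOv5s was used as the base network. First, the CA (coordinate attention) mechanism was introduced into the C3 modules of the Backbone to strengthen the extraction of key Xinmei features and reduce the number of model parameters. Second, a weighted bidirectional feature pyramid network was introduced into the Neck to strengthen fusion between feature levels and thereby raise the mean average precision. Finally, the SIoU loss function replaced the CIoU loss of the original model to improve detection accuracy. Experimental results show that SSF-YOLOv5s detects Xinmei with a precision of 93.4%, a recall of 92.9%, and a mean average precision (mAP) of 97.7%; the model weight file is only 13.6 MB, and the average detection time per image is 12.1 ms. Compared with Faster R-CNN, YOLOv3, YOLOv4, YOLOv5s, YOLOv7, and YOLOv8s, the mAP is higher by 3.6, 6.8, 13.1, 0.6, 0.4, and 0.5 percentage points, respectively. The model meets the needs of real-time Xinmei detection in complex orchard environments and provides technical support for the visual perception stage of future Xinmei picking robots.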


Keywords:

- image processing; models; Xinmei detection

- YOLOv5s

- attention mechanism

- bidirectional feature pyramid

- deep learning

Abstract: Xinmei, a European plum variety, is known as the "paradise medicine fruit" for its rich medicinal, health-care, and beauty benefits. Today, Xinmei is widely planted in Kashgar and Yili in the Xinjiang Uygur Autonomous Region, China, and picking robots have been used to harvest it in recent years. However, rapid and accurate visual detection remains challenging in complex environments with overlap and occlusion. Because Xinmei fruits are small and the branches and leaves are dense, many fruits are blocked by leaves and trunks, so the key feature information of this high-value fruit cannot be fully captured. In addition, many fruits overlap, and the overlapping areas between fruits are large. In this study, a detection model for Xinmei in complex environments, SSF-YOLOv5s, was proposed. A Xinmei dataset was constructed in a real orchard environment, and the YOLOv5s model was adopted as the base network. Firstly, the coordinate attention (CA) mechanism was introduced into the C3 module of the Backbone network to extract key feature information when Xinmei is blocked by leaves, while reducing the number of parameters for deployment on mobile devices. Secondly, a weighted bidirectional feature pyramid network was introduced into the Neck to enhance fusion among different feature layers, improving recognition of mutually occluding fruits. Finally, the SIoU loss function replaced the CIoU loss function of the original model to accelerate convergence and improve accuracy. The test results showed that SSF-YOLOv5s achieved a precision of 93.4%, a recall of 92.9%, and a mean average precision (mAP) of 97.7%, with a model weight of only 13.6 MB and an average detection time of 12.1 ms per image.
After the CA attention mechanism was added to the C3 module, the mAP of the model increased by 0.2 percentage points, while the number of parameters decreased from 7.02 M to 6.41 M. With the weighted bidirectional feature pyramid network further added, the mAP reached 97.6%, 0.5 percentage points higher than that of the original YOLOv5s. After SIoU was finally adopted as the loss function, the precision of the model was 2.0 percentage points higher than that of the original model. Compared with the Faster R-CNN, YOLOv3, YOLOv4, YOLOv5s, YOLOv7, and YOLOv8s models, the mAP improved by 3.6, 6.8, 13.1, 0.6, 0.4, and 0.5 percentage points, respectively. SSF-YOLOv5s also had the lowest computation and the smallest weight file of all compared models while keeping a high detection speed. Therefore, SSF-YOLOv5s can meet the requirements of real-time Xinmei detection in complex orchard environments, and the findings can provide technical support for the visual perception of Xinmei picking robots.


0. Introduction

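Xinmei has a short ripening period, and harvesting must be completed within a short time; otherwise the fruit drops, causing serious economic losses. At present, Xinmei is still picked by hand, which requires a large amount of labor and is inefficient and costly. The development of automated, intelligent Xinmei picking robots has therefore become the trend in Xinmei harvesting, and fast, accurate visual detection of Xinmei in complex environments with overlap and occlusion is a key problem that must be solved to develop such robots [1].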

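In recent years, with the rapid development of artificial intelligence, deep-learning-based object detection has become a research hotspot. Object detection models fall into two main categories. The first is two-stage detectors based on region proposals, with mainstream models including R-CNN [2], Faster R-CNN [3], and Mask R-CNN [4]. The second is regression-based one-stage detectors, which skip the region-proposal step and predict directly from the extracted features; mainstream models include SSD [5] and YOLO [6-10]. ZHU et al. [11] used an optimized Faster R-CNN to identify blueberries of different maturity, reaching recognition accuracies of 97%, 95%, and 92% for ripe, semi-ripe, and unripe fruit, respectively. LONG et al. [12] improved the recognition accuracy of tomato fruits at different maturity stages by fusing a cross-stage partial network with the residual network in Mask R-CNN. Although two-stage detectors achieve high recognition accuracy, their large parameter counts and computation make detection slow, which hinders real-time detection. Compared with two-stage detectors, one-stage detectors are faster and have fewer parameters, making them better suited to complex orchard environments. LI et al. [13] recognized citrus with an improved SSD that used ResNet18 as its feature extraction network; the improved model raised citrus recognition accuracy and shortened the average detection time. TIAN et al. [14] used an improved YOLOv3 with DenseNet as the feature extraction network to recognize apples at different growth stages, improving detection accuracy in natural environments. WANG et al. [15] added the CBAM attention mechanism to YOLOv4-Tiny, improving the speed and accuracy of blueberry recognition under occlusion and uneven lighting. FAN et al. [16] used an improved YOLOv5s to accurately identify lotus seedpods at different maturity stages in lotus fields, with an average precision of 98.3%. LYU et al. [17] proposed a lightweight detection model based on YOLOv5, adding an attention module and modifying the loss function to improve the accuracy of green citrus recognition in natural environments.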

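Although the above studies proposed many fruit detection algorithms and made considerable progress, they gave little attention to scenes with dense, occluded targets. In orchards, Xinmei grows in a complex environment where fruits overlap and are heavily occluded by branches and leaves, so models easily miss fruit and recognize it inaccurately. To address these problems, this paper takes YOLOv5s as the base model and proposes an improved YOLOv5s-based Xinmei detection method. By improving the backbone network, the neck network, and the loss function, the method raises overall detection accuracy and speed, providing a theoretical basis and technical support for the development of Xinmei picking robots.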

1. Materials and methods

1.1 Data source

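The Xinmei data used in this study were collected at a Xinmei plantation in Qapqal Xibe Autonomous County, Ili Kazakh Autonomous Prefecture, from August to September 2023, between 10:00 and 18:00, using an iPhone 13. Single-target and multi-target Xinmei images were captured under different lighting conditions, poses, and degrees of occlusion. A total of 3820 images of 4032×3024 pixels were collected; after removing images blurred by shooting or weather conditions, 3200 clear Xinmei images remained. Example images are shown in Fig. 1.

1.2 Dataset augmentation and annotation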

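To increase the diversity of the image data and the robustness of the detection model, improve its ability to detect Xinmei in complex environments, and avoid overfitting caused by insufficient data, the images were augmented. Two kinds of augmentation were used, offline augmentation and online Mosaic augmentation; their effects are shown in Fig. 2. Offline augmentation mainly increases the number of samples and includes flipping, mirroring, adding noise, and brightness adjustment; it expanded the Xinmei dataset to 4700 images. The dataset was annotated manually with rectangular boxes using the LabelImg tool. Following the Xinjiang Uygur Autonomous Region local standard DB65/T4475-2021, the annotated Xinmei were divided into three classes: underripe Xinmei (underripe xinmei), ripe Xinmei (ripe xinmei), and diseased Xinmei (disease xinmei), with 1558 underripe, 1573 ripe, and 1569 diseased Xinmei images. The 4700 images were then randomly divided into a training set (3760 images), a validation set (640 images), and a test set (300 images). Mosaic online augmentation randomly selects four Xinmei images during training and stitches them into a new image through random scaling, random cropping, and random placement. This increases the diversity of the training data and thus improves the generalization of the model; the effect is shown in Figs. 2g and 2h. During Mosaic augmentation, each randomly combined image keeps its own features while highly similar images are avoided, which greatly enriches the backgrounds of the Xinmei images and more realistically simulates the orchard environment, so the model adapts better.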
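The random three-way split described above can be sketched as follows. This is a minimal illustration, not the authors' script: the file names and the fixed seed are hypothetical, and only the subset sizes (3760/640/300 out of 4700) come from the text.

```python
import random


def split_dataset(items, n_train, n_val, n_test, seed=0):
    """Shuffle items and cut them into train/val/test subsets of fixed sizes."""
    if n_train + n_val + n_test != len(items):
        raise ValueError("subset sizes must add up to the number of items")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is reproducible
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test


# Hypothetical file names standing in for the 4700 augmented images.
images = [f"xinmei_{i:04d}.jpg" for i in range(4700)]
train, val, test = split_dataset(images, 3760, 640, 300)
print(len(train), len(val), len(test))  # 3760 640 300
```

Because offline augmentation creates several variants of each original photo, grouping the variants of one photo before splitting would keep near-duplicates out of the test set; the text does not state whether the split was done per photo or per augmented image.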

1.3 Construction of the SSF-YOLOv5s model

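Xinmei fruits are small and the branches and leaves are dense, so many fruits are occluded by leaves and trunks; their key features are hidden, which leads to false detections. In addition, many fruits overlap with large overlapping areas, which leads to missed detections. To improve the detection of Xinmei occluded by trunks and leaves, the CA attention mechanism is first introduced into the C3 modules of the backbone to strengthen the network's extraction of key Xinmei features while reducing the number of parameters. Second, to improve the recognition of overlapping fruit, a weighted bidirectional feature pyramid network is introduced into the Neck to strengthen fusion between feature levels, alleviating inaccurate recognition caused by overlap and raising detection accuracy. Finally, the SIoU loss function is introduced to improve the overlap between predicted and ground-truth boxes. The improved model is shown in Fig. 3.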

1.3.1 Adding the attention mechanism

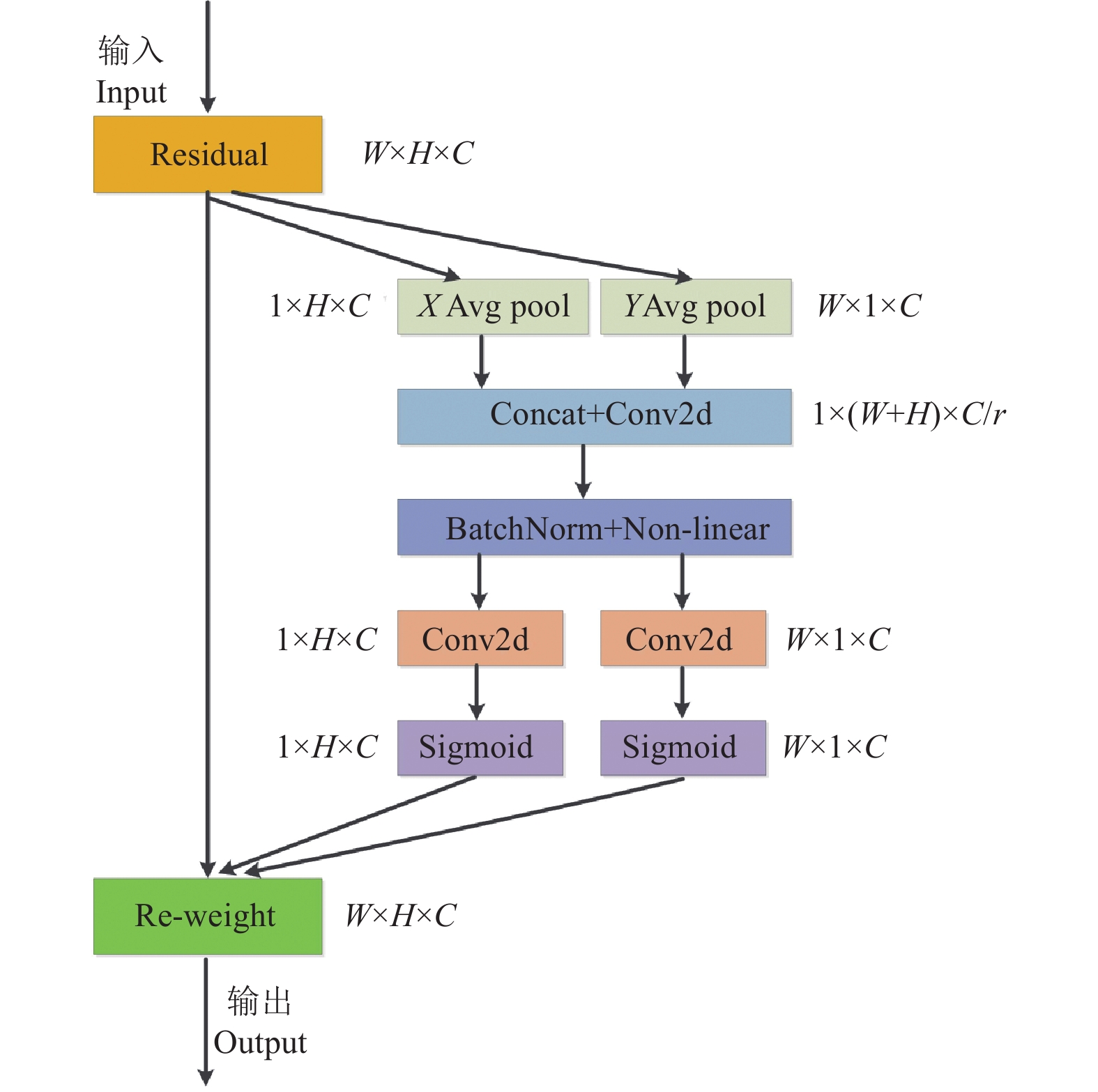

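To improve the model's extraction of key Xinmei features in complex environments and reduce background interference, the CA attention mechanism [18-19] is introduced. CA is flexible and lightweight and avoids large computational overhead; its structure is shown in Fig. 4.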

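CA first pools the input feature map along the X and Y directions, producing two direction-aware feature maps. The two maps are concatenated and passed through a 1×1 convolution that reduces the channel count to $C/r$ (where $r$ is the reduction ratio), giving feature map F, which is then processed by batch normalization and a nonlinear activation to give feature map f. Next, f is split back into X-direction and Y-direction feature maps, and a 1×1 convolution restores each of them to the C channels of the input feature map. A Sigmoid activation then yields the attention weights $g^w$ and $g^h$ for the two directions, which are multiplied with the input to obtain feature maps carrying attention weights in both the X and Y directions.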
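The pooling, shared 1×1 reduction, split, and Sigmoid reweighting steps above can be sketched in plain Python as follows. This is an illustrative forward pass only, under stated assumptions: the weights are supplied by the caller rather than learned, batch normalization is omitted (at inference it can be folded into the convolution), and ReLU stands in for the nonlinearity (the original CA paper [18] uses h-swish). The helper names `conv1x1` and `coordinate_attention` are hypothetical.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def conv1x1(weight, feat):
    """1x1 convolution as a channel-mixing matrix product.

    weight: out_channels x in_channels; feat: in_channels x positions.
    """
    return [[sum(weight[o][i] * feat[i][p] for i in range(len(feat)))
             for p in range(len(feat[0]))]
            for o in range(len(weight))]


def coordinate_attention(x, w_reduce, w_h, w_w):
    """Coordinate-attention forward pass on one C x H x W feature map.

    w_reduce: (C/r) x C shared reduction; w_h, w_w: C x (C/r) per direction.
    """
    C, H, W = len(x), len(x[0]), len(x[0][0])
    # 1) Directional average pooling: over width -> C x H, over height -> C x W.
    z_h = [[sum(x[c][i]) / W for i in range(H)] for c in range(C)]
    z_w = [[sum(x[c][i][j] for i in range(H)) / H for j in range(W)]
           for c in range(C)]
    # 2) Concatenate along the spatial axis, reduce to C/r channels, apply ReLU
    #    (stand-in for BN + nonlinearity).
    cat = [z_h[c] + z_w[c] for c in range(C)]
    f = [[max(0.0, v) for v in row] for row in conv1x1(w_reduce, cat)]
    # 3) Split back into the height part and the width part.
    f_h = [row[:H] for row in f]
    f_w = [row[H:] for row in f]
    # 4) Restore C channels and squash to (0, 1) attention weights g^h, g^w.
    g_h = [[sigmoid(v) for v in row] for row in conv1x1(w_h, f_h)]
    g_w = [[sigmoid(v) for v in row] for row in conv1x1(w_w, f_w)]
    # 5) Reweight every position by its row weight and its column weight.
    return [[[x[c][i][j] * g_h[c][i] * g_w[c][j] for j in range(W)]
             for i in range(H)]
            for c in range(C)]


# Toy example: C = 4, H = 2, W = 3, reduction ratio r = 2 (so C/r = 2).
x = [[[float(c + i + j) for j in range(3)] for i in range(2)] for c in range(4)]
zeros_reduce = [[0.0] * 4 for _ in range(2)]
zeros_expand = [[0.0] * 2 for _ in range(4)]
y = coordinate_attention(x, zeros_reduce, zeros_expand, zeros_expand)
# With all-zero weights every gate is sigmoid(0) = 0.5, so y equals 0.25 * x.
```

Because each gate lies in (0, 1), the block can only attenuate positions; learned weights decide which rows and columns, and therefore which fruit locations, are attenuated least.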

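When improving YOLOv5s, this paper combines the C3 modules of the Backbone with the CA attention mechanism: the Bottleneck in the original C3 module is replaced with a CABottleneck, forming the C3CA module.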

1.3.2 Weighted bidirectional feature pyramid network

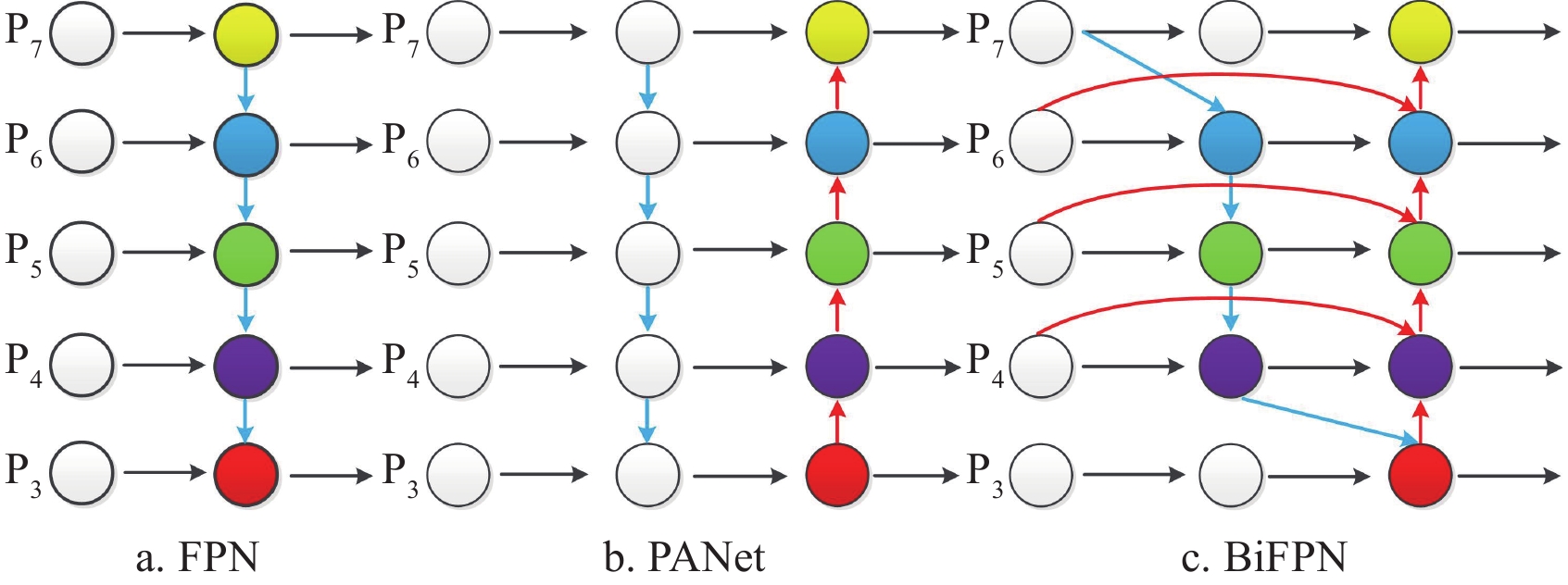

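Currently, the Neck of YOLOv5s uses the path aggregation network (PANet) [20] as its feature network; its structure is shown in Fig. 5b. PANet was developed from the feature pyramid network (FPN) [21]. Compared with FPN, PANet adds a bottom-up fusion path that passes low-level image features to higher levels so that they are fully used, instead of relying on FPN's single top-down path. However, FPN and PANet simply sum the different input features without distinguishing among them. Because the input feature maps have different resolutions, FPN and PANet overlook some important features during fusion. To solve this problem, this paper introduces the simple and efficient weighted bidirectional feature pyramid network (BiFPN) [22], shown in Fig. 5c, to replace PANet in the original YOLOv5s. BiFPN improves on PANet in two ways: it introduces learnable weights that learn the importance of each input feature, and it repeatedly applies top-down and bottom-up multi-scale fusion. This combination of weighted and multi-scale fusion effectively addresses the unsatisfactory fusion of conventional feature networks and achieves more efficient feature fusion. Because feature maps of different resolutions contribute differently to the fused output, BiFPN performs weighted feature fusion with a fast normalized fusion module, defined in Eq. (1).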
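As a minimal sketch of the fast normalized fusion formalized in Eq. (1), the function below fuses feature maps that are assumed to have already been resized to a common resolution (in BiFPN this is done by upsampling or downsampling) and flattened into lists. In a real network the weights are learnable parameters; here they are plain numbers supplied by the caller, and the feature names are hypothetical.

```python
def fast_normalized_fusion(inputs, weights, eps=1e-4):
    """Weighted fusion O = sum_i w_i / (eps + sum_j w_j) * I_i.

    inputs: equal-length lists, one flattened feature map per input.
    weights: one raw (unconstrained) scalar per input.
    """
    if len(inputs) != len(weights):
        raise ValueError("need exactly one weight per input feature map")
    w = [max(0.0, wi) for wi in weights]  # ReLU keeps every w_i >= 0
    denom = eps + sum(w)                   # eps avoids division by ~0
    return [sum(w[i] / denom * inputs[i][k] for i in range(len(inputs)))
            for k in range(len(inputs[0]))]


p4_td = [1.0, 2.0, 3.0]  # hypothetical top-down feature
p4_in = [3.0, 4.0, 5.0]  # hypothetical lateral input feature
# Equal weights give (almost exactly) the element-wise average.
print(fast_normalized_fusion([p4_td, p4_in], [1.0, 1.0]))
# A negative raw weight is clipped to 0, so only the first input contributes.
print(fast_normalized_fusion([p4_td, p4_in], [1.0, -2.0]))
```

The normalized weights sum to just under 1, so they behave like a softmax over inputs; the EfficientDet authors [22] chose this form because it avoids the exponentials of softmax-based fusion, which they found slower on GPUs.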

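$$O=\sum_{i}\frac{w_i}{\epsilon+\sum_{j}w_j}\cdot I_i \tag{1}$$

where $O$ is the output feature after weighted fusion, $w_i$ is the learnable weight of input feature $I_i$, kept non-negative ($w_i \ge 0$) by a ReLU activation, and $\epsilon$ is a small constant that prevents numerical instability, usually set to 0.0001.

1.3.3 Improved loss function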

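YOLOv5s uses the CIoU loss function [23], which considers the distance, overlap area, and aspect ratio between the predicted and ground-truth boxes but ignores the directional mismatch between them, so the model converges slowly and inefficiently. To solve this problem, this study introduces the SIoU loss function [24]. SIoU incorporates the vector angle between the ground-truth and predicted boxes, so that the predicted box aligns better with the ground-truth box and the model converges faster. The SIoU loss is defined as follows: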

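$$L_{SIoU}=1-IoU+\frac{\Delta+\Omega}{2} \tag{2}$$

where $IoU$ is the intersection over union between the predicted box $B$ and the ground-truth box $B^{GT}$, $\Delta$ is the distance loss, and $\Omega$ is the shape loss:

$$IoU=\frac{\left|B\cap B^{GT}\right|}{\left|B\cup B^{GT}\right|} \tag{3}$$

$$\Delta=\sum_{t=x,y}\left(1-e^{-\gamma\rho_t}\right)=2-e^{-\gamma\rho_x}-e^{-\gamma\rho_y} \tag{4}$$

$$\Omega=\sum_{t=w,h}\left(1-e^{-w_t}\right)^{\theta}=\left(1-e^{-w_w}\right)^{\theta}+\left(1-e^{-w_h}\right)^{\theta} \tag{5}$$

where $\theta$ controls how much attention is paid to the shape loss and generally takes a value between 2 and 6, and $\rho_x$, $\rho_y$, $\gamma$, $w_w$, and $w_h$ are given by:

$$\rho_x=\left(\frac{b_{c_x}^{gt}-b_{c_x}}{c_w}\right)^2 \tag{6}$$

$$\rho_y=\left(\frac{b_{c_y}^{gt}-b_{c_y}}{c_h}\right)^2 \tag{7}$$

$$\gamma=2-\varLambda \tag{8}$$

$$w_w=\frac{\left|w-w^{gt}\right|}{\max\left(w,w^{gt}\right)} \tag{9}$$

$$w_h=\frac{\left|h-h^{gt}\right|}{\max\left(h,h^{gt}\right)} \tag{10}$$

where $b_{c_x}^{gt}$ and $b_{c_y}^{gt}$ are the center coordinates of the ground-truth box, $c_w$ and $c_h$ are the width and height of the smallest rectangle enclosing both the ground-truth and predicted boxes, $b_{c_x}$ and $b_{c_y}$ are the center coordinates of the predicted box, $\varLambda$ is the angle loss, $w$ and $h$ are the width and height of the predicted box, and $w^{gt}$ and $h^{gt}$ are the width and height of the ground-truth box.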
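The plain-Python sketch below evaluates Eqs. (2) to (10) for a single pair of boxes given as (center x, center y, width, height). The text does not define the angle loss $\varLambda$; the sketch assumes the form from the SIoU paper [24], $\varLambda = 1 - 2\sin^2(\arcsin(\sin\alpha) - \pi/4)$, where $\alpha$ is the angle between the line joining the two box centers and the x axis. The choice θ = 4 is an arbitrary value inside the 2 to 6 range given above, and the function name `siou_loss` is hypothetical; this is not the batched tensor implementation used during YOLOv5 training.

```python
import math


def siou_loss(pred, gt, theta=4.0, eps=1e-9):
    """SIoU loss for one predicted box and one ground-truth box, each (cx, cy, w, h)."""
    px, py, pw, ph = pred
    gx, gy, gw, gh = gt
    # Corner coordinates of both boxes.
    p_x1, p_y1, p_x2, p_y2 = px - pw / 2, py - ph / 2, px + pw / 2, py + ph / 2
    g_x1, g_y1, g_x2, g_y2 = gx - gw / 2, gy - gh / 2, gx + gw / 2, gy + gh / 2

    # Eq. (3): intersection over union.
    inter = (max(0.0, min(p_x2, g_x2) - max(p_x1, g_x1))
             * max(0.0, min(p_y2, g_y2) - max(p_y1, g_y1)))
    iou = inter / (pw * ph + gw * gh - inter + eps)

    # Width and height of the smallest box enclosing both (c_w, c_h).
    c_w = max(p_x2, g_x2) - min(p_x1, g_x1)
    c_h = max(p_y2, g_y2) - min(p_y1, g_y1)

    # Angle loss (assumed form from the SIoU paper): 0 when the centers are
    # aligned horizontally or vertically, 1 at a 45-degree offset.
    sigma = math.hypot(gx - px, gy - py)
    sin_alpha = min(1.0, abs(gy - py) / (sigma + eps))
    angle_loss = 1 - 2 * math.sin(math.asin(sin_alpha) - math.pi / 4) ** 2

    # Eqs. (4), (6)-(8): distance loss, sharpened by the angle through gamma.
    gamma = 2 - angle_loss
    rho_x = ((gx - px) / c_w) ** 2
    rho_y = ((gy - py) / c_h) ** 2
    distance_loss = 2 - math.exp(-gamma * rho_x) - math.exp(-gamma * rho_y)

    # Eqs. (5), (9), (10): shape loss.
    w_w = abs(pw - gw) / max(pw, gw)
    w_h = abs(ph - gh) / max(ph, gh)
    shape_loss = (1 - math.exp(-w_w)) ** theta + (1 - math.exp(-w_h)) ** theta

    # Eq. (2).
    return 1 - iou + (distance_loss + shape_loss) / 2


print(siou_loss((0, 0, 1, 1), (0, 0, 1, 1)))  # approximately 0 for a perfect match
far = siou_loss((0, 0, 1, 1), (3, 0, 1, 1))
near = siou_loss((1, 0, 1, 1), (3, 0, 1, 1))
print(far > near > 1)  # True: both IoU = 0, but the nearer box has a lower distance loss
```

The last example shows why the distance term matters: once boxes stop overlapping, $1 - IoU$ is stuck at 1 and gives no gradient, while the distance loss still rewards moving the predicted box toward the target.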

1.4 Experimental environment and evaluation criteria

1.4.1 Experimental environment

Experiments were run on 64-bit Windows 10 with 32 GB of memory, an NVIDIA GeForce RTX 4080 graphics card, and a 13th Gen Intel(R) Core(TM) i7-13700KF processor, using the PyTorch 1.12.1 deep learning framework, CUDA 12.0, and Python 3.7.

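The model input size was 640×640 pixels, the batch size (batch_size) was 16, the initial learning rate was 0.01, the momentum was 0.937, and the weight decay coefficient was 0.0005. Model parameters were optimized with stochastic gradient descent (SGD) for 200 epochs.

1.4.2 Evaluation metrics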

To evaluate model performance accurately, this paper uses precision (P), recall (R), mean average precision (mAP), number of parameters, computation amount, model weight size, and detection time (t) as evaluation metrics.
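The accuracy metrics listed above can be computed as in the sketch below, which assumes the standard definitions: P = TP/(TP+FP), R = TP/(TP+FN), AP as the area under the precision-recall curve (here with VOC-style all-point interpolation), and mAP as the mean AP over the three Xinmei classes. The text does not state the IoU threshold for a true positive or the interpolation scheme, and the YOLOv5 validation code by default uses a 101-point interpolated variant, so numbers from this sketch may differ slightly. The counts and curve points in the example are made up.

```python
def precision_recall(tp, fp, fn):
    """Precision and recall from true-positive, false-positive and false-negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def average_precision(recalls, precisions):
    """All-point interpolated AP from a PR curve sorted by increasing recall."""
    mrec = [0.0] + list(recalls) + [1.0]
    mpre = [0.0] + list(precisions) + [0.0]
    # Precision envelope: make precision non-increasing from right to left.
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Sum rectangle areas; steps where recall does not change add zero.
    return sum((mrec[i + 1] - mrec[i]) * mpre[i + 1] for i in range(len(mrec) - 1))


def mean_average_precision(ap_per_class):
    """mAP: unweighted mean of the per-class AP values."""
    return sum(ap_per_class) / len(ap_per_class)


p, r = precision_recall(tp=90, fp=10, fn=10)
ap = average_precision([0.5, 1.0], [1.0, 0.5])
print(p, r, ap)  # 0.9 0.9 0.75
```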

2. Results and analysis

2.1 Comparison of different attention mechanisms

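To analyze the performance of different attention mechanisms, comparative tests were conducted in which the CA attention mechanism in SSF-YOLOv5s was replaced with three other attention mechanisms: SENet (squeeze-and-excitation networks) [25], CBAM (convolutional block attention module) [26], and ECA (efficient channel attention) [27]. The results are shown in Table 1.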

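Table 1. Comparative performance of different attention mechanisms

| Attention mechanism | Precision P/% | Mean average precision mAP/% | Model weight/MB | Detection time/ms |
|---|---|---|---|---|
| SENet | 90.6 | 97.2 | 13.6 | 13.2 |
| CBAM | 92.3 | 97.3 | 13.6 | 14.1 |
| ECA | 90.2 | 97.4 | 13.5 | 13.6 |
| CA | 93.4 | 97.7 | 13.6 | 12.1 |

As Table 1 shows, the model weight is nearly the same whichever attention mechanism is used, and SSF-YOLOv5s with CA performs best. Compared with SENet, CBAM, and ECA, CA raises precision by 2.8, 1.1, and 3.2 percentage points and mAP by 0.5, 0.4, and 0.3 percentage points, respectively, and gives the shortest detection time. These results indicate that CA extracts key Xinmei features better than the other three attention mechanisms. The reason is that CA embeds the positional information of Xinmei into channel attention, so it considers both channel information and direction-aware positional information; the model therefore focuses more on key features, which effectively improves detection accuracy.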

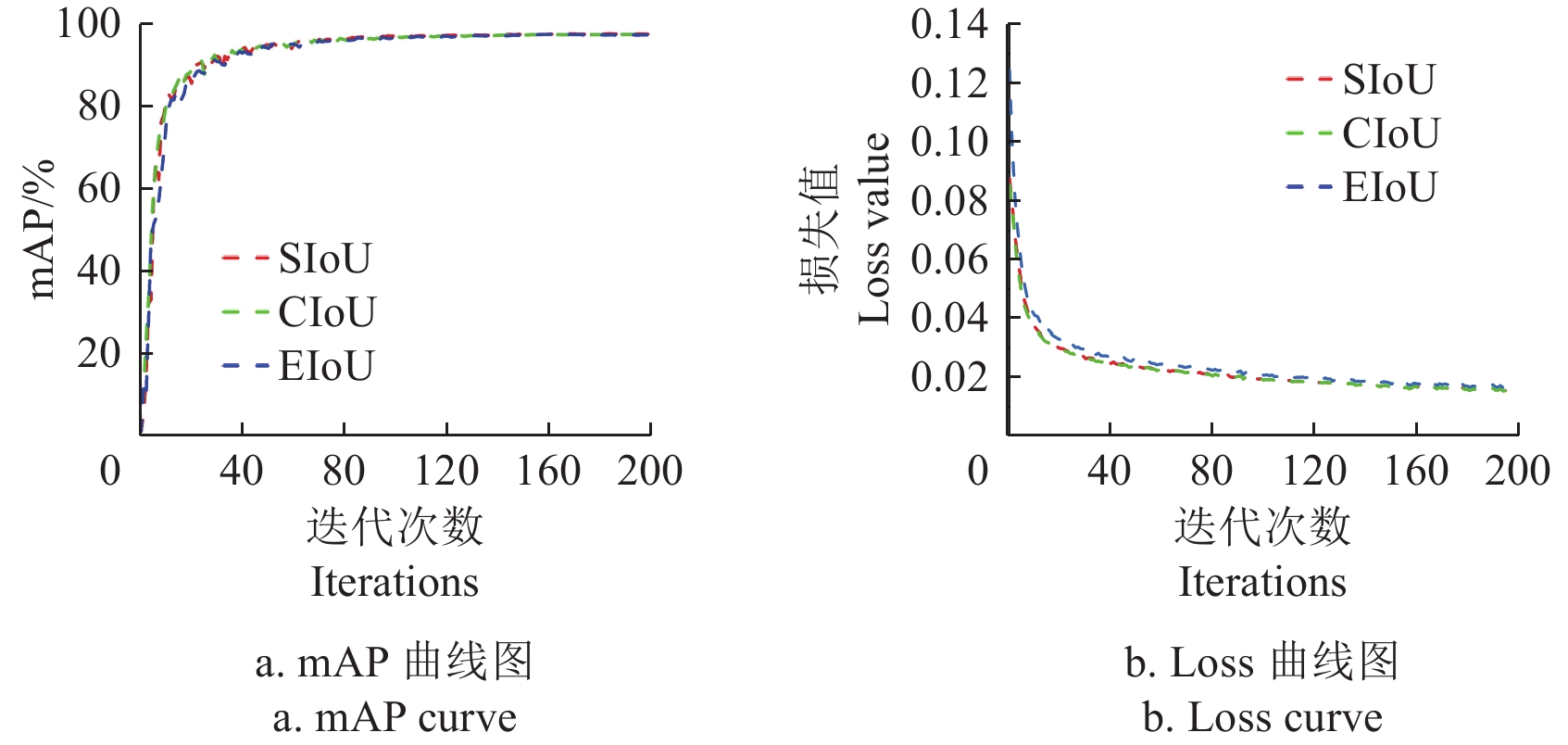

2.2 Performance comparison of different loss functions

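To analyze the performance of different loss functions on the Xinmei dataset, SSF-YOLOv5s was trained with the SIoU, CIoU, and EIoU [28] loss functions. Fig. 6a shows the mAP curves: all three curves level off after epoch 185, and under the same conditions the model trained with SIoU reaches the highest mAP, while CIoU and EIoU give slightly lower mAP. Fig. 6b shows the training loss curves: EIoU converges slowest and has the highest loss, while SIoU and CIoU converge at similar speeds, with SIoU reaching a slightly lower loss than CIoU. The reason is that SIoU incorporates the vector angle between the ground-truth and predicted boxes, which effectively reduces the loss generated during box regression, accelerates convergence, and thus improves accuracy.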

2.3 Ablation study

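To verify the effectiveness of the three improvements on the Xinmei dataset, five groups of ablation tests were designed. As Table 2 shows, compared with the original YOLOv5s, adding the CA attention mechanism raises mAP, precision, and recall by 0.2, 0.2, and 1.2 percentage points, respectively, while reducing the parameter count from 7.02 M to 6.41 M. This indicates that introducing CA into the C3 module strengthens the model's attention to channel and spatial position information, improves its extraction of Xinmei fruit features, and reduces the number of parameters to some extent.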

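Table 2. Comparison of ablation test results

| CA | BiFPN | SIoU | AP underripe Xinmei/% | AP ripe Xinmei/% | AP disease Xinmei/% | mAP/% | P/% | R/% | Parameters/M |
|---|---|---|---|---|---|---|---|---|---|
| × | × | × | 94.6 | 98.5 | 98.1 | 97.1 | 91.4 | 92.8 | 7.02 |
| √ | × | × | 94.5 | 98.5 | 98.9 | 97.3 | 91.6 | 94.0 | 6.41 |
| √ | √ | × | 95.2 | 98.7 | 98.8 | 97.6 | 91.8 | 94.5 | 6.56 |
| √ | × | √ | 95.0 | 98.5 | 98.7 | 97.4 | 92.2 | 94.0 | 6.41 |
| √ | √ | √ | 95.4 | 98.7 | 98.9 | 97.7 | 93.4 | 92.9 | 6.56 |

Note: "√" means the module is used; "×" means it is not used.

Compared with adding CA alone, further introducing the weighted bidirectional feature pyramid network raises mAP, precision, and recall by 0.3, 0.2, and 0.5 percentage points, respectively, with a slight increase in parameters (from 6.41 M to 6.56 M). This indicates that the weighted bidirectional feature pyramid network strengthens fusion between feature levels and improves the recognition of overlapping fruit, effectively raising recognition accuracy at the cost of a small increase in parameters. Using the SIoU loss function instead of BiFPN (CA + SIoU) slightly lowers recall and mAP but raises precision, indicating that SIoU makes the predicted boxes align better with the ground-truth boxes and thus improves precision. With all three improvements, precision, recall, and mAP reach 93.4%, 92.9%, and 97.7%, respectively; compared with the original model, mAP and precision rise by 0.6 and 2.0 percentage points, recall rises slightly, and the parameter count falls by 0.46 M. Overall, the ablation results show that combining all three improvements gives the best recognition performance and a lighter model.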
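Because mAP is the mean of the per-class AP values, Table 2 can be cross-checked with a few lines of arithmetic. The sketch below re-enters the table values by hand (the dictionary layout and helper names are illustrative choices) and confirms that each reported mAP matches the rounded mean of its three AP columns and that the gains quoted in the text follow from the table.

```python
# Table 2 rows keyed by (CA, BiFPN, SIoU); values are
# (AP underripe, AP ripe, AP disease, mAP, P, R) in % and parameters in M.
TABLE2 = {
    (False, False, False): (94.6, 98.5, 98.1, 97.1, 91.4, 92.8, 7.02),
    (True, False, False): (94.5, 98.5, 98.9, 97.3, 91.6, 94.0, 6.41),
    (True, True, False): (95.2, 98.7, 98.8, 97.6, 91.8, 94.5, 6.56),
    (True, False, True): (95.0, 98.5, 98.7, 97.4, 92.2, 94.0, 6.41),
    (True, True, True): (95.4, 98.7, 98.9, 97.7, 93.4, 92.9, 6.56),
}


def mean_class_ap(row):
    """mAP recomputed as the mean of the three per-class AP values."""
    return sum(row[:3]) / 3


def gain(new, old, ndigits=1):
    """Difference between two table entries, rounded like the table."""
    return round(new - old, ndigits)


baseline, full = TABLE2[(False, False, False)], TABLE2[(True, True, True)]
for modules, row in TABLE2.items():
    print(modules, round(mean_class_ap(row), 1), row[3])
print("mAP gain:", gain(full[3], baseline[3]))                 # 0.6
print("precision gain:", gain(full[4], baseline[4]))           # 2.0
print("parameter reduction:", gain(baseline[6], full[6], 2))   # 0.46
```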

2.4 Performance comparison of different detection models

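To further demonstrate the advantages of the improved model, it was compared with the mainstream detectors Faster R-CNN, YOLOv3, YOLOv4, YOLOv5s, YOLOv7, and YOLOv8s on the same dataset; the results are shown in Table 3.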

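Table 3. Performance comparison of different detection models

| Model | P/% | mAP/% | Computation amount/G | Model weight/MB | Detection time/ms |
|---|---|---|---|---|---|
| Faster R-CNN | 76.4 | 94.1 | 370.4 | 108 | 34.7 |
| YOLOv3 | 89.5 | 90.9 | 66.2 | 235 | 10.5 |
| YOLOv4 | 85.7 | 84.6 | 60.5 | 244 | 14.5 |
| YOLOv5s | 91.4 | 97.1 | 16.0 | 14.5 | 13.3 |
| YOLOv7 | 93.9 | 97.3 | 103.2 | 71.3 | 21.6 |
| YOLOv8s | 91.3 | 97.2 | 28.7 | 21.4 | 9.6 |
| SSF-YOLOv5s | 93.4 | 97.7 | 14.6 | 13.6 | 12.1 |

As Table 3 shows, compared with Faster R-CNN, YOLOv3, YOLOv4, YOLOv5s, and YOLOv8s, SSF-YOLOv5s improves precision by 17.0, 3.9, 7.7, 2.0, and 2.1 percentage points and mAP by 3.6, 6.8, 13.1, 0.6, and 0.5 percentage points, respectively. The feature network of Faster R-CNN obtains only a single low-resolution feature map, which is unfavorable for detecting small Xinmei fruits, so its precision is only 76.4%; YOLOv3 and YOLOv4 use feature pyramid networks to improve precision effectively; and YOLOv8s improves mAP by introducing the C2f module, which has richer gradient flow, into its backbone. Compared with YOLOv7, SSF-YOLOv5s has 0.4 percentage points higher mAP but 0.5 percentage points lower precision, which is attributed to YOLOv7's re-parameterized network and efficient layer aggregation network improving its precision. In terms of lightweight design, SSF-YOLOv5s has a computation amount of 14.6 G and a model weight of 13.6 MB, both the lowest of the seven models. Its detection time of 12.1 ms is 1.6 and 2.5 ms longer than those of YOLOv3 and YOLOv8s, respectively, but shorter than those of the other four models. In summary, SSF-YOLOv5s achieves the best mAP of the seven models with the least computation and the smallest weight file, and its detection time meets real-time requirements. It combines a lightweight design with high detection accuracy and can therefore recognize Xinmei quickly and accurately in complex orchard environments.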
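Two quantities behind this comparison can be reproduced from Table 3: the percentage-point mAP gains of SSF-YOLOv5s over each baseline, and the frame rate implied by each per-image detection time (1000 / t in ms). The frame-rate conversion is an added illustration; it assumes one image per forward pass and that the reported time covers the whole per-image pipeline, which the text does not break down.

```python
# Table 3 values: (mAP in %, detection time in ms per image).
TABLE3 = {
    "Faster R-CNN": (94.1, 34.7),
    "YOLOv3": (90.9, 10.5),
    "YOLOv4": (84.6, 14.5),
    "YOLOv5s": (97.1, 13.3),
    "YOLOv7": (97.3, 21.6),
    "YOLOv8s": (97.2, 9.6),
    "SSF-YOLOv5s": (97.7, 12.1),
}


def fps(ms_per_image):
    """Frames per second implied by a per-image detection time in milliseconds."""
    return 1000.0 / ms_per_image


ours_map, ours_ms = TABLE3["SSF-YOLOv5s"]
for name, (map_, ms) in TABLE3.items():
    if name != "SSF-YOLOv5s":
        print(f"{name}: +{ours_map - map_:.1f} pp mAP, {fps(ms):.1f} FPS")
print(f"SSF-YOLOv5s: {fps(ours_ms):.1f} FPS")  # 82.6 FPS
```

At roughly 83 frames per second under this assumption, the model is well above typical video frame rates, which is consistent with the real-time claim.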

2.5 Analysis of detection results

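YOLOv5s and SSF-YOLOv5s were compared on the test set; the results are shown in Fig. 7. Both models recognize single and multiple targets accurately, but SSF-YOLOv5s gives higher detection confidence. With overlapping fruit, leaf occlusion, and distant fruit, YOLOv5s misses some fruits, whereas SSF-YOLOv5s reduces missed detections. In summary, SSF-YOLOv5s recognizes Xinmei well in complex environments and is suitable for detecting Xinmei fruit in real orchards.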

Figure 7. Comparison of model detection results

Note: The labels above the detection boxes, underripe Xinmei, ripe Xinmei, and disease Xinmei, denote underripe, ripe, and diseased Xinmei, respectively. Blue boxes mark missed Xinmei.

3. Conclusions

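To achieve fast and accurate detection of Xinmei fruit in complex orchard environments, this paper improved YOLOv5s and proposed the SSF-YOLOv5s Xinmei detection model. The main conclusions are as follows: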

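1) Adding the CA attention mechanism to the C3 module raised the mAP by 0.2 percentage points while reducing the parameter count. On this basis, introducing a weighted bidirectional feature pyramid network into the Neck raised the mAP 0.5 percentage points above that of the original YOLOv5s. Finally, using SIoU as the loss function accelerated convergence, and the precision of the final model was 2.0 percentage points higher than that of the original model.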

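2) Compared with Faster R-CNN, YOLOv3, YOLOv4, YOLOv5s, YOLOv7, and YOLOv8s, the proposed SSF-YOLOv5s model improves mAP by 3.6, 6.8, 13.1, 0.6, 0.4, and 0.5 percentage points, respectively; its model weight is only 13.6 MB, and its detection speed meets the requirements of real-time Xinmei detection.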

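3) SSF-YOLOv5s achieves an mAP of 97.7%, a precision of 93.4%, and a recall of 92.9%, all higher than those of the original model. Its detection time of 12.1 ms is 1.2 ms shorter than that of the original model, and its small computation and weight file favor deployment on mobile devices. In summary, SSF-YOLOv5s can meet the need for fast Xinmei detection in orchard environments and can provide a technical basis for future intelligent Xinmei harvesting.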


[1] WANG Ruixue, ZHU Licheng, ZHAO Bo, et al. Current status and typical applications of agricultural robot technology[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2022, 12(4): 5-11. (in Chinese with English abstract)

[2] GIRSHICK R, DONAHUE J, DARRELL T, et al. Region-based convolutional networks for accurate object detection and segmentation[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2015, 38(1): 142-158.

[3] REN S, HE K, GIRSHICK R, et al. Faster R-CNN: Towards real-time object detection with region proposal networks[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2017, 39(6): 1137-1149.

[4] HE K, GKIOXARI G, DOLLÁR P, et al. Mask R-CNN[C]//Proceedings of the IEEE international conference on computer vision. Venice, Italy: 2017 IEEE International Conference on Computer Vision, 2017: 2961-2969.

[5] LIU W, ANGUELOV D, ERHAN D, et al. SSD: Single shot multibox detector[C]//European Conference on Computer Vision. Berlin, Germany: Springer, 2016: 21-37.

[6] REDMON J, DIVVALA S, GIRSHICK R, et al. You only look once: Unified, real-time object detection[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Las Vegas, USA, 2016: 779-788.

[7] REDMON J, FARHADI A. Yolov3: An incremental improvement[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Salt Lake City, USA, 2018: 1125-1131.

[8] BOCHKOVSKIY A, WANG C Y, LIAO H Y M. Yolov4: Optimal speed and accuracy of object detection[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Seattle, USA, 2020.

[9] Ultralytics. Yolov5[EB/OL]. (2020-06-26) [2023-12-25]. https://github.com/ultralytics/yolov5.

[10] WANG C Y, BOCHKOVSKIY A, LIAO H Y M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Vancouver, BC, Canada: 2023 IEEE Conference on Computer Vision and Pattern Recognition, 2023: 7464-7475.

[11] ZHU X, MA H, JI J T, et al. Detecting and identifying blueberry canopy fruits based on Faster R-CNN[J]. Journal of Southern Agriculture, 2020, 51(6): 1493-1501.

[12] LONG Jiehua, ZHAO Chunjiang, LIN Sen, et al. Segmentation method of the tomato fruits with different maturities under greenhouse environment based on improved Mask R-CNN[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2021, 37(18): 100-108. (in Chinese with English abstract) doi: 10.11975/j.issn.1002-6819.2021.18.012

[13] LI Shanjun, HU Dingyi, GAO Shumin, et al. Real-time classification detection of citrus based on improved SSD[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2019, 35(24): 307-313. (in Chinese with English abstract) doi: 10.11975/j.issn.1002-6819.2019.24.036

[14] TIAN Y, YANG G, WANG Z, et al. Apple detection during different growth stages in orchards using the improved YOLO-V3 model[J]. Computers and Electronics in Agriculture, 2019, 157: 417-426. doi: 10.1016/j.compag.2019.01.012

[15] WANG Lishu, QIN Mingxia, LEI Jieya, et al. Blueberry ripeness recognition method based on improved YOLOv4-Tiny[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2021, 37(18): 170-178. (in Chinese with English abstract) doi: 10.11975/j.issn.1002-6819.2021.18.020

[16] FAN Wanpeng, LIU Mengnan, MA Jie, et al. The improved YOLOv5s was used to detect the maturity of lotus seed[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2023, 39(18): 183-191. (in Chinese with English abstract)

[17] LYU S, LI R, ZHAO Y, et al. Green citrus detection and counting in orchards based on YOLOv5-CS and AI edge system[J]. Sensors, 2022, 22(2): 576. doi: 10.3390/s22020576

[18] HOU Q B, ZHOU D Q, FENG J S. Coordinate attention for efficient mobile network design[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Virtual: IEEE, 2021: 13713-13722.

[19] ZHU Deli, WEN Rui, XIONG Junyi. Lightweight corn silk detection network incorporating with coordinate attention mechanism[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2023, 39(3): 145-153. (in Chinese with English abstract)

[20] LIU S, QI L, QIN H, et al. Path aggregation network for instance segmentation[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Salt Lake City, UT, USA, 2018: 8759-8768.

[21] LIN T Y, DOLLÁR P, GIRSHICK R, et al. Feature pyramid networks for object detection[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Honolulu, HI, USA, 2017: 2117-2125.

[22] TAN M, PANG R, LE Q V. EfficientDet: Scalable and efficient object detection[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Seattle, WA, USA, 2020: 10778-10787.

[23] LIN T Y, GOYAL P, GIRSHICK R, et al. Focal loss for dense object detection[C]//Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy: 2017: 2980-2988.

[24] GEVORGYAN Z. SIoU loss: More powerful learning for bounding box regression[C]//Computer Vision and Pattern Recognition. New Orleans, 2022: 1-12.

[25] HU J, SHEN L, SUN G. Squeeze-and-excitation networks[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2020, 42(8): 2011-2023. doi: 10.1109/TPAMI.2019.2913372

[26] WOO S, PARK J, LEE J Y, et al. CBAM: Convolutional block attention module[C]//Proceedings of the European Conference on Computer Vision. Munich, Germany: Springer, 2018: 3-19.

[27] WANG Q, WU B, ZHU P, et al. ECA-Net: Efficient channel attention for deep convolutional neural networks[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE, 2020: 11534-11542.

[28] ZHANG Y F, REN W, ZHANG Z, et al. Focal and efficient IOU loss for accurate bounding box regression[J]. Neurocomputing, 2022, 506: 146-157. doi: 10.1016/j.neucom.2022.07.042
