Target detection algorithm for Phalaenopsis seedlings based on lightweight YOLOv8n-Aerolite


Summary:

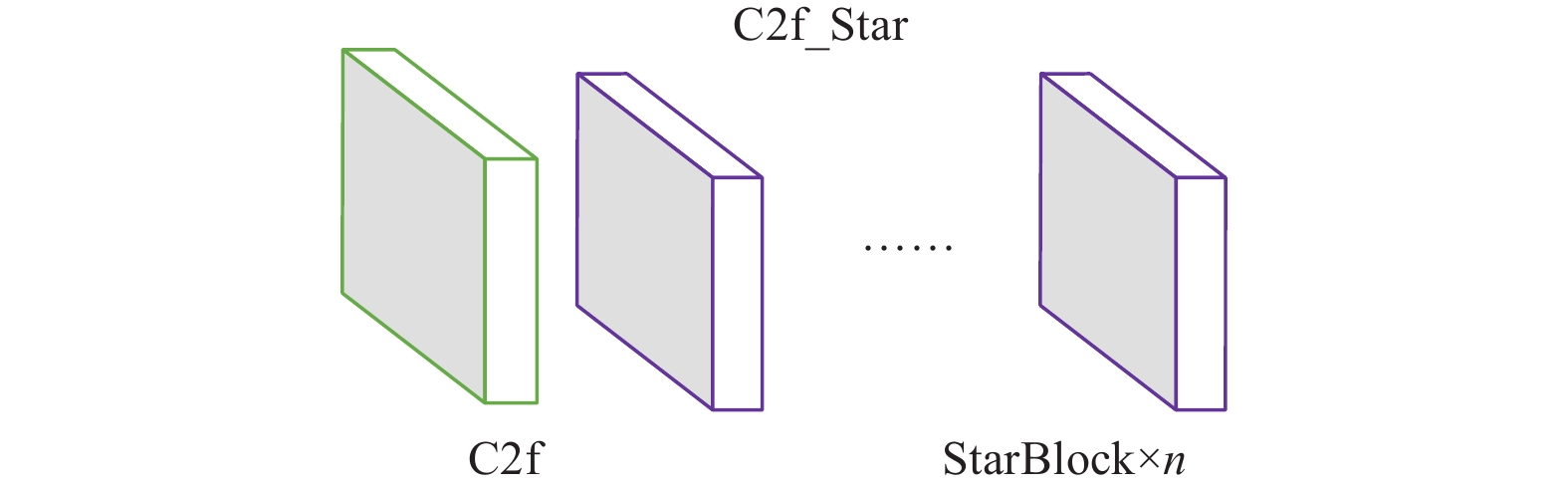

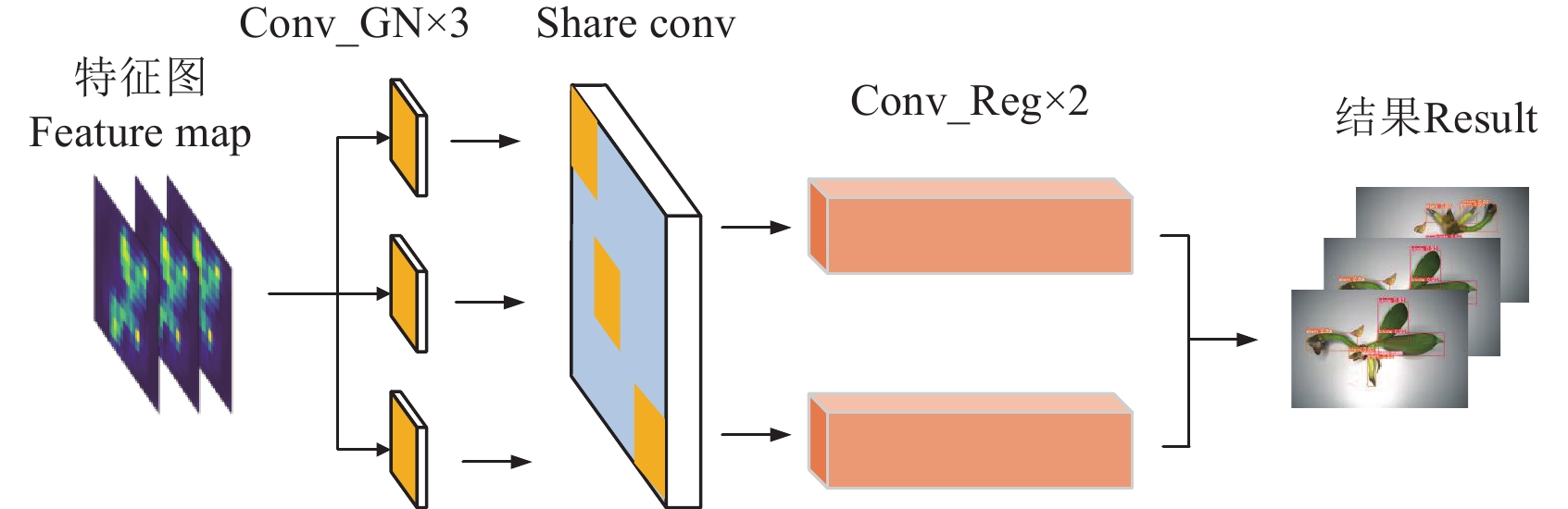

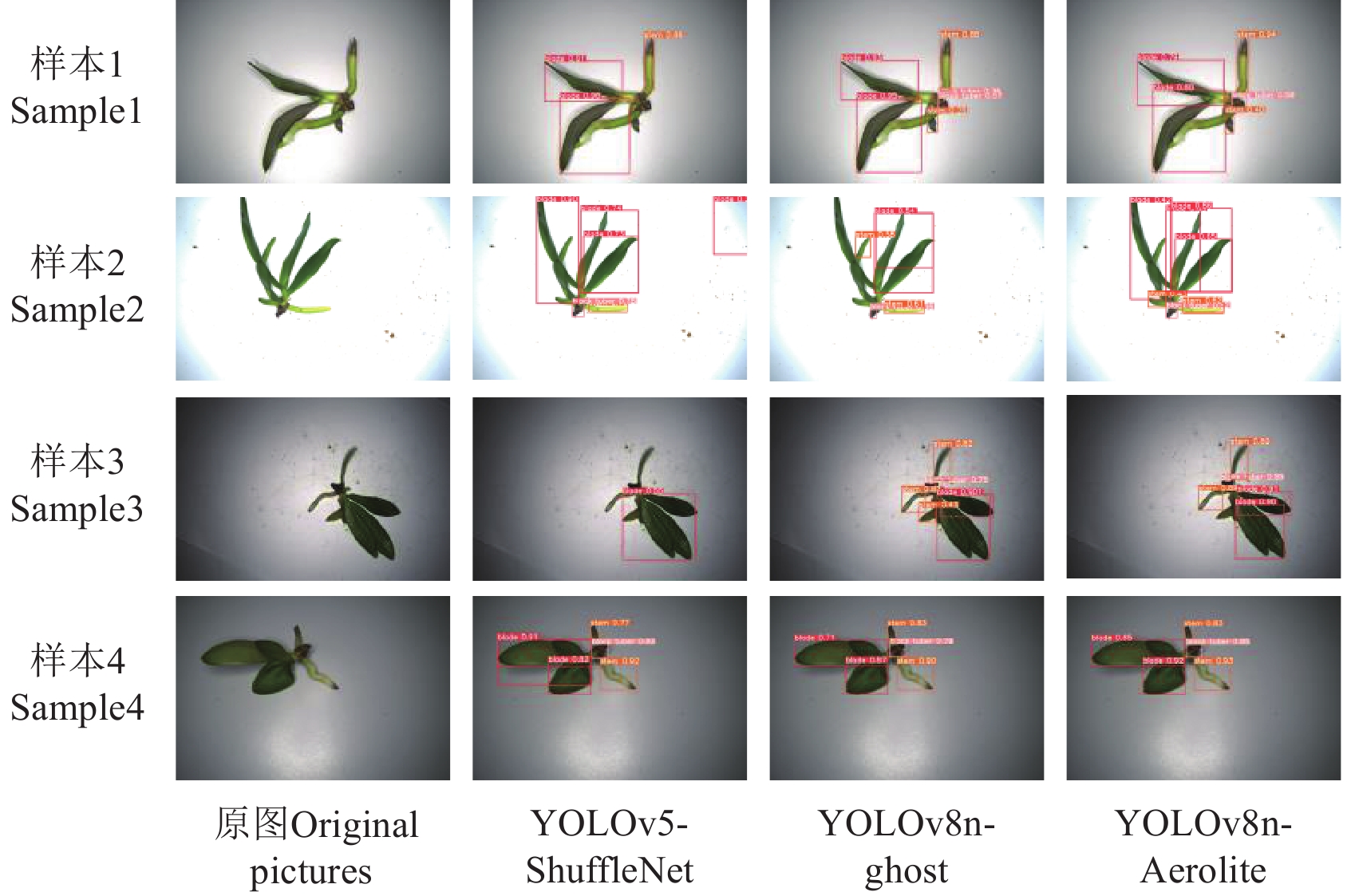

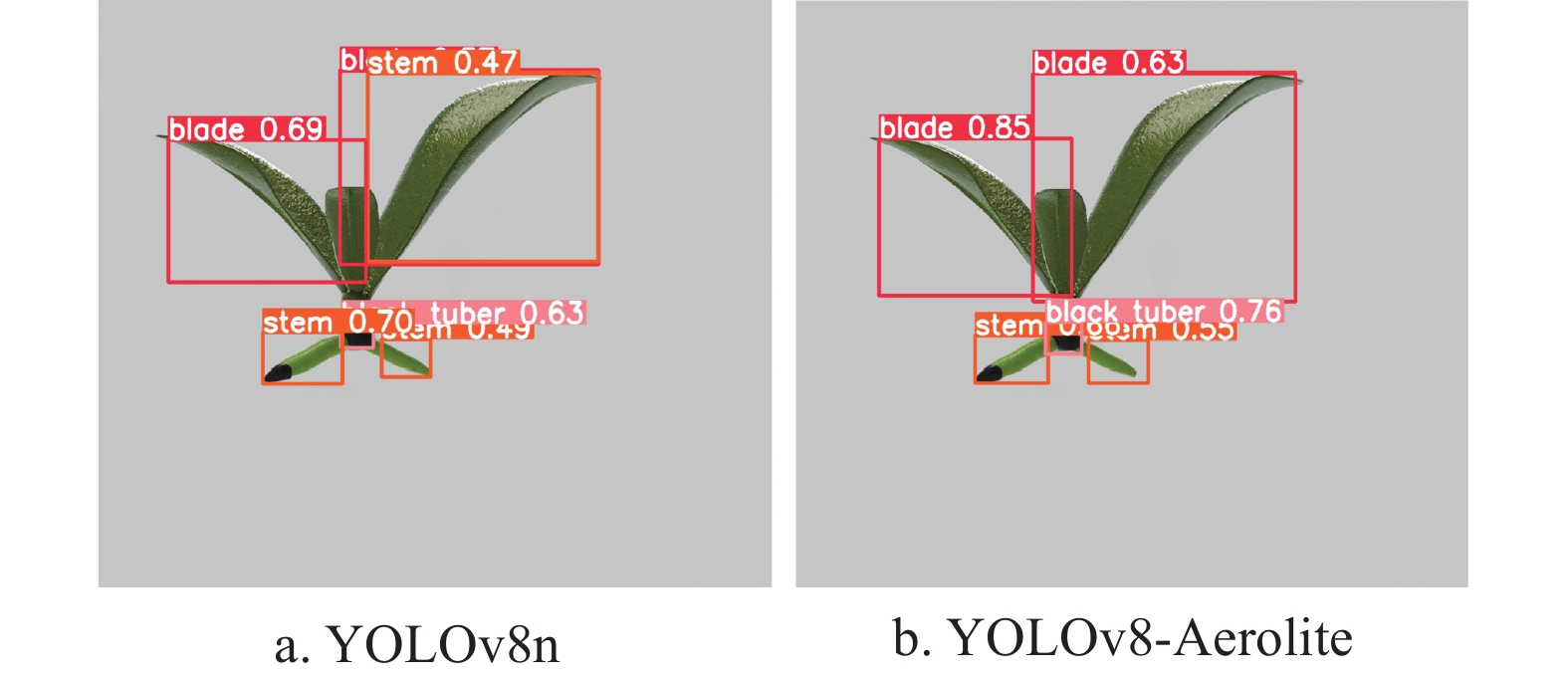

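Detection of small plant tissues is important for the development of automated plant cultivation. To improve the efficiency of visual detection of gripping points on Phalaenopsis seedlings, and to address the large parameter counts and slow detection speeds of existing models, this study proposes a lightweight object detection algorithm, YOLOv8n-Aerolite. First, StarNet is adopted as the backbone network, and a pooling layer embedding large separable kernel convolution, SPPF_LSKA (large-separable-kernel-attention), is added on top of it to make the model lightweight while preserving accuracy. Next, a C2f_Star module incorporating the StarBlock is used in the neck network to improve the accuracy of Phalaenopsis seedling detection. Finally, a lightweight detection head based on shared convolutions, Detect_LSCD (lightweight shared convolutional detection head), is adopted to improve the accuracy and speed of small-object detection. In object detection tests on a Phalaenopsis seedling image dataset, YOLOv8n-Aerolite reached an average inference speed of 435.8 frames/s and an mAP0.5 of 91.1%, with a weight file of only 3.1 MB. The detection precision for the small target on which the gripping point lies reached 91.6%, and the success rate in seedling gripping tests was 78%. The results can serve as a reference for developing automated cultivation technologies for small crops.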
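The summary attributes part of the model's lightness to a "large separable kernel". The sketch below shows why separability saves parameters. It assumes the LSKA-style factorization of a k×k depthwise kernel into cascaded 1×k and k×1 depthwise kernels. The channel count and kernel sizes are hypothetical, not values from this paper, and the dilated branch of LSKA is left out.

```python
def depthwise_params(channels: int, kh: int, kw: int, bias: bool = False) -> int:
    """Weights of a depthwise conv: one kh x kw filter per channel (plus optional bias)."""
    return channels * kh * kw + (channels if bias else 0)


def separable_depthwise_params(channels: int, k: int, bias: bool = False) -> int:
    """Cascaded 1 x k then k x 1 depthwise convs (LSKA-style factorization, assumed)."""
    return depthwise_params(channels, 1, k, bias) + depthwise_params(channels, k, 1, bias)


if __name__ == "__main__":
    c = 256  # hypothetical channel count at the SPPF stage
    for k in (7, 11, 23, 35):
        full = depthwise_params(c, k, k)
        sep = separable_depthwise_params(c, k)
        # Without bias, the ratio is k*k / (2*k) = k / 2.
        print(f"k={k:>2}: full {full:>7,d}  separable {sep:>6,d}  ratio {full / sep:.1f}x")
```

Without bias terms, the saving grows linearly with kernel size (a ratio of k/2). This is why a large receptive field becomes affordable on edge devices.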

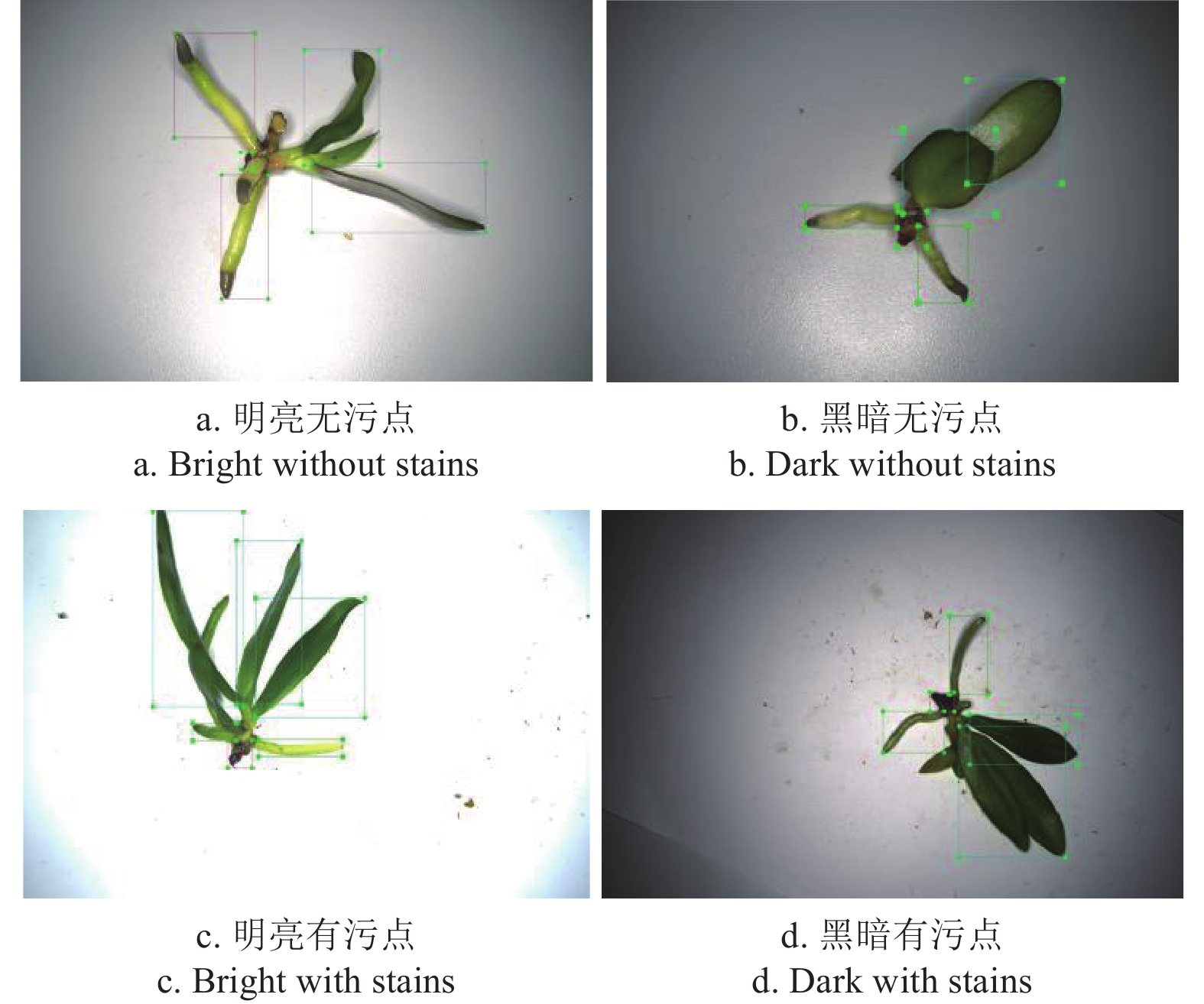

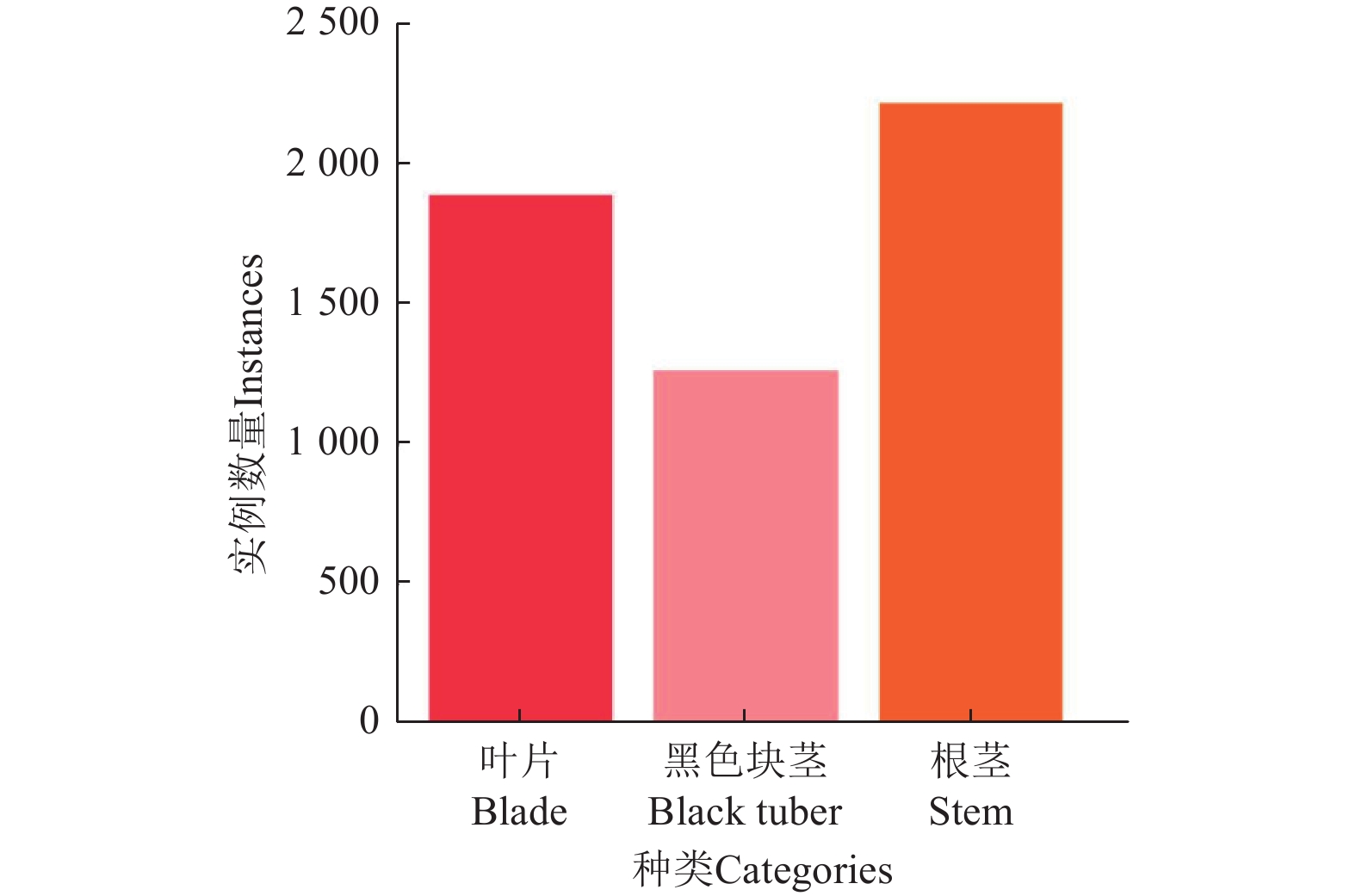

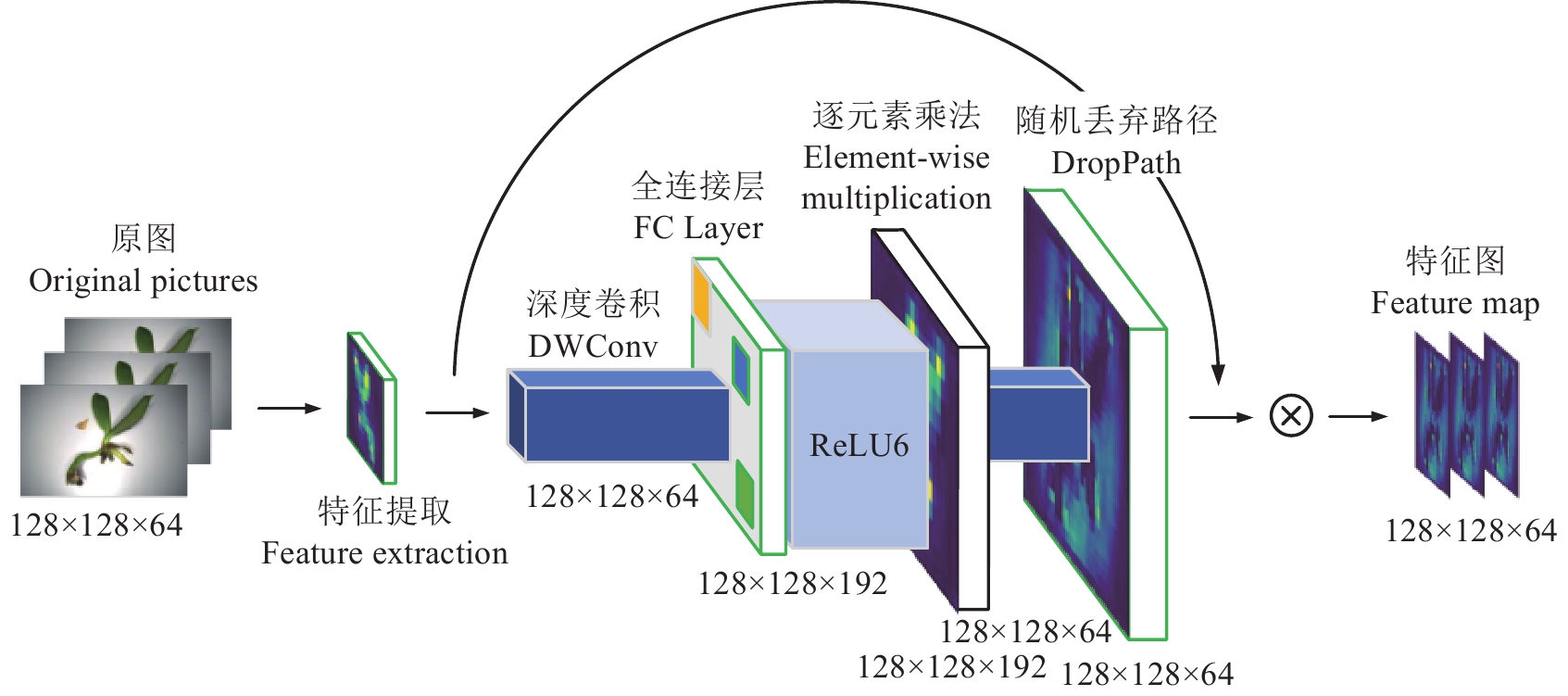

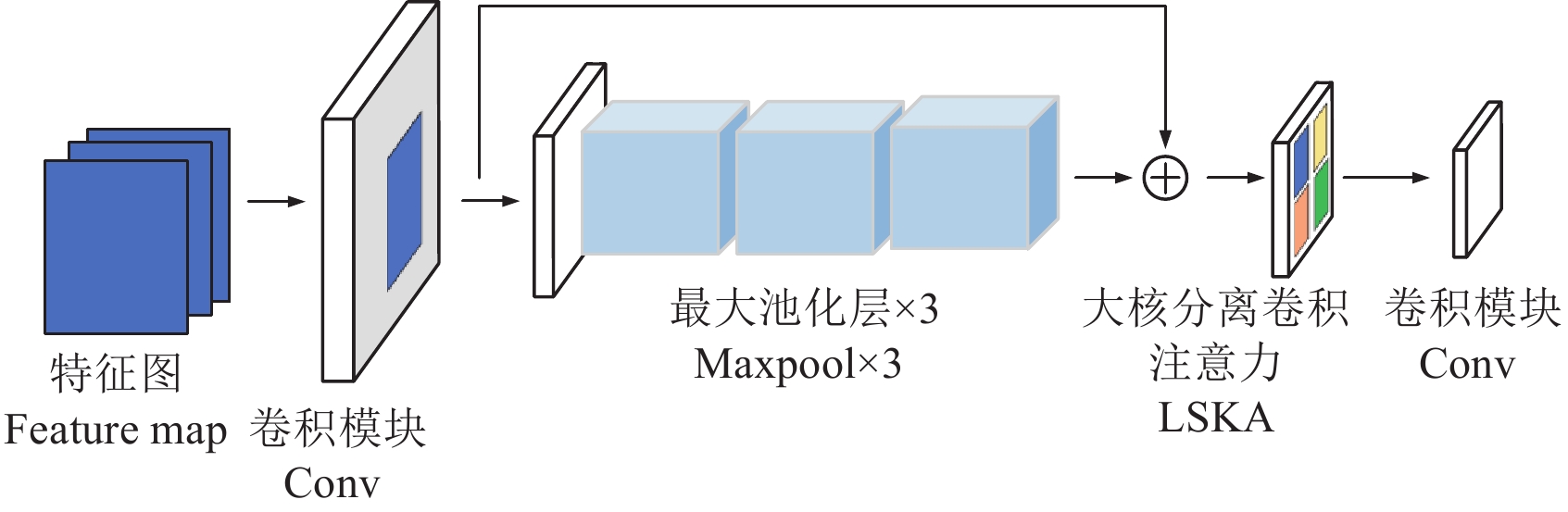

Abstract: This study aims to improve the efficiency of visual detection of seedling gripping points in the automated rapid propagation of Phalaenopsis orchids, particularly on edge devices with limited computing resources and storage capacity. A lightweight object detection algorithm, YOLOv8n-Aerolite, is proposed to balance high detection accuracy against low computational complexity, which makes it suitable for real-time use on hardware-constrained devices. StarNet is adopted as the backbone network for its efficient feature extraction. An SPPF_LSKA (Large-Separable-Kernel-Attention) layer is incorporated to further optimize the model. The large separable kernel lets the model process key visual features with minimal resource usage, substantially reducing computational demand while maintaining high detection precision, which is critical for edge devices. In the neck network, a new C2f_Star module combined with the StarBlock improves feature fusion and introduces multi-scale feature processing. This helps the model detect fine details, such as small and intricate seedling gripping points, and distinguish them in dense scenes where seedlings are closely spaced. The detection head is redesigned around a lightweight shared convolutional structure, Detect_LSCD (Lightweight Shared Convolutional Detection Head), which increases detection speed and reduces overall model size. Together, these optimizations allow the model to run efficiently in resource-limited environments. The improved YOLOv8n-Aerolite algorithm was tested on an image dataset of Phalaenopsis seedlings.
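The abstract does not give the internal layout of Detect_LSCD. The parameter count below is therefore only a hedged illustration of the general idea of a shared-convolution head, under stated assumptions:

- Stock YOLOv8 keeps a separate convolution branch for each feature level.
- The shared head is assumed to reuse one stack of k×k convolutions across all levels after the features are aligned to a common channel width.
- One learnable scale per level is assumed, a common design in shared heads.

The channel width, conv count and level count are hypothetical.

```python
def conv_params(c_in: int, c_out: int, k: int, bias: bool = True) -> int:
    """Weights (and biases) of a standard k x k convolution."""
    return c_in * c_out * k * k + (c_out if bias else 0)


def head_params(c: int, n_levels: int, n_convs: int = 2, k: int = 3, shared: bool = False) -> int:
    """Weights of a stack of n_convs (c -> c) convs: one copy per level, or one shared copy.

    When shared, one learnable scalar per level is added (assumed design)."""
    per_stack = n_convs * conv_params(c, c, k)
    copies = 1 if shared else n_levels
    scales = n_levels if shared else 0
    return copies * per_stack + scales


if __name__ == "__main__":
    separate = head_params(64, n_levels=3)
    shared = head_params(64, n_levels=3, shared=True)
    print(f"separate per-level stacks: {separate:,d} weights")
    print(f"shared stack + level scales: {shared:,d} weights")
```

Sharing cuts the stack's weights roughly by the number of levels. The per-level scale lets each level keep its own output range.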
Experimental results showed that the improved model achieved an average inference speed of 435.8 frames per second, which makes it well suited to real-time applications and a strong option for edge-based seedling detection. Its mAP0.5 reached 91.1%, and its precision on the small black-tuber targets, where the gripping points are located, reached 91.6%. Such accuracy on small targets indicates that the model suits tasks in which small objects must be located precisely. In addition, the weight file of the improved model was compressed to just 3.1 MB, which favors deployment on edge devices with constrained storage. A series of practical gripping experiments was conducted to further validate the algorithm. The gripping success rate was 78%, confirming the model's reliability in real-world deployment. The generalizability of YOLOv8n-Aerolite was also tested on a 3D-reconstructed dataset of Phalaenopsis seedlings, which likewise involves small-target detection. There, mAP0.5 increased by 1.6 percentage points compared with the original YOLOv8n model. This cross-dataset test indicates that the model remains robust and adaptable across detection tasks. In conclusion, YOLOv8n-Aerolite provides an efficient, accurate, and lightweight visual detection solution for automated crop propagation. The findings can serve as a reference for developing scalable automated technologies for small-scale crops such as Phalaenopsis orchids.
YOLOv8n-Aerolite also meets the needs of edge computing environments, supporting broader applications in agricultural automation.


Keywords:

- deep learning

- YOLOv8n

- Phalaenopsis seedlings

- lightweight

- detection speed

- small object detection


0. Introduction

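With the rapid development of e-commerce and logistics, demand for expandable polystyrene (EPS) has grown sharply, as it is valued for its light weight, high specific strength, and good cushioning performance[1]. However, EPS production consumes large amounts of non-renewable resources such as petroleum and releases large quantities of the greenhouse gas CO2. As the production and consumption of foamed plastics grow, so does the amount of foam waste, yet China's recycling rate for waste foamed plastics is low[2]. The waste is usually disposed of by simple methods such as direct incineration or landfilling. Incineration generates large amounts of harmful gases and pollutes the air. Landfilled foam hardly biodegrades underground and readily causes "white pollution" of soil and water, leading to reduced crop yields, groundwater contamination, and other environmental problems that endanger human and animal health[3]. Against this background, developing new degradable cushioning packaging materials has become a research focus.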

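China has abundant crop straw resources, with an output of about 864 million t in 2022[4]. If not utilized and treated, straw is easily wasted and causes environmental pollution. Conversion of straw into fertilizer is currently the main route of comprehensive straw utilization, accounting for more than 60% of it[5]. However, returning straw directly to the field tends to cause soil compaction, poor sowing quality, and damage from pests, diseases, and weeds. Indirect return and composted return are relatively complex and technically demanding[6]. Reed is a type of crop straw[7]. Its stems are tough, with long fibers and a high lignin content, and it is renewable, degradable, and non-polluting, so it was formerly an important raw material for papermaking[8]. However, papermaking wastewater directly pollutes natural waters, and as environmental protection has tightened, the reed papermaking industry has been guided to exit. Large amounts of discarded reed not only waste resources but also cause eutrophication, directly threatening the water quality, landscape, and ecology of lake areas[9]. Comprehensive utilization of agricultural straw in China still faces serious challenges, and utilization through multiple pathways urgently needs to increase. Crop straw is rich in cellulose, hemicellulose, and lignin, which saprophytic fungi can decompose, absorb, and use[10]. Inoculating lignocellulose-degrading fungi onto agricultural straw can therefore yield mycelium composite cushioning packaging materials with a useful compressive strength. This route, which aims to raise the utilization rate of agricultural waste and replace conventional petroleum-based foamed plastics, is becoming a focus of biomass material research and development.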

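Mycelium composite material (MCM) is a new biomass material that uses agricultural wastes (such as reed stalks, hemp stalks, and corn cobs) as the culture substrate. Inoculated macrofungal strains decompose and absorb the nutrients in the substrate, and their hyphae extend, branch, and fuse into a complex network that binds the loose substrate into a whole[11]. Mycelium composites have attracted wide attention in recent years because they raise the utilization rate of agricultural by-products, are degradable, require little energy to produce, and are environmentally friendly. Researchers in China and abroad have optimized material performance through strain species, substrate type, preparation process, and post-treatment, aiming to produce mycelium composites with better compressive, flexural, and cushioning properties. For example, JONES et al.[12] found through strain screening that Polyporus brumalis and Pleurotus djamor had high growth rates and hyphal densities, which improved the production efficiency of mycelium composites. ELSACKER et al.[13] studied how different substrates and particle sizes affect mycelium composite properties. They found that particle size affected material properties more strongly than substrate type, showing the importance of substrate particle size. CHEN Chenwei et al.[14-15] optimized the preparation process (inoculum amount, water addition, inoculation method, etc.) and raised the flexural and cushioning properties of the material to levels matching or exceeding those of EPS. AHMADI[16] investigated the effect of incubation period on cellulose-mycelium foams and found that at an incubation period of 25 d, compressive strength, energy absorption, and thermal stability improved significantly. APPELS et al.[17] hot-pressed mycelium composites as a post-treatment and obtained materials with relatively high tensile strength (0.24 MPa) and low strain at break (0.7%). These properties resemble those of natural materials such as wood and offer a new approach to post-treatment.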

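Although many researchers have screened and optimized strains and substrates, systematic evaluation and screening of both is lacking. Moreover, related studies optimize material properties from only one or two aspects and lack a continuous sequence of screening steps. This study therefore prepared mycelium composites from 10 fungal strains, 6 substrates, and their pairwise combinations. Using compressive strength as the evaluation index, it screened and optimized the strain, substrate, particle size, and inoculum amount in turn. Each optimization result was carried into the next experiment, producing materials of progressively higher compressive strength. This study aims to promote the application of mycelium composites in packaging and to provide a reference for improving the comprehensive utilization of agricultural wastes such as reed stalks.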

1. Materials and methods

1.1 Experimental materials and equipment

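The raw materials supplying nutrients for hyphal growth were noil, kenaf, and reed stalks, purchased from the Yuanjiang Experimental Station of the Institute of Bast Fiber Crops, Chinese Academy of Agricultural Sciences, and cotton seed shells, corn cobs, sawdust, and wheat bran, purchased from the market.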

Other materials: calcium oxide and glucose, purchased from Shanghai Macklin Biochemical Technology Co., Ltd.

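Instruments and equipment: clean bench; SPX-25OBX biochemical incubator (Tianjin Taisite Instrument Co., Ltd.); BSG-400 incubator (Medical Equipment Factory of Shanghai Boxun Industrial Co., Ltd.); ZQZY-B8ES shaking incubator (Shanghai Zhichu Instrument Co., Ltd.); GR110DR automatic autoclave (Zealway Instrument Inc., USA); SU-3500 scanning electron microscope (Hitachi, Ltd.); TX350e stereo microscope (Guangzhou Jinkasi Trading Co., Ltd.); GFL-70 forced-air drying oven (Tianjin Labotery Instrument Equipment Co., Ltd.); RMDW-5 electronic universal testing machine (Jinan Ruima Machinery Equipment Co., Ltd.).

1.2 Culture media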

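Malt extract agar medium: 30 g/L malt extract, 3 g/L soy peptone, and 15 g/L agar, dissolved in distilled water, made up to 1 L, and sterilized at 121 °C for 15 min.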

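Malt extract liquid medium: 20 g/L malt extract, 20 g/L glucose, 1 g/L tryptone, and 1 g/L fermentation extract, dissolved in distilled water, made up to 1 L, and sterilized at 121 °C for 15 min[18].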

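Solid medium for mycelium composites: base material, wheat bran, and calcium oxide powder were mixed at 80%, 18%, and 2%, respectively[19], to a dry-matter density of 0.15 g/cm³. Enough water was added to bring the substrate moisture content to 70%, and the mixture was stirred evenly and sterilized at 121 °C for 2 h.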
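The recipe fixes proportions rather than masses. The sketch below shows one way to turn it into a batch for a given mold volume. It assumes two things the text does not state: that the 70% moisture content is on a wet basis, so water / (water + dry matter) = 0.70, and that the ingredients are oven-dry. The mold volume is hypothetical.

```python
def substrate_recipe(volume_cm3: float, dry_density: float = 0.15, moisture: float = 0.70,
                     fractions=(("base", 0.80), ("wheat_bran", 0.18), ("CaO", 0.02))) -> dict:
    """Masses (g) for one mold: 80/18/2 dry mix at 0.15 g/cm3, water to 70 % wet-basis moisture."""
    if abs(sum(f for _, f in fractions) - 1.0) > 1e-9:
        raise ValueError("dry-mix fractions must sum to 1")
    dry_mass = dry_density * volume_cm3
    masses = {name: dry_mass * frac for name, frac in fractions}
    # Wet basis (assumed): water / (water + dry) = moisture  =>  water = dry * m / (1 - m)
    masses["water"] = dry_mass * moisture / (1.0 - moisture)
    return masses


if __name__ == "__main__":
    for name, grams in substrate_recipe(1000.0).items():  # hypothetical 1 L mold
        print(f"{name:>10}: {grams:7.1f} g")
```

If the moisture were instead meant on a dry basis, the water term would simply be `dry_mass * moisture`.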

1.3 Preparation of mycelium composites

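Following the method of CHEN Chenwei et al.[20] with slight modifications, the strains were inoculated onto malt extract agar and activated at 25 °C for 7 d. The activated mycelium was transferred into malt extract liquid medium and shaken at 180 r/min and 25 °C in the dark for 7 d to obtain liquid spawn. The liquid spawn was inoculated into the growth substrate at an inoculum amount of 6.5% and incubated in the dark at 28 °C for 15 d. The material was then demolded, turned bottom-up, and incubated in the dark at 28 °C for a further 2 d. Finally, it was dehydrated in an oven at 60 °C for 48 h to obtain the mycelium composite.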

1.4 Experimental methods

1.4.1 Screening of superior strains

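The inoculum amount was set at 6.5% and the incubation time at 15 d. Kenaf and cotton seed shells (KCS) with a particle size of 0.075–2.000 mm served as the combined growth substrate. Ten fungal strains were screened: Ganoderma resinaceum, Pleurotus ostreatus, Pleurotus sajor-caju, Trametes sanguinea, Pleurotus geesteranus, Flammulina filiformis, Agrocybe aegerita, Pholiota squamosa, Lentinus edodes, and Hericium erinaceus (all provided by the Institute of Bast Fiber Crops, Chinese Academy of Agricultural Sciences). The compressive properties of the specimens were characterized with a universal testing machine to select one superior strain suitable for producing high-strength mycelium composites.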

1.4.2 Screening of suitable substrates

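The inoculum amount was set at 6.5% and the incubation time at 15 d. The superior strain Ganoderma resinaceum was the test strain, and the substrate particle size was 0.075–2.000 mm. Seventeen substrates were screened. The 6 single substrates were noil (N), kenaf (K), reed straw (R), cotton seed shell (CS), corn cob (CC), and sawdust (S). The 11 combined substrates, each consisting of two raw materials at a mass ratio of 1:1, were:

- noil and cotton seed shell (NCS)
- noil and corn cob (NCC)
- noil and sawdust (NS)
- kenaf and reed straw (KR)
- kenaf and cotton seed shell (KCS)
- kenaf and corn cob (KCC)
- kenaf and sawdust (KS)
- reed straw and cotton seed shell (RCS)
- reed straw and corn cob (RCC)
- reed straw and sawdust (RS)
- cotton seed shell and corn cob (CSCC)

The compressive properties of the specimens were characterized with a universal testing machine to select the substrate that yields the strongest mycelium composites.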

1.4.3 Screening of substrate particle size

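The inoculum amount was set at 6.5% and the incubation time at 15 d. Ganoderma resinaceum was the test strain, and the suitable substrate, reed straw and corn cob (RCC), was the test substrate. Following the method of HOU Jiaxi[21], four particle-size groups were screened: 0.850–2.000 mm, 0.250–0.850 mm, 0.075–0.250 mm, and 0.075–2.000 mm. The compressive properties of the specimens were characterized with a universal testing machine to select a particle size suitable for producing high-strength mycelium composites.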

1.4.4 Optimization of inoculum amount

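The incubation time was set at 15 d. Ganoderma resinaceum was the test strain, RCC the test substrate, and the selected particle size of 0.075–2.000 mm the test particle size. Based on preliminary experiments, six inoculum amounts were tested: 3.5%, 4.5%, 5.5%, 6.5%, 7.5%, and 8.5%. The compressive properties of the specimens were characterized with a universal testing machine to select an inoculum amount suitable for producing high-strength mycelium composites.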

1.5 Performance testing of mycelium composites

1.5.1 Stereo microscopy

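A small sample was taken from the core of the mycelium composite and placed on the stage of the stereo microscope. The eyepiece and objective were adjusted to center the sample in the image, and the magnification and focus were adjusted until the image was sharp. The structural features of the sample and the distribution of hyphae were then observed and recorded.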

1.5.2 Scanning electron microscope (SEM) analysis

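A small sample was taken from a cross-section of the mycelium composite, fixed onto a copper stub with double-sided tape, and sputter-coated with gold for 1–2 min. Micrographs were acquired under vacuum at an accelerating voltage of 10 kV.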

1.5.3 Density

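Following the method of APPELS et al.[17], specimen mass was weighed with an electronic balance. Specimen length and width were measured with a vernier caliper to calculate the cross-sectional area, and the original thickness was also measured. Specimen density was calculated with Eq. (1):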

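$$\rho = \frac{m}{S\,T} \times 10^{3} \tag{1}$$

where ρ is the specimen density, g/cm³; m is the specimen mass, g; S is the cross-sectional area of the specimen, mm²; and T is the original thickness of the specimen, mm.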
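Eq. (1) contains a unit conversion: m/(S·T) is in g/mm³, and 1 cm³ = 10³ mm³, so the factor 10³ gives g/cm³. A minimal sketch follows, using hypothetical specimen dimensions.

```python
def specimen_density(mass_g: float, length_mm: float, width_mm: float, thickness_mm: float) -> float:
    """Eq. (1): rho = m / (S * T) * 1e3, with S = length * width in mm^2; returns g/cm^3."""
    area_mm2 = length_mm * width_mm
    return mass_g / (area_mm2 * thickness_mm) * 1e3


if __name__ == "__main__":
    # Hypothetical 100 mm x 100 mm x 10 mm specimen weighing 21.8 g
    print(f"{specimen_density(21.8, 100.0, 100.0, 10.0):.3f} g/cm^3")
```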

1.5.4 Compressive properties

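Compression tests followed method A of GB/T 8813-2020 Rigid cellular plastics: Determination of compression properties[22]. They were performed on a universal testing machine at (12±3) mm/min along the thickness direction of the mycelium composites. The compressive strength at 10% relative deformation and the energy absorption efficiency (η)–stress (σ) curve were then calculated.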

$$\sigma = \frac{F}{S} \tag{2}$$

where σ is the compressive stress, kPa; and F is the compressive load, N.

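$$\varepsilon = \frac{T - T_j}{T} \times 100\% \tag{3}$$

where ε is the compressive strain, %; and $T_j$ is the specimen thickness after testing, mm.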
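Eqs. (2) and (3) and the "compressive strength at 10% relative deformation" can be combined into one small routine. This is a sketch under several assumptions:

- The current thickness at each record is known, for example original thickness minus crosshead displacement.
- The 10% value is read by linear interpolation between recorded points.
- The load and thickness readings are hypothetical.

With F in N and S in mm², F/S is in N/mm² (MPa), so the code multiplies by 10³ to report kPa.

```python
from typing import Sequence


def stress_kpa(load_n: float, area_mm2: float) -> float:
    """Eq. (2) with unit conversion: N / mm^2 is MPa, times 1e3 gives kPa."""
    return load_n / area_mm2 * 1e3


def strain_percent(t0_mm: float, t_mm: float) -> float:
    """Eq. (3): compressive strain (%) from original thickness t0 and current thickness t."""
    return (t0_mm - t_mm) / t0_mm * 100.0


def stress_at_strain(strains: Sequence[float], stresses: Sequence[float], target: float = 10.0) -> float:
    """Linearly interpolate the stress at `target` % strain on a monotonic strain record."""
    points = list(zip(strains, stresses))
    for (e0, s0), (e1, s1) in zip(points, points[1:]):
        if e0 <= target <= e1:
            if e1 == e0:
                return s0
            return s0 + (s1 - s0) * (target - e0) / (e1 - e0)
    raise ValueError("target strain lies outside the recorded curve")


# Hypothetical record: 100 mm x 100 mm x 50 mm specimen, load (N) vs current thickness (mm)
area = 100.0 * 100.0
loads = [0.0, 500.0, 1000.0, 1500.0, 2000.0]
thicknesses = [50.0, 48.5, 47.0, 45.5, 44.0]

eps = [strain_percent(50.0, t) for t in thicknesses]
sig = [stress_kpa(f, area) for f in loads]

if __name__ == "__main__":
    print(f"compressive strength at 10 % strain: {stress_at_strain(eps, sig):.2f} kPa")
```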

Following the method of CHEN Chenwei et al.[14], the cushioning coefficient was calculated with Eq. (4).

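$$C = \frac{1}{\eta} = \frac{\sigma}{\int_{0}^{\varepsilon} \sigma \,\mathrm{d}\varepsilon} \tag{4}$$

where C is the cushioning coefficient and η is the energy absorption efficiency.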

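The stress (σ)–strain (ε) curve was converted into a cushioning coefficient (C)–stress (σ) curve. The smaller the cushioning coefficient, the more external energy the material absorbs per unit volume and the better its cushioning performance[23].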
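The conversion from a σ–ε record to a C–σ curve can be sketched numerically. The energy integral in Eq. (4) is accumulated with the trapezoidal rule; that choice of integration rule is an assumption, not stated in the method. Strain is taken as a fraction (0.10 rather than 10%) so that C is dimensionless. The minimum cushioning coefficient reported in the tables is the smallest C on this curve. As a check, a linearly elastic record σ = Eε gives C = 2/ε.

```python
from typing import List, Sequence, Tuple


def cushioning_curve(strain: Sequence[float], stress: Sequence[float]) -> List[Tuple[float, float]]:
    """Eq. (4): C = sigma / integral_0^eps sigma d(eps), energy by the trapezoidal rule.

    `strain` must be a fraction (not %) so that C is dimensionless; returns (stress, C) pairs."""
    energy = 0.0
    curve = []
    for i in range(1, len(strain)):
        energy += 0.5 * (stress[i] + stress[i - 1]) * (strain[i] - strain[i - 1])
        if energy > 0.0:
            curve.append((stress[i], stress[i] / energy))
    return curve


def min_cushioning_coefficient(strain: Sequence[float], stress: Sequence[float]) -> float:
    """Smallest C on the C-stress curve (the 'minimum cushioning coefficient')."""
    return min(c for _, c in cushioning_curve(strain, stress))


if __name__ == "__main__":
    # Hypothetical linearly elastic record, sigma = 1000 * eps (kPa), eps from 0 to 0.5
    strain = [i / 100 for i in range(51)]
    stress = [1000.0 * e for e in strain]
    print(f"minimum C = {min_cushioning_coefficient(strain, stress):.3f}")
```

For the linear toy record, C falls monotonically, so the minimum sits at the last point. For real cellular materials the minimum typically appears near the end of the stress plateau.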

1.5.5 Determination of hyphal growth rate

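On a clean bench, a plug was cut from the edge of the mycelium with a cork borer and transferred with an inoculation hook to the center of an MEA (malt extract agar) plate, with three replicates. The plates were incubated in the dark at 25 °C. Hyphal growth rate was measured by the cross method[24]. After inoculation, a cross was drawn on the bottom of the dish. When the hyphae emerged, the longest radius r1 was marked and measured at their tips. After 3 and 6 d of growth, the longest radius r2 was marked and measured at the hyphal margin. Hyphal growth rate was calculated with Eq. (5):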

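$$v = \frac{r_2 - r_1}{t} \tag{5}$$

where v is the growth rate of the strain, mm/d; $r_1$ is the longest radius at the hyphal tips at emergence, mm; $r_2$ is the longest radius at the hyphal margin after t d of growth, mm; and t is the growth time, d.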
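Eq. (5) is a single difference quotient. The text does not say how the three replicate plates (or the 3 d and 6 d readings) are combined into the values in Table 1. The sketch below assumes a simple mean over replicates, and its readings are hypothetical.

```python
from statistics import mean
from typing import Iterable, Tuple


def growth_rate(r1_mm: float, r2_mm: float, days: float) -> float:
    """Eq. (5): v = (r2 - r1) / t in mm/d."""
    if days <= 0:
        raise ValueError("growth time must be positive")
    return (r2_mm - r1_mm) / days


def mean_growth_rate(replicates: Iterable[Tuple[float, float, float]]) -> float:
    """Average Eq. (5) over replicate plates given as (r1, r2, t); averaging is an assumption."""
    return mean(growth_rate(r1, r2, t) for r1, r2, t in replicates)


if __name__ == "__main__":
    plates = [(5.0, 29.0, 3.0), (4.0, 28.0, 3.0), (6.0, 27.0, 3.0)]  # hypothetical readings
    print(f"mean growth rate: {mean_growth_rate(plates):.2f} mm/d")
```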

1.6 Data analysis

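Figures were plotted with Origin 2024. Analysis of variance was performed in SPSS 19, and differences were assessed with Duncan's multiple range test; P<0.05 indicates a significant difference.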
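The analysis of variance step can be illustrated with the one-way ANOVA F statistic. This is a minimal standard-library sketch, not the SPSS procedure. It returns F and its degrees of freedom but no p-value, which would need the F distribution (for example, `scipy.stats.f_oneway` returns both). Duncan's multiple range test, which needs studentized-range critical values, is not reproduced here.

```python
from statistics import mean
from typing import Sequence, Tuple


def one_way_anova(groups: Sequence[Sequence[float]]) -> Tuple[float, int, int]:
    """Return (F, df_between, df_within) for a one-way ANOVA over the given groups."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    grand = sum(sum(g) for g in groups) / n
    ss_between = sum(len(g) * (mean(g) - grand) ** 2 for g in groups)
    ss_within = sum(sum((x - mean(g)) ** 2 for x in g) for g in groups)
    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


if __name__ == "__main__":
    # Hypothetical triplicate compressive strengths (kPa) for three groups
    f_stat, dfb, dfw = one_way_anova([[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]])
    print(f"F({dfb}, {dfw}) = {f_stat:.2f}")
```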

2. Results and analysis

2.1 Screening of superior strains

2.1.1 Compressive properties

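The density, compressive strength, and minimum cushioning coefficient of mycelium composites prepared with different strains were analyzed. As Table 1 shows, the Ganoderma resinaceum group had the highest density (0.196 g/cm³) and the Hericium erinaceus group the lowest (0.161 g/cm³). The densities of all mycelium composites were significantly (P<0.05) higher than that of the EPS board.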

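Table 1 Compressive properties and growth rates of mycelium composites produced from different fungal strains

| Fungal strain | Density/(g·cm⁻³) | Compressive strength/kPa | Minimum cushioning coefficient | Growth rate/(mm·d⁻¹) |
|---|---|---|---|---|
| EPS | 0.013±0.000f | 73.01±6.53c | 4.85±0.18d | - |
| Ganoderma resinaceum | 0.196±0.011a | 88.59±2.19ab | 9.06±1.35bc | 7.94±0.43a |
| Pleurotus ostreatus | 0.188±0.003ab | 38.77±3.18e | 8.92±0.12bc | 5.69±0.19c |
| Pleurotus sajor-caju | 0.192±0.005ab | 48.8±11.39de | 8.93±0.18bc | 5.11±0.31cd |
| Trametes sanguinea | 0.190±0.002ab | 96.43±4.03a | 9.55±0.4b | 6.33±0.15b |
| Pleurotus geesteranus | 0.169±0.004cde | 41.76±6.62e | 8.88±0.39bc | 6.51±0.59b |
| Flammulina filiformis | 0.183±0.005b | 75.21±4.31bc | 8.13±0.29c | 5.33±0.38c |
| Agrocybe aegerita | 0.181±0.001bc | 57.65±11.04d | 9.3±0.05b | 4.65±0.16d |
| Pholiota squamosa | 0.180±0.016bcd | 16.19±2.08g | 10.69±0.95a | 2.47±0.35f |
| Lentinus edodes | 0.169±0.008de | 36.34±14.83ef | 8.77±0.68bc | 3.01±0.02e |
| Hericium erinaceus | 0.161±0.005e | 23.53±1.87fg | 9.23±0.3b | 3.03±0.03e |

Note: EPS is expandable polystyrene. All values are expressed as mean ± standard deviation. Different lowercase letters in the same column indicate significant differences (P<0.05). Compressive strength is measured at 10% deformation. The same below.

At 10% relative deformation, the Ganoderma resinaceum and Trametes sanguinea groups had the highest compressive strengths (88.59 and 96.43 kPa). These values were significantly (P<0.05) higher than those of the other strains except Flammulina filiformis, and 1.21 and 1.32 times that of the EPS board, respectively. The minimum cushioning coefficient of the EPS board was significantly lower than those of all mycelium composites tested (P<0.05). Among strains, the only difference noted was that the Flammulina filiformis group had a significantly lower minimum cushioning coefficient than the Pholiota squamosa and Agrocybe aegerita groups; otherwise, strain had no significant effect on this coefficient. These results show that strain species significantly affected the density, compressive strength, and cushioning performance of mycelium composites. This may be because different strains have different lignocellulose-degrading enzyme systems. The resulting differences in lignocellulose degradation would in turn affect material density and mechanical properties[10].

Hyphal growth rate is another factor affecting compressive performance. Table 1 shows that Ganoderma resinaceum grew significantly (P<0.05) faster on MEA plates than the other strains. Fast-growing strains extend and branch into more hyphae and build a more complex hyphal network among substrate particles. This strengthens the bond between hyphae and substrate and gives the material higher compressive strength under load, which suggests a positive correlation between hyphal growth rate and compressive performance. LI Xiang[25] prepared mycelium composites from seven strains: king oyster mushroom (Pleurotus eryngii), jade wood ear, Pleurotus geesteranus, shiitake, Yuanmo, Bailinggu, and Jigu. The fastest-growing strain, king oyster mushroom (1.21 cm/d), produced the material with the best compressive performance, consistent with this study. In summary, the Ganoderma resinaceum and Trametes sanguinea groups gave the highest compressive strengths, but the red hyphae and spores of Trametes sanguinea tend to contaminate the experimental environment. Ganoderma resinaceum was therefore selected as the superior strain for subsequent experiments.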

2.1.2 Appearance and microstructure

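Based on the significance grouping in Table 1, strains with compressive strength labeled a–b, c–d, and e–f were assigned to high, medium, and low levels, respectively. Ganoderma resinaceum, Pleurotus sajor-caju, and Hericium erinaceus were chosen as representatives of the three levels. The appearance, stereo-microscopic structure, and SEM microstructure of these three materials were analyzed to clarify how appearance and microstructure relate to compressive performance. The sample appearance (Fig. 1) shows that the surface mycelial layer of the Ganoderma resinaceum group was dense, thick, and complete. It had a foam-like texture and alternating white and yellowish-brown patches, possibly owing to a Maillard reaction between sugars and proteins in the fungal cell walls and plant material during drying[17]. The Pleurotus sajor-caju group was covered almost entirely by a dense, thick white mycelial layer, also with a foam-like texture. By contrast, the Hericium erinaceus group had a thin, incomplete mycelial layer that exposed loose brown substrate. During molding, surface hyphae receive more oxygen and can form a dense protective mycelial layer[26]. Under compression, this protective layer lets the material bear a higher load at the same strain, which explains the high and medium compressive strengths of the Ganoderma resinaceum and Pleurotus sajor-caju groups. The Hericium erinaceus group, however, lacked a complete surface layer, so its loose substrate particles crumbled and fell off easily, giving poor shape stability and weak load-bearing capacity.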

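The stereo-microscopic and SEM images in Fig. 1 show that the Ganoderma resinaceum group had the densest hyphae. The gaps between its substrate particles were almost filled with white hyphae, and its hyphal network was denser and more complex than those of the other two groups. The Pleurotus sajor-caju group had sparser hyphae, forming thin networks between some substrate particles. The Hericium erinaceus group had only a few hyphae attached to the substrate, with no network linking the particles. This may be attributed to the faster growth of Ganoderma resinaceum, whose hyphal network spreads more widely and branches more within the same incubation time. During growth, hyphae overlap and bond at their junctions, and as they aggregate and intertwine they form a complex three-dimensional network. Given the denser network and higher compressive strength of the Ganoderma resinaceum group, network density may be positively correlated with compressive strength[27].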

2.2 Screening of suitable substrates

2.2.1 Compressive properties of mycelium composites with different substrates

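The density, compressive strength, and minimum cushioning coefficient of mycelium composites prepared with different substrates were analyzed. Table 2 shows that the NCS group had the highest density (0.262 g/cm³) and the K group the lowest (0.114 g/cm³). The densities of all mycelium composites were significantly (P<0.05) higher than that of the EPS board. At 10% relative deformation, the RCC group's compressive strength (140.56 kPa) was significantly (P<0.05) higher than those of the other substrates and 5.97 times that of the EPS board. The minimum cushioning coefficient of the EPS board was significantly lower than those of the mycelium composites (P<0.05). Among substrates, the NS, KS, and CC groups had significantly lower minimum cushioning coefficients than the NCS, NCC, and S groups; otherwise, substrate had no significant effect on this coefficient. These results show that substrate type significantly affected the density, compressive strength, and cushioning performance of mycelium composites. This may be because substrates differ in source, type, plant species, and tissue maturity. These factors affect the contents and ratios of cellulose, hemicellulose, and lignin, which influence hyphal growth and hence the compressive performance of the composites[10]. PENG et al.[28] prepared mycelium composites from five agricultural wastes (rice straw, sugarcane bagasse, coconut coir pith, sawdust, and corn stover) and characterized their properties. Material density was positively correlated with compressive strength, and the sawdust group's compressive strength (456.70 kPa) was significantly higher than that of the corn stover group (270.31 kPa), similar to this study. Current studies mostly screen and optimize substrates such as wood chips, sawdust, cotton seed shells, corn stover, rice straw, and bagasse[10,21,26-27]. Few have made mycelium composites from reed stalks, although doing so could broaden the utilization of reed by-products, improve lake and marsh ecosystems, and raise regional economic returns. In summary, owing to its high compressive strength, RCC was selected as the substrate for subsequent experiments.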

Table 2 Compressive properties of mycelium composites produced from different substrates

| Substrate | Density/(g·cm⁻³) | Compressive strength/kPa | Minimum cushioning coefficient |
|---|---|---|---|
| EPS | 0.006±0.000h | 23.54±10.09i | 5.53±0.04c |
| NCS | 0.262±0.012a | 65.20±11.71ef | 8.65±0.45a |
| NCC | 0.209±0.035abcde | 76.34±5.39de | 8.58±1.55a |
| NS | 0.222±0.009abcd | 109.38±14.85b | 7.17±0.57b |
| KR | 0.157±0.042efg | 80.39±8.12de | 8.12±0.23ab |
| KCS | 0.183±0.017bcdef | 82.92±5.04cd | 7.9±0.53ab |
| KCC | 0.166±0.006cdefg | 73.37±7.41de | 7.6±1.28ab |
| KS | 0.175±0.008cdefg | 85.66±13.70cd | 7.07±0.49b |
| RCS | 0.232±0.027abc | 66.99±6.91ef | 7.66±0.28ab |
| RCC | 0.232±0.026abc | 140.56±20.59a | 8.21±0.42ab |
| RS | 0.202±0.017abcde | 81.07±9.57de | 8.17±0.59ab |
| CSCC | 0.246±0.016ab | 96.96±7.08bc | 8.04±0.66ab |
| N | 0.132±0.070fg | 65.49±9.43ef | 7.9±0.98ab |
| K | 0.114±0.049g | 43.77±7.92gh | 8±0.59ab |
| R | 0.134±0.071fg | 37.88±7.41h | 8.03±1.17ab |
| CS | 0.186±0.115bcdef | 45.55±6.09gh | 8.01±0.19ab |
| CC | 0.156±0.087defg | 73.43±1.61de | 7.16±0.56b |
| S | 0.147±0.086efg | 52.99±4.07fg | 8.55±1.03a |

Note: NCS is noil and cotton seed shell, NCC is noil and corn cob, NS is noil and sawdust, KR is kenaf and reed straw, KCS is kenaf and cotton seed shell, KCC is kenaf and corn cob, KS is kenaf and sawdust, RCS is reed straw and cotton seed shell, RCC is reed straw and corn cob, RS is reed straw and sawdust, CSCC is cotton seed shell and corn cob, N is noil, K is kenaf, R is reed straw, CS is cotton seed shell, CC is corn cob, and S is sawdust. The same below.

2.2.2 Appearance and microstructure of mycelium composites with different substrates

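Based on the significance grouping in Table 2, substrates with compressive strength labeled a–c, d–e, and f–h were assigned to high, medium, and low levels, respectively. The RCC, CC, and R groups were chosen as representatives of the three levels. The appearance, stereo-microscopic structure, and SEM microstructure of these three materials were analyzed to clarify how appearance and microstructure relate to compressive performance. The sample appearance (Fig. 2) shows that all three materials formed a dense, complete, white to tan protective mycelial layer on the surface. The RCC group's layer was the thickest and most elastic of the three, the CC group's layer was the next thickest, and the R group's layer was thinner and rough to the touch.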

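The stereo-microscopic images in Fig. 2 show that the RCC group had a high hyphal density, with thick white hyphal clumps between substrate particles. The R group had the next highest hyphal density, with thin hyphae covering the substrate like mist. The CC group had a low hyphal density, with hyphae attached to the substrate as dots or lines. Different substrates produce differences in the morphology and mechanical properties of mycelium composites. Substrates of different plant species, types, and origins change the contents and ratios of polysaccharides, lipids, chitin, and other components in the mycelium[10]. This affects hyphal growth and network formation, which shows up in the performance tests[29].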

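The SEM images in Fig. 2 show that the RCC group formed a dense internal hyphal network, the R group a less dense one, and the CC group the least dense. Although the CC group struggled to form a dense network, it developed a thick surface mycelial layer, indicating that corn cob supplies abundant nutrients and promotes good hyphal growth. Although the R group's surface layer was thin, reed straw provides anchor points for hyphal fusion and linkage, allowing a complex network to form. Combining reed straw (R) and corn cob (CC) therefore exploits the advantages of each, and the combined substrate had a significantly higher compressive strength than either single substrate.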

2.3 Screening of substrate particle size

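The density, compressive strength, and minimum cushioning coefficient of mycelium composites prepared with different substrate particle sizes were analyzed. Table 3 shows that the 0.075–2.000 mm group had the highest density (0.239 g/cm³) and the 0.850–2.000 mm group the lowest (0.175 g/cm³). At 10% relative deformation, the 0.075–2.000 mm group's compressive strength (189.44 kPa) was significantly (P<0.05) higher than those of the other groups. Its minimum cushioning coefficient was significantly lower than those of the 0.850–2.000 and 0.250–0.850 mm groups. These results show that substrate particle size significantly affected the density, compressive strength, and minimum cushioning coefficient of mycelium composites. Density and compressive strength increased as particle size decreased, peaking in the 0.075–2.000 mm group. Density rose together with compressive strength, similar to the relationship between EPS density and compressive strength[30]. The minimum cushioning coefficient decreased as particle size decreased, reaching its lowest value in the 0.075–2.000 mm group, which therefore cushioned external forces best.

A possible explanation is as follows. With large particles, the gaps between particles are large and the hyphae can hardly fill these pores during growth, so the material's ability to support external loads is limited. As particle size decreases, the gaps shrink and the specific surface area of the particles grows. The hyphae can then take up more nutrients and spread through and fill more pores, which improves compressive performance. However, as the hyphae keep growing, the smaller pores fill quickly and the air inside the material drops rapidly, restricting further hyphal growth. The broad size range of the 0.075–2.000 mm group both supplies enough nutrients and retains more air, so the hyphae can grow rapidly and fill the pores, further improving compressive performance. HOU Jiaxi[21] found through particle-size screening that porous mycelium material made from 0.250–0.850 mm corn stover had a relatively high compressive strength (93.02 kPa), whereas the mycelium composite at 0.075–2.000 mm in this study was 51% stronger. In summary, the 0.075–2.000 mm group was selected for subsequent experiments owing to its high compressive strength and good cushioning performance.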

Table 3 Compressive properties of mycelium composites produced from different substrate particle sizes

| Particle size of substrate/mm | Density/(g·cm⁻³) | Compressive strength/kPa | Minimum cushioning coefficient |
|---|---|---|---|
| 0.850–2.000 | 0.175±0.007c | 61.01±7.47c | 9.78±0.58a |
| 0.250–0.850 | 0.176±0.005c | 84.26±16.37c | 9.48±0.47a |
| 0.075–0.250 | 0.197±0.008b | 129.12±37.04b | 8.99±1.10ab |
| 0.075–2.000 | 0.239±0.005a | 189.44±31.54a | 8.15±0.36b |

2.4 Optimization of inoculum amount

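The density, compressive strength, and minimum cushioning coefficient of mycelium composites prepared with different inoculum amounts were analyzed. Table 4 shows that the 7.5% group had the highest density (0.248 g/cm³) and the 5.5% group the lowest (0.210 g/cm³). Overall, density tended to rise with increasing inoculum amount. At 10% relative deformation, the 7.5% group had the highest compressive strength (185.22 kPa). As the inoculum amount increased, compressive strength rose, fell, rose again, and fell again. The 7.5% group also had the lowest minimum cushioning coefficient. The 3.5%, 7.5%, and 8.5% groups had significantly (P<0.05) lower minimum cushioning coefficients than the 4.5% group, while the other groups did not differ significantly (P>0.05).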

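Table 4 Density and compressive properties of mycelium composites at different inoculum amounts

| Inoculum amount/% | Density/(g·cm⁻³) | Compressive strength/kPa | Minimum cushioning coefficient |
|---|---|---|---|
| 3.5 | 0.222±0.005bc | 146.08±26.91bc | 8.52±1.08b |
| 4.5 | 0.222±0.009bc | 161.80±13.96ab | 11.75±2.43a |
| 5.5 | 0.210±0.016c | 116.30±21.74c | 9.55±1.22ab |
| 6.5 | 0.217±0.006bc | 123.86±16.32c | 9.32±2.7ab |
| 7.5 | 0.248±0.022a | 185.22±20.09a | 8.41±0.94b |
| 8.5 | 0.233±0.017ab | 143.76±23.66bc | 8.81±1.66b |

At a low inoculum amount (3.5%), the limited spawn formed only a sparse hyphal network, so the substrate bore most of the load and compressive strength was low. As the inoculum amount increased (4.5% and 7.5%), the extra spawn extended the hyphal growth range and filled more substrate pores, building a dense hyphal network. The mycelium and substrate then jointly resisted external loads, and compressive strength reached a high level. When the inoculum amount rose to 8.5%, however, the excess spawn made the hyphae compete for nutrients and air. Growth of part of the mycelium was restricted and network density fell, reducing compressive performance. CHEN Chenwei et al.[14] studied the effect of inoculum amount (5%, 10%, and 15%) on mycelium material properties. Flexural strength and cushioning performance first increased and then decreased with increasing inoculum amount, peaking at 10%, consistent with this study.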

In summary, the 7.5% inoculum amount was selected for subsequent experiments owing to its high compressive strength.

2.5 Comparison of mycelium composites with EPS

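The screening and optimization experiments above yielded a set of optimal process parameters for preparing mycelium composites: Ganoderma resinaceum as the test strain, reed straw and corn cob (RCC) as the combined substrate, a particle size of 0.075–2.000 mm, and an inoculum amount of 7.5%. These parameters were then used to compare the compressive performance of mycelium composites with that of EPS boards. Ganoderma resinaceum was inoculated onto the RCC substrate, and the mycelium composite was produced through incubation and drying. Four EPS boards of different densities served as controls, and compressive properties were characterized with a universal testing machine. Table 5 shows that the density, compressive strength, and minimum cushioning coefficient of the mycelium composite were all significantly (P<0.05) higher than those of the four EPS boards. The cushioning performance differed little among EPS boards of different densities.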

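Table 5 Compressive properties of mycelium composites and EPS at optimal process parameters

| Experimental group | Density/(g·cm⁻³) | Compressive strength/kPa | Minimum cushioning coefficient |
|---|---|---|---|
| Mycelium composite | 0.218±0.008a | 190.99±40.49a | 7.85±0.65a |
| 7.5 kg·m⁻³ EPS | 0.008±0.000c | 33.54±0.75c | 5.53±0.04b |
| 10.0 kg·m⁻³ EPS | 0.010±0.000bc | 52.18±0.85c | 5.12±0.05b |
| 12.0 kg·m⁻³ EPS | 0.012±0.000bc | 65.8±2.42bc | 5.15±0.29b |
| 18.0 kg·m⁻³ EPS | 0.018±0.000b | 100.77±1.79b | 5.01±0.21b |

These comparisons show that the mycelium composite has a higher compressive strength than EPS. Combined with its biodegradability, low production energy, and environmental friendliness, this gives it the potential to replace EPS. However, the material is still relatively dense and poorly water-resistant, which limits its wider use and remains an urgent problem for the field. Researchers have also tried to develop mycelium composites into building materials[25], waterproof materials[31], filter materials[16], sound-absorbing materials[32], interior materials[25], and thermal insulation materials[33]. These efforts extend the applications of mycelium composites and suggest new directions for future research.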

3. Conclusions

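This study screened and optimized the strain, substrate, substrate particle size, and inoculum amount, using compressive strength as the evaluation index, and prepared a series of mycelium composites. Comparing their structures and properties identified the best strain-substrate combination, particle size, and inoculum amount. The main conclusions are as follows: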

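1) The compressive strength of mycelium composites is related to their appearance and microstructure. The higher the compressive strength, the denser and more complete the surface mycelial layer, and the denser and more complex the hyphal network. Combining reed straw (R) and corn cob (CC) into a composite substrate (RCC) yields mycelium composites with good compressive performance by exploiting the advantages of both: corn cob supplies ample nutrients, and reed straw promotes hyphal network formation.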

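2) The screening and optimization results show that mycelium composites with high compressive strength and good cushioning performance can be produced with Ganoderma resinaceum as the strain and reed straw plus corn cob (RCC) as the substrate, at a particle size of 0.075–2.000 mm and an inoculum amount of 7.5%. The compressive strength of the composite prepared with these optimal parameters reached 190.99 kPa, 1.90 times that of 18.0 kg/m³ expandable polystyrene (EPS). Its density and minimum cushioning coefficient were significantly (P<0.05) higher than those of EPS boards of different densities, so further research is needed to reduce the density of mycelium composites and improve their cushioning performance.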


Table 1 Training parameter settings

| Parameter | Value |
|---|---|
| Image size | 640 |
| Batch size | 8 |
| Epochs | 150 |
| Learning rate | 0.01 |
| Momentum | 0.937 |
| Weight decay | 0.0005 |
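The settings in Table 1 map naturally onto Ultralytics training arguments. The sketch below is an assumption, not the authors' script. The argument names `imgsz`, `batch`, `epochs`, `lr0`, `momentum`, `weight_decay` and `optimizer` are real Ultralytics `train` arguments, but the following are assumed:

- The files `yolov8n-aerolite.yaml` and `phalaenopsis.yaml` are hypothetical. The model file would also need the custom StarNet, SPPF_LSKA, C2f_Star and Detect_LSCD modules registered.
- `optimizer="SGD"` is assumed because Ultralytics' default `optimizer="auto"` ignores the given `lr0` and `momentum`.

```python
# Hypothetical mapping of Table 1 onto Ultralytics-style training arguments.
TRAIN_ARGS = {
    "imgsz": 640,            # image size (pixels)
    "batch": 8,              # batch size
    "epochs": 150,           # training epochs
    "lr0": 0.01,             # initial learning rate
    "momentum": 0.937,       # SGD momentum
    "weight_decay": 0.0005,  # optimizer weight decay
    "optimizer": "SGD",      # assumption: keeps lr0/momentum from being overridden by "auto"
}


def launch(model_cfg: str = "yolov8n-aerolite.yaml", data_yaml: str = "phalaenopsis.yaml") -> None:
    """Start training (hypothetical file names); requires `pip install ultralytics`."""
    from ultralytics import YOLO  # imported lazily so TRAIN_ARGS is usable without the package

    YOLO(model_cfg).train(data=data_yaml, **TRAIN_ARGS)
```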

Table 2 Results of ablation experiments

| Test No. | Backbone improvement | Neck improvement | Head improvement | mAP0.5/% | Params/M | FLOPs/G | Weights file size/MB | Inference speed FPS/(frames·s⁻¹) |
|---|---|---|---|---|---|---|---|---|
| 1 | − | − | − | 90.4 | 3.006 | 8.1 | 6.2 | 182.3 |
| 2 | √ | − | − | 90.6 | 2.284 | 6.5 | 4.8 | 116.2 |
| 3 | − | √ | − | 91.1 | 2.806 | 7.7 | 5.9 | 171.2 |
| 4 | − | − | √ | 89.8 | 2.362 | 6.5 | 4.9 | 352.7 |
| 5 | √ | √ | − | 89.6 | 2.084 | 6.1 | 4.4 | 150.2 |
| 6 | √ | − | √ | 90.5 | 1.641 | 5.0 | 3.5 | 390.0 |
| 7 | − | √ | √ | 90.1 | 2.162 | 6.1 | 4.5 | 106.0 |
| 8 | √ | √ | √ | 91.1 | 1.440 | 4.6 | 3.1 | 435.8 |

Note: √ indicates that the module is used and − indicates that it is not; the baseline model is YOLOv8n. The same below.
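Rows 1 (baseline YOLOv8n) and 8 (full YOLOv8n-Aerolite) of Table 2 can be condensed into relative changes. The figures below are derived only from those two rows. mAP0.5 is reported as a difference in percentage points rather than a relative percentage. FPS is hardware-dependent, and the test hardware is not given in this section.

```python
BASELINE = {"mAP50_pct": 90.4, "params_M": 3.006, "flops_G": 8.1, "weights_MB": 6.2, "fps": 182.3}
AEROLITE = {"mAP50_pct": 91.1, "params_M": 1.440, "flops_G": 4.6, "weights_MB": 3.1, "fps": 435.8}


def relative_change_pct(before: float, after: float) -> float:
    """Relative change in percent (negative means a reduction)."""
    return (after - before) / before * 100.0


def summarize(base: dict, new: dict) -> dict:
    """Relative changes for cost metrics; absolute percentage-point change for mAP0.5."""
    out = {k: relative_change_pct(base[k], new[k]) for k in base if k != "mAP50_pct"}
    out["mAP50_pp"] = new["mAP50_pct"] - base["mAP50_pct"]
    return out


if __name__ == "__main__":
    for name, value in summarize(BASELINE, AEROLITE).items():
        print(f"{name:>10}: {value:+.2f}")
```

On these rows, parameters fall by about 52%, FLOPs by about 43% and the weight file by 50%, while throughput rises by about 139% (roughly 2.4× the baseline FPS).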

Table 3 Hyperparameter comparison experiment results

| Test No. | Learning rate | Momentum | Weight decay | mAP0.5/% | Inference speed FPS/(frames·s⁻¹) |
|---|---|---|---|---|---|
| 1 | 0.005 | 0.937 | 0.0005 | 90.2 | 367.2 |
| 2 | 0.020 | 0.937 | 0.0005 | 89.4 | 233.1 |
| 3 | 0.010 | 0.920 | 0.0005 | 91.0 | 75.6 |
| 4 | 0.010 | 0.950 | 0.0005 | 90.5 | 79.1 |
| 5 | 0.010 | 0.937 | 0.0002 | 90.3 | 81.2 |
| 6 | 0.010 | 0.937 | 0.0010 | 90.2 | 236.4 |
| 7 | 0.010 | 0.937 | 0.0005 | 91.1 | 435.8 |

Table 4 Backbone network comparison experiment results

Table 5 Head network comparison experiment results

| Head network | mAP0.5/% | Precision, leaf/% | Precision, radicle/% | Precision, black tuber/% | Inference speed FPS/(frames·s⁻¹) |
|---|---|---|---|---|---|
| Detect_DyHead[28] | 90.1 | 98.4 | 82.4 | 89.6 | 118.6 |
| Detect_AFPN[29] | 89.8 | 97.5 | 81.9 | 89.6 | 72.1 |
| Detect_Aux[30] | 89.4 | 99.2 | 81.5 | 87.6 | 124.8 |
| Detect_Seam[31] | 89.5 | 97.5 | 89.3 | 81.7 | 143.2 |
| Detect_LSCD | 91.1 | 97.1 | 84.5 | 91.6 | 435.8 |

Table 6 Comparison of detection performance of multiple models

| Model | Precision, leaf/% | Precision, radicle/% | Precision, black tuber/% | mAP0.5/% | Recall/% | Weights file size/MB | Inference speed FPS/(frames·s⁻¹) | Params/M | FLOPs/G |
|---|---|---|---|---|---|---|---|---|---|
| YOLOv3 | 97.9 | 71.3 | 84.4 | 84.4 | 79.5 | 207.8 | 122.3 | 103.732 | 282.2 |
| YOLOv5 | 99.2 | 73.8 | 83.5 | 85.5 | 81.7 | 5.3 | 277.3 | 2.504 | 7.1 |
| YOLOv6 | 98.8 | 71.7 | 81.8 | 84.1 | 80.2 | 8.7 | 263.2 | 4.234 | 11.8 |
| Faster-RCNN | 98.2 | 73.1 | 86.9 | 86.0 | 58.9 | 315.1 | 1.1 | 41.761 | 196.7 |
| YOLOv8n | 98.7 | 88.8 | 83.7 | 90.4 | 86.1 | 6.2 | 182.3 | 3.006 | 8.1 |
| YOLOv8n-ghost | 97.6 | 81.4 | 88.5 | 89.2 | 85.7 | 3.8 | 80.6 | 1.714 | 5.0 |
| YOLOv5-ShuffleNet | 96.9 | 80.4 | 75.5 | 84.3 | 79.0 | 3.4 | 344.8 | 1.571 | 4.6 |
| YOLOv9 | 99.4 | 70.9 | 85.7 | 85.3 | 84.1 | 102.8 | 206.7 | 45.372 | 207.9 |
| YOLOv8n-Aerolite | 97.3 | 84.3 | 91.6 | 91.1 | 86.4 | 3.1 | 435.8 | 1.440 | 4.6 |

Table 7 Comparison experiment on the three-dimensional reconstruction dataset

| Model | mAP0.5/% | Recall/% | Inference speed FPS/(frames·s⁻¹) |
|---|---|---|---|
| YOLOv8n | 89.2 | 84.6 | 206.4 |
| YOLOv8n-Aerolite | 90.8 | 86.0 | 291.2 |

Table 8 Comparison of gripping success between calibration control and deployment of the proposed detection model

| Gripping strategy | Success rate/% |
|---|---|
| Calibration control | 32 |
| Model deployment | 78 |

[1] LI C, DONG N, ZHAO Y M, et al. A review for the breeding of orchids: Current achievements and prospects[J]. Horticultural Plant Journal, 2021, 7(5): 380-392. doi: 10.1016/j.hpj.2021.02.006

[2] MA Zhanlin, WEN Feng, ZHOU Yingjie, et al. Regional winter wheat yield estimation based on crop growth models and machine learning algorithms[J]. Transactions of the Chinese Society for Agricultural Machinery, 2023, 54(6): 136-147. (in Chinese with English abstract)

[3] ZHANG Lingxian, TIAN Xiao, LI Yunxia, et al. Quantitative estimation of downy mildew severity in greenhouse cucumbers using visible light spectrum and machine learning[J]. Spectroscopy and Spectral Analysis, 2020, 40(1): 227-232. (in Chinese with English abstract)

[4] ZHANG Kaixing, LV Gaolong, JIA Hao, et al. Identification of corn leaf diseases based on image processing and BP neural network[J]. Journal of Chinese Agricultural Mechanization, 2019, 40(8): 122-126. (in Chinese with English abstract)

[5] LI Tianhua, SUN Meng, DING Xiaoming, et al. Maturity stage tomato recognition method based on YOLOv4+HSV[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2021, 37(21): 183-190. (in Chinese with English abstract)

[6] LIU Yijun, HE Yakai, WU Xiaomei, et al. Detection method for potato sprouting and surface damage based on improved Faster R-CNN[J]. Transactions of the Chinese Society for Agricultural Machinery, 2024, 55(1): 371-378. (in Chinese with English abstract)

[7] LE V N T, TRUONG G, ALAMEH K. Detecting weeds from crops under complex field environments based on Faster RCNN[C]//2020 IEEE Eighth International Conference on Communications and Electronics (ICCE). Phu Quoc Island, Vietnam: IEEE, 2021: 350-355.

[8] YANG Jian, QIAN Zhen, ZHANG Yanjun, et al. Real-time tomato recognition in complex environments using improved YOLOv4-tiny[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2022, 38(9): 215-221. (in Chinese with English abstract)

[9] ZHOU Z X, SONG Z Z, FU L S, et al. Real-time kiwifruit detection in orchard using deep learning on Android™ smartphones for yield estimation[J]. Computers and Electronics in Agriculture, 2020, 179: 105856. doi: 10.1016/j.compag.2020.105856

[10] YAN B, FAN P, LEI X, et al. A real-time apple targets detection method for picking robot based on improved YOLOv5[J]. Remote Sensing, 2021, 13(9): 1619. doi: 10.3390/rs13091619

[11] FAN Tianhao, GU Jinan, WANG Wenbo, et al. Lightweight honeysuckle recognition method based on improved YOLOv5s[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2023, 39(11): 192-200. (in Chinese with English abstract)

[12] XU Xin, ZHANG Li, YUE Jibo, et al. Parallel mosaic recognition algorithm for UAV images in farmland environment[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2024, 40(9): 154-163. (in Chinese with English abstract) doi: 10.11975/j.issn.1002-6819.202308207

[13] WANG Zheng, XU Xingshi, HUA Zhixin, et al. Lightweight recognition for the oestrus behavior of dairy cows combining YOLOv5n and channel pruning[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2022, 38(23): 130-140. (in Chinese with English abstract)

[14] YUE Kai, ZHANG Pengchao, WANG Lei, et al. Recognizing citrus in complex environment using improved YOLOv8n[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2024, 40(8): 152-158. (in Chinese with English abstract)

[15] XIAO B, NGUYEN M, YAN W Q. Fruit ripeness identification using YOLOv8 model[J]. Multimedia Tools and Applications, 2024, 83(9): 28039-28056.

[16] WANG K, LIEW J H, ZOU Y, et al. PANet: Few-shot image semantic segmentation with prototype alignment[C]//Proceedings of the IEEE/CVF International Conference on Computer Vision. Seoul, Korea (South): IEEE, 2019: 9197-9206.

[17] GAO Ang, LIANG Xingzhu, XIA Chenxing, et al. An improved dense pedestrian detection algorithm for YOLOv8[J]. Journal of Graphics, 2023, 44(5): 890-898. (in Chinese with English abstract)

[18] WU Xiangning, HE Peng, DENG Zhonggang, et al. A deep learning model for small target detection based on attention mechanism[J]. Computer Engineering and Science, 2021, 43(1): 95-104. (in Chinese with English abstract) doi: 10.3969/j.issn.1007-130X.2021.01.012

[19] CHEN Chao, WU Bin. An improved residual depth network for multi-target classification[J]. Computerized Measurement and Control, 2023, 31(7): 199-206. (in Chinese with English abstract)

[20] WANG Dafu, WANG Jing, SHI Yukai, et al. Research on image privacy target detection based on deep migration learning[J]. Journal of Graphics, 2023, 44(6): 1112-1120. (in Chinese with English abstract)

[21] DONG X, LIU Y, DAI J. Concrete surface crack detection algorithm based on improved YOLOv8[J]. Sensors, 2024, 24(16): 5252.

[22] ZHANG Z, LI Y, BAI Y, et al. Convolutional graph neural networks-based research on estimating heavy metal concentrations in a soil-rice system[J]. Environmental Science and Pollution Research, 2023, 30(15): 44100-44111. doi: 10.1007/s11356-023-25358-1

[23] LIN T Y, GOYAL P, GIRSHICK R, et al. Focal loss for dense object detection[C]//Proceedings of the IEEE international conference on computer vision. Venice, Italy: IEEE, 2017: 2980-2988.

[24] HAN K, WANG Y, TIAN Q, et al. GhostNet: More features from cheap operations[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Seattle, WA, USA: IEEE, 2020: 1580-1589.

[25] WANG J, LIAO X, WANG Y, et al. M-SKSNet: Multi-Scale spatial kernel selection for image segmentation of damaged road markings[J]. Remote Sensing, 2024, 16(9): 1476. doi: 10.3390/rs16091476

[26] CAI J, LIU X, HUANG R, et al. A review of research intrusion monitoring technology for railway carrier equipment[C]//International Conference on Electrical and Information Technologies for Rail Transportation. Singapore: Springer Nature Singapore, 2023: 546-554.

[27] ZHANG Lifeng, TIAN Ying. Improved multi-scale lightweight vehicle target detection algorithm for YOLOv8[J]. Computer Engineering and Applications, 2024, 60(3): 129-137. (in Chinese with English abstract)

[28] ZHANG C, ZHANG Y, CHANG Z, et al. Sperm YOLOv8E-TrackEVD: A novel approach for sperm detection and tracking[J]. Sensors, 2024, 24(11): 3493. doi: 10.3390/s24113493

[29] YANG G, LEI J, ZHU Z, et al. AFPN: asymptotic feature pyramid network for object detection[C]//2023 IEEE International Conference on Systems, Man, and Cybernetics (SMC). Oahu, Hawaii: IEEE, 2023: 2184-2189.

[30] WANG C Y, BOCHKOVSKIY A, LIAO H Y M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Vancouver, BC, Canada: IEEE, 2023: 7464-7475.

[31] LI Y, ZENG J, SHAN S, et al. Occlusion aware facial expression recognition using CNN with attention mechanism[J]. IEEE Transactions on Image Processing, 2018, 28(5): 2439-2450.

[32] HAO Jianjun, BING Zhenkai, YANG Shuhua, et al. Detection of green walnut by improved YOLOv3[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2022, 38(14): 183-190. (in Chinese with English abstract)

[33] XU Yang, XIONG Juju, LI Lun, et al. Detecting pepper cluster using improved YOLOv5s[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2023, 39(16): 283-290. (in Chinese with English abstract)

[34] NORKOBIL SAYDIRASULOVICH S, ABDUSALOMOV A, JAMIL M K, et al. A YOLOv6-based improved fire detection approach for smart city environments[J]. Sensors, 2023, 23(6): 3161. doi: 10.3390/s23063161

[35] WANG Wei, HONG Xuefeng, LEI Songgui. Intelligent inspection and maintenance of electromechanical equipment based on MR[J]. Journal of Graphics, 2022, 43(1): 141-148. (in Chinese with English abstract)

[36] LU D, WANG Y. MAR-YOLOv9: A multi-dataset object detection method for agricultural fields based on YOLOv9[J]. Plos One, 2024, 19(10): e0307643. doi: 10.1371/journal.pone.0307643
